Time series data forecasting is of particular interest to users in many industries who want more insight from their existing data. With the AI era fast approaching, technologies such as machine learning and deep learning are becoming essential elements of modern data analytics. Because predicting trends is one of the major use cases of a time series database, this article shows how to use machine learning algorithms to forecast future values from data stored in a TDengine cluster.

This article walks through an example of forecasting time series data stored in TDengine. We generate sample data for an electricity consumption scenario and then use TDengine and a few Python libraries to forecast how the data will change over the next year.

Introduction

In this example, the user is an electricity provider that collects electricity consumption data from smart meters each day and stores it in a TDengine cluster. The goal is to predict how consumption will grow over the next year so that appropriate actions, such as the deployment of additional equipment, can be taken in advance to support this growth.

The sample data assumes that electricity consumption increases steadily each year as the economy grows. It also reflects a city in the northern hemisphere, where more electricity is generally used in the summer months.

To view the source code for this example, see the GitHub repository at https://github.com/sangshuduo/td-forecasting.

Procedure

This procedure describes how to run the sample code:

- Deploy TDengine on your local machine and ensure that the TDengine server is running. Refer to the documentation for detailed instructions.

- Clone the source code for this demo to your local machine and change into the project directory.

  ```shell
  git clone https://github.com/sangshuduo/td-forecasting
  cd td-forecasting
  ```

- Install the required Python packages. Note that Python 3.6 or later is required.

  ```shell
  python3 -m pip install -r requirements.txt
  ```

- Run the mockdata.py file to generate sample data.

  ```shell
  python3 mockdata.py
  ```

- Run the forecast.py file to forecast next year's data.

  ```shell
  python3 forecast.py
  ```

Note: If an error occurs during image generation, install the libxcb-xinerama0 package.

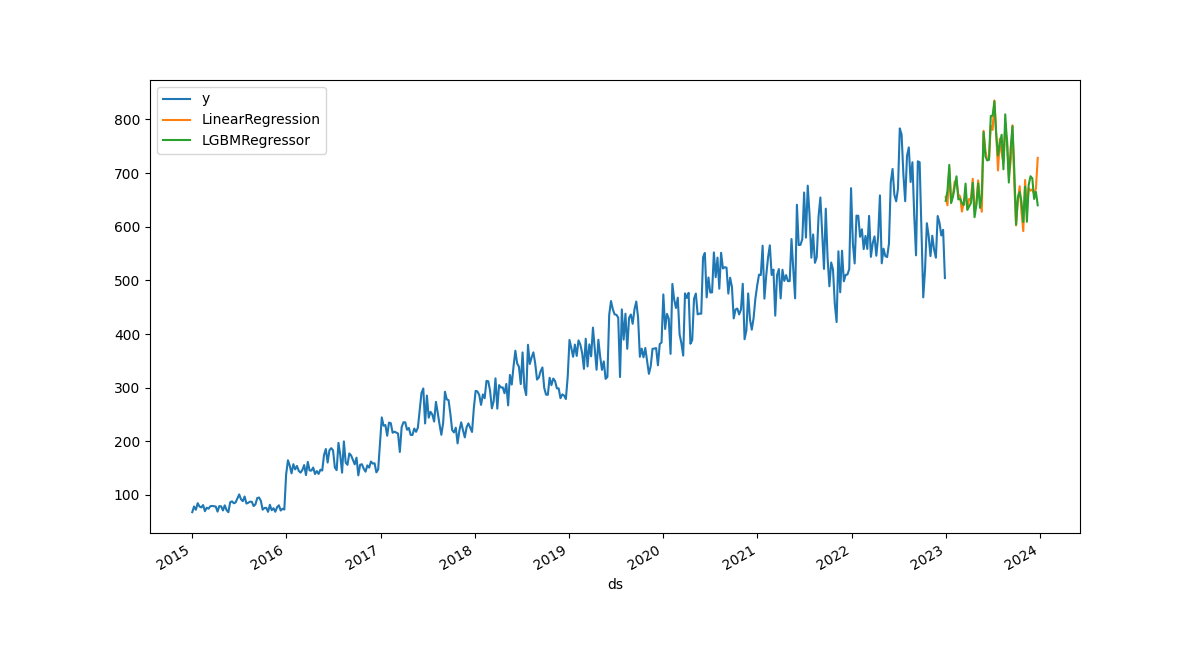

The following figure is displayed.

In the figure, the blue line indicates the sample data for years 2015 to 2023. The orange line is a prediction of the 2023-2024 data based on a linear regression model. The green line is a prediction of the same data based on a gradient boosting model.

How it works

Sample data generation

The mockdata.py file generates sample data for an electricity consumption scenario. In this scenario, it is assumed that consumption grows each year and is higher in the summer.
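
To make these two assumptions concrete, the following minimal sketch computes the smallest and largest daily readings that the insert_rec_per_month() function can generate for a given month. The helper name daily_value_range is hypothetical and not part of the repository; it only repeats the same arithmetic.

```python
from typing import Tuple


def daily_value_range(year: int, month: int) -> Tuple[int, int]:
    """Return the (min, max) daily reading the mockdata.py formula can produce.

    Hypothetical helper: it repeats the arithmetic of insert_rec_per_month(),
    where num = base * randint(5, factor) + randint(0, factor).
    """
    # The base level grows with every year after 2014.
    increment = (year - 2014) * 1.1
    base = int(10 * increment)
    # June through September (summer) allow a higher multiplier.
    factor = 10 if 5 < month < 10 else 8
    # randint() is inclusive at both ends, so these are the extremes.
    low = base * 5 + 0
    high = base * factor + factor
    return low, high


for year in (2015, 2016):
    print(year, "January:", daily_value_range(year, 1), "July:", daily_value_range(year, 7))
```

Because the base level rises by 11 each year and the summer ceiling is higher than the rest of the year, the generated series shows both an upward trend and a yearly seasonal peak.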

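```python
from calendar import monthrange
from random import randint

...

def insert_rec_per_month(conn, db_name, table_name, year, month):
    # Consumption grows linearly with the number of years since 2014.
    increment = (year - 2014) * 1.1
    base = int(10 * increment)
    # June through September (summer) allow higher readings.
    if month < 10 and month > 5:
        factor = 10
    else:
        factor = 8
    # Insert one reading for each day of the month, at midnight.
    for day in range(1, monthrange(year, month)[1] + 1):
        num = base * randint(5, factor) + randint(0, factor)
        sql = f"INSERT INTO {db_name}.{table_name} VALUES ('{year}-{month}-{day} 00:00:00.000', {num})"
        try:
            conn.execute(sql)
        except Exception as e:
            # Report the failing statement and continue with the next day.
            print(f"command: {sql}")
            print(e)

...
```

Time series data forecasting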

The forecast.py file forecasts next year’s data based on the sample data generated. First, the necessary modules are imported:

```python
import argparse

import lightgbm as lgb
import matplotlib.pyplot as plt
import mlforecast
import pandas as pd
from mlforecast.target_transforms import Differences
from sklearn.linear_model import LinearRegression
from sqlalchemy import create_engine, text

...
```

These modules are described as follows:

- argparse is the Python standard library module for parsing command-line options such as --dump.

- lightgbm is the Python package for LightGBM, a gradient boosting framework that uses tree-based learning algorithms.

- Matplotlib is one of the most popular Python libraries for visualization.

- mlforecast is a framework for time series forecasting with machine learning models.

- pandas is a widely used library for data manipulation and analysis.

- scikit-learn (sklearn) is a library that implements popular machine learning algorithms, including linear regression.

- SQLAlchemy is a Python SQL toolkit and object relational mapper that gives application developers the full power and flexibility of SQL.

Next, a connection to TDengine is established:

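```python
...

# Connect to the "power" database through the TDengine SQLAlchemy dialect.
engine = create_engine("taos://root:taosdata@localhost:6030/power")
conn = engine.connect()
print("Connected to the TDengine ...")

# Weekly averages: window start time as "ds", mean reading as "y".
df = pd.read_sql(
    text("select _wstart as ds, avg(num) as y from power.meters interval(1w)"), conn
)
conn.close()

...
```

This code uses the TDengine Python client library (taospy) with SQLAlchemy to connect to the power database, and pandas to read the query result into a DataFrame. The AVG() function combined with the INTERVAL(1w) clause computes weekly averages in the TDengine cluster. The _wstart pseudocolumn returns the start time of each window; aliasing it as ds and the average as y matches the column names that mlforecast expects by default.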
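
If you want to check what this query returns without a running TDengine instance, the following sketch approximates the same weekly aggregation in pandas. It assumes a DataFrame of daily readings with hypothetical columns ts and num, and uses fixed 7-day windows labelled by their start time, similar to _wstart. The window boundaries may not line up exactly with how TDengine aligns INTERVAL(1w) windows, so treat the result as an approximation.

```python
import pandas as pd


def weekly_average(daily: pd.DataFrame) -> pd.DataFrame:
    """Approximate `select _wstart as ds, avg(num) as y ... interval(1w)`.

    Uses fixed 7-day windows that start at the first timestamp and are
    labelled by their start time. The column names ts/num are assumptions.
    """
    weekly = (
        daily.set_index("ts")["num"]
        .resample("7D", origin="start")  # left-closed, left-labelled windows
        .mean()
    )
    return weekly.rename("y").rename_axis("ds").reset_index()


# Hypothetical sample: 14 days of readings with values 0..13.
daily = pd.DataFrame({
    "ts": pd.date_range("2015-01-01", periods=14, freq="D"),
    "num": range(14),
})
print(weekly_average(daily))
```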

Now that we have generated our sample data and connected to TDengine, we can forecast future data:

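```python
...

# mlforecast needs a series identifier; all rows belong to a single series.
df.insert(0, column="unique_id", value="unique_id")
print("Forecasting ...")
forecast = mlforecast.MLForecast(
    models=[LinearRegression(), lgb.LGBMRegressor()],
    freq="W",  # weekly data
    lags=[52],  # feature: the value from 52 weeks (one year) earlier
    target_transforms=[Differences([52])],  # remove the yearly seasonal pattern
)
forecast.fit(df)
# Forecast the next 52 weeks with both models.
predicts = forecast.predict(52)
# Plot the history and both forecasts on a single chart.
pd.concat([df, predicts]).set_index("ds").plot(figsize=(12, 8))

...
```

This code uses the mlforecast module to forecast with both linear regression and gradient boosting (LightGBM), and then combines the results into a single graph for comparison. Because the data is weekly and the consumption pattern repeats every year, Differences([52]) subtracts the value from 52 weeks earlier before training, and mlforecast restores the original scale when it predicts. lags=[52] gives the models the (differenced) value from the same week of the previous year as an input feature, and predict(52) produces forecasts for the next 52 weeks, that is, one year.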
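
The idea behind Differences([52]) can be illustrated without mlforecast. The following is a simplified sketch of seasonal differencing and its inverse, not mlforecast's internal implementation: a model learns year-over-year changes, and each forecast change is added back to the value from one season earlier. To keep the example small, it uses a hypothetical season length of 4 instead of 52, and the forecast changes are simply copied from the last season as a naive stand-in for a trained model.

```python
import numpy as np


def seasonal_difference(y, season):
    """Return y[t] - y[t - season]; the first `season` points are dropped."""
    y = np.asarray(y, dtype=float)
    return y[season:] - y[:-season]


def invert_seasonal_difference(history, diff_forecast, season):
    """Turn forecast differences back into levels: y[t] = d[t] + y[t - season]."""
    values = [float(v) for v in history]
    for d in diff_forecast:
        # values[-season] is the observation exactly one season earlier.
        values.append(float(d) + values[-season])
    return np.array(values[len(history):])


# Hypothetical series: each "year" (4 steps) is 1 unit higher than the last.
history = [1, 2, 3, 4, 2, 3, 4, 5]
diffs = seasonal_difference(history, season=4)
print(diffs)  # [1. 1. 1. 1.]

# Naive forecast: repeat the last season's year-over-year changes.
next_season = invert_seasonal_difference(history, diffs[-4:], season=4)
print(next_season)  # [3. 4. 5. 6.]
```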

Finally, we display the results on screen or as an image file:

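```python
...

# Save the chart to a file if --dump was given; otherwise open a window.
if args.dump:
    plt.savefig(args.dump)
else:
    plt.show()
```

You can run forecast.py with the `--dump <filename>` parameter to save your results to disk instead of displaying them, for example `python3 forecast.py --dump forecast.png`.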
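
The excerpt above does not show how args is created. A minimal sketch of a parser that provides the --dump option might look like the following; the function name build_parser, the description, and the help text are assumptions, and the repository's actual parser may define additional options.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser exposing the --dump option used by forecast.py."""
    parser = argparse.ArgumentParser(
        description="Forecast electricity consumption stored in TDengine."
    )
    # Without --dump, args.dump is None, so the chart is shown with plt.show().
    parser.add_argument(
        "--dump",
        metavar="FILENAME",
        help="save the chart to FILENAME instead of displaying it",
    )
    return parser


args = build_parser().parse_args(["--dump", "forecast.png"])
print(args.dump)  # forecast.png
```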

Conclusion

This article demonstrates a basic example of time series data forecasting in TDengine. With a short Python script, you can easily and quickly predict electricity consumption from historical data stored in TDengine.