Before I encountered the industrial internet in 2018, I knew nothing about time-series databases (TSDBs). Through my work on standards, I began interacting with TSDB vendors, from energetic startups to established traditional IT companies. Suddenly, TSDB products seemed to be springing up like mushrooms after rain.

The Rise and Evolution of Time-Series Databases

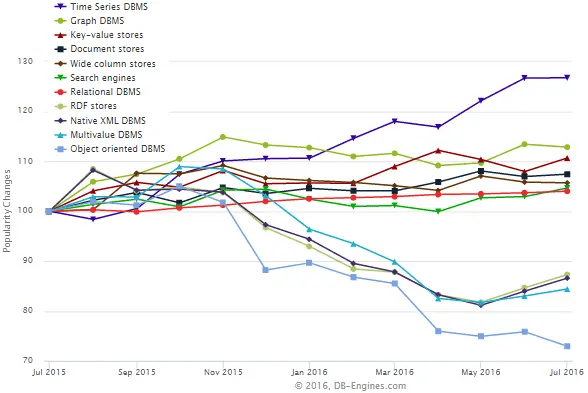

In fact, the trend began around 2016. According to a DB-Engines chart, the popularity of TSDBs grew by 26% over the 12 months of 2016, more than double the growth rate of graph databases, which ranked second.

So we accelerated our learning, hoping to establish standards quickly and give enterprises a reference for technology selection.

Yet this kind of database had already been around for more than a decade, under another name: the data historian.

Many colleagues with a background in industrial IT brought up the concept of “data historians” and said, “Our functionality is essentially the same.” This confused me and made me want to understand how the two types of databases relate. Could they be classified as the same category? At the time, little information was available online comparing them.

This article collects what I learned along the way, covering the origins, specific differences, and emerging trends of these two kinds of databases.

Let’s start with some concepts for context.

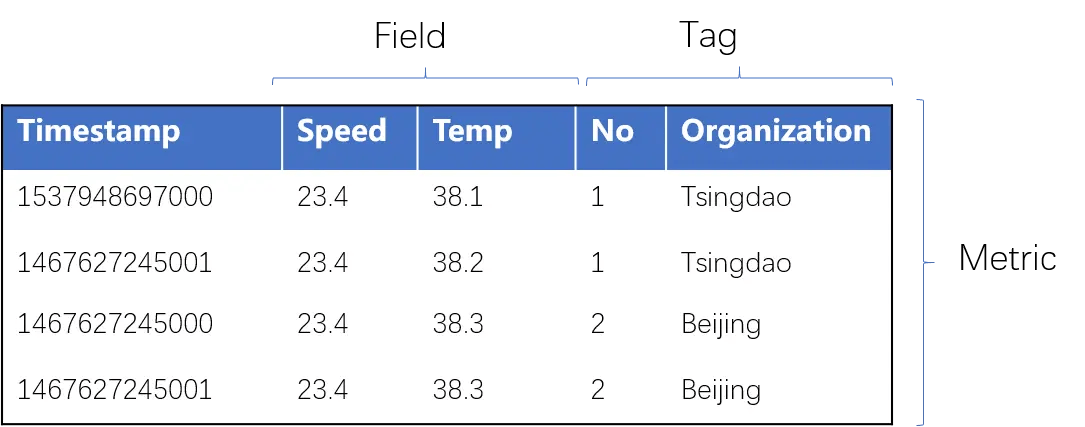

- Time-Series Data: A series of data points indexed in time order and generated at fixed or variable frequencies. Each data point consists of three key elements: a timestamp, tags, and metrics.

- Time-Series Database: A database designed to store vast amounts of time-series data.
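To make the three elements concrete, here is a minimal Python sketch of a single data point. The class name `DataPoint`, the helper `make_series`, and the choice of millisecond Unix timestamps are illustrative assumptions, not any particular database's format.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DataPoint:
    """One time-series data point built from the three key elements."""
    timestamp: int                                           # Unix time in ms (assumed unit)
    tags: Dict[str, str] = field(default_factory=dict)       # what is measured, e.g. device ID
    metrics: Dict[str, float] = field(default_factory=dict)  # measured values at that instant


def make_series(points: List[DataPoint]) -> List[DataPoint]:
    """Return the points indexed in time order."""
    return sorted(points, key=lambda p: p.timestamp)


series = make_series([
    DataPoint(1_700_000_060_000, {"device": "fan-01"}, {"temperature": 41.2}),
    DataPoint(1_700_000_000_000, {"device": "fan-01"}, {"temperature": 40.8}),
])
print([p.timestamp for p in series])  # [1700000000000, 1700000060000]
```

Tags identify *what* is being measured and rarely change, while metrics carry the values that change at every timestamp; most storage designs treat the two very differently.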

Are We Long-Lost Siblings?

Data historians originated in traditional industries. Developed decades ago and built on mature technology, they mainly support the rapid writing, storage, and querying of large volumes of measurement data in industrial settings, sometimes including real-time feedback control.

Time-series databases, on the other hand, originated in the internet industry and rose with the advent of IoT. They primarily support fast writing and analysis of vast amounts of network monitoring and sensor data.

Let’s first examine why data historians were designed specifically for industrial scenarios. More than 80% of industrial data share the same characteristics: it is timestamped, generated sequentially, and consists of large volumes of structured data collected at high frequency. For instance, a medium-sized industrial enterprise might have 50,000 to 100,000 sensor points generating hundreds of gigabytes of data per day. These enterprises typically need to retain data for long periods so they can query historical trends at any time. This basic requirement shapes the core capabilities of traditional data historians, summarized as follows:

- High-Speed Ingestion: Industrial data historians must sustain high write speeds. In the process industries, sensors are installed at every step and sampled at high frequency, producing extremely high write concurrency, sometimes millions of data points per second. Meeting this requires both efficient software and high-performance servers.

- Fast Query Response: Queries fall into two types: real-time requests that must promptly reflect the current system status, and queries over vast historical data, sometimes spanning a whole year, that aggregate values over specific periods.

- Strong Data Compression: Monitoring data must be stored for a long time, sometimes 5 to 10 years, so effective compression, either lossless or lossy, is essential. Lossy compression achieves higher ratios, sometimes 30:1 to 40:1, but requires well-designed algorithms so that the essential features of the data are preserved when it is restored.

- Rich Toolsets: Traditional data historian solutions typically include comprehensive toolkits accumulated over many years, such as support for numerous protocols and data models for various scenarios, which are a major competitive advantage in industrial software.

- Pursuit of Extreme Stability: Industrial applications demand very high stability, with redundancy for high availability and exceptional software quality so the system can run continuously for years without failure.
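As an illustration of the lossy compression mentioned above, the sketch below implements simple deadband (exception) filtering: a sample is stored only if it differs from the last stored value by more than a fixed tolerance, and values are restored by holding the last stored value. This is one of the simplest lossy schemes, chosen here for clarity; commercial historians often use more elaborate algorithms such as swinging-door trending. The function names, sample data, and tolerance are hypothetical.

```python
import bisect
from typing import List, Tuple

Sample = Tuple[float, float]  # (timestamp, value); timestamps assumed strictly increasing


def deadband_compress(samples: List[Sample], deadband: float) -> List[Sample]:
    """Keep a sample only if it differs from the last kept value by more than
    `deadband`. The first and last samples are always kept."""
    if not samples:
        return []
    kept = [samples[0]]
    for ts, value in samples[1:-1]:
        if abs(value - kept[-1][1]) > deadband:
            kept.append((ts, value))
    if len(samples) > 1:
        kept.append(samples[-1])
    return kept


def restore(kept: List[Sample], timestamps: List[float]) -> List[float]:
    """Step-hold restoration: each timestamp gets the last kept value at or before it."""
    kept_ts = [ts for ts, _ in kept]
    restored = []
    for t in timestamps:
        i = bisect.bisect_right(kept_ts, t) - 1
        restored.append(kept[max(i, 0)][1])
    return restored


samples = [(0, 20.0), (1, 20.1), (2, 20.05), (3, 21.0), (4, 21.1), (5, 21.05), (6, 22.5)]
kept = deadband_compress(samples, deadband=0.5)
restored = restore(kept, [ts for ts, _ in samples])
print(kept)                       # [(0, 20.0), (3, 21.0), (6, 22.5)]
print(len(samples) / len(kept))   # compression ratio: 7 samples stored as 3
```

With step-hold restoration, every discarded sample lies within the deadband of the value restored for it, so the tolerance directly bounds the reconstruction error; widening the deadband raises the compression ratio at the cost of fidelity.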

Now let’s look at the environment in which time-series databases emerged. As the internet developed rapidly, advances in communication technology and falling data transmission costs ushered in the IoT era. Data collection extended beyond monitoring internet services to devices such as smartphones, fitness trackers, shared bicycles, and cars, all continuously generating data. This data is collected and sent to the cloud for big data analysis, helping businesses improve efficiency and service quality.

The characteristics of data in internet scenarios turn out to be similar to those of industrial real-time data:

- Small Records, Large Volume: Each record is short, but the total volume is huge.

- Timestamped and Sequential: Data are timestamped and generated sequentially.

- Structured Data: Data are mostly structured, describing specific parameters at specific times.

- Higher Write Frequency: Data are written more frequently than queried.

- Rare Updates: Stored data rarely require updates.

- Time-Range Analysis: Users are more interested in data over time periods rather than specific points.

- Time- or Range-Based Queries: Data queries and analyses are mostly based on a specific time period or numerical range.

- Statistical and Visualization Needs: Data are often queried for statistical analysis and visualization.
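Several of these characteristics (time-range queries, interest in periods rather than single points, statistics for visualization) typically come together in a downsampling query. The following minimal sketch filters raw points to a half-open time window and averages them into fixed-width buckets; the function name and the second-based integer timestamps are assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple


def downsample(points: List[Tuple[int, float]], start: int, end: int,
               bucket: int) -> Dict[int, float]:
    """Average values with start <= timestamp < end into fixed-width buckets.

    Keys of the result are bucket start times; empty buckets are omitted."""
    groups: Dict[int, List[float]] = defaultdict(list)
    for ts, value in points:
        if start <= ts < end:  # time-range filter
            bucket_start = start + (ts - start) // bucket * bucket
            groups[bucket_start].append(value)
    return {b: mean(vals) for b, vals in sorted(groups.items())}


points = [(0, 1.0), (30, 3.0), (60, 5.0), (90, 7.0), (120, 9.0)]
print(downsample(points, start=0, end=120, bucket=60))  # {0: 2.0, 60: 6.0}
```

A chart covering a year of per-second data cannot plot every point anyway, so queries like this, returning one value per bucket, are what dashboards usually issue.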

These characteristics show that, despite their different origins, the two types of databases face similar challenges and serve similar needs, so their functionality overlaps. It is like long-lost siblings recognizing each other at first sight.

Do You Want to Replace Me? It’s Not That Easy

As IoT and the industrial internet drive new production methods, organizational approaches, and business models, the traditional architecture of data historians is being challenged by growing numbers of sensors, rising data volumes, and heavier demands for big data analysis. Several issues need to be addressed:

- Scalability Bottlenecks: Traditional architectures deliver high single-machine performance and can scale linearly by adding machines, but they lack the dynamic scalability of distributed systems, so expanding capacity is difficult without advance planning.

- Incompatibility with Big Data Ecosystems: Data is only valuable once it is understood and used, and the big data industry already has mature solutions for massive data storage and analysis, such as the Hadoop and Spark ecosystems. To adopt these newer big data technologies, industrial enterprises often have to upgrade or replace their existing systems.

- High Costs: Traditional industrial data historian solutions are expensive and often affordable only for large enterprises. As new technologies spread, small and medium enterprises also recognize the importance of data, but they want more affordable solutions.

This is where internet-born time-series databases have inherent advantages:

- Distributed Architecture: Traditional data historians usually run a primary/backup architecture on high-performance machines, relying on high-quality code for stability and on high compression ratios to cope with limited storage. In contrast, the distributed architecture of time-series databases allows easy horizontal scaling, reduces reliance on expensive hardware, and achieves high availability through clustering, significantly lowering costs.

- More Flexible Data Models: Because of the particular nature of industrial scenarios, traditional data historians often use a single-value model: each monitored parameter is treated as a separate data point, and a model is built for each data point when it is written. For example, the temperature of one fan is a data point, so ten indicators on each of ten fans make 100 data points, each with its own descriptive information (name, accuracy, data type, digital/analog, etc.). Queries retrieve values point by point, and the single-value model is very efficient for writing.

Time-series databases, by contrast, have begun adopting multi-value models, an approach similar to object-oriented design. For example, a wind turbine can be modeled as one object with multiple measurements, such as temperature and pressure, plus tags such as coordinates and identifiers. This approach is better suited to analytical workloads when serving external applications. The two models can also be converted into each other: many databases expose a multi-value model externally but use a single-value model for underlying storage.
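The conversion between the two models can be sketched as a simple pivot. This minimal example assumes, hypothetically, that single-value point names follow a `device.metric` convention; real historians and TSDBs each have their own naming and metadata schemes.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

SingleValue = Tuple[str, int, float]                  # (point_name, timestamp, value)
MultiValue = Dict[Tuple[str, int], Dict[str, float]]  # (device, timestamp) -> {metric: value}


def to_multi_value(records: List[SingleValue]) -> MultiValue:
    """Pivot single-value records into one multi-value row per device and timestamp."""
    rows: Dict[Tuple[str, int], Dict[str, float]] = defaultdict(dict)
    for point, ts, value in records:
        device, metric = point.split(".", 1)  # assumes "device.metric" naming
        rows[(device, ts)][metric] = value
    return dict(rows)


def to_single_value(rows: MultiValue) -> List[SingleValue]:
    """Flatten multi-value rows back into single-value records, sorted by name and time."""
    return sorted(
        (f"{device}.{metric}", ts, value)
        for (device, ts), metrics in rows.items()
        for metric, value in metrics.items()
    )


records = [
    ("turbine01.temperature", 0, 65.2),
    ("turbine01.pressure", 0, 1.8),
    ("turbine02.temperature", 0, 63.9),
]
rows = to_multi_value(records)
print(rows[("turbine01", 0)])                    # {'temperature': 65.2, 'pressure': 1.8}
print(to_single_value(rows) == sorted(records))  # True
```

The round trip is lossless, which is why a database can present the object-like multi-value view to applications while still storing one series per measured parameter underneath.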

Currently, most time-series databases use highly scalable NoSQL databases as their underlying storage, whose flexible data models suit multi-value time-series data. These stores scale easily, so performance can be increased simply by adding machines, and they offer high query efficiency, low cost thanks to open-source software, and seamless integration with big data ecosystems. Here are some examples of TSDBs and their underlying storage models:

| Time-Series Database | Database Model |
|---|---|
| InfluxDB | Key-value |
| OpenTSDB | Wide column store |
| Graphite | Key-value/Wide column store |
| KairosDB | Wide column store |
| Prometheus | Key-value |

Underlying storage models of open-source TSDBs
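To illustrate how a key-value or wide-column store can hold time-series data, the sketch below uses a toy ordered key-value store. Each field of a multi-value point becomes its own entry (the single-value layout described earlier), keyed by a series key plus a zero-padded timestamp, so one series' data sits contiguously in time order and a time-range query becomes a key-range scan. The key layout, class, and function names are hypothetical; they are not the actual storage format of InfluxDB, OpenTSDB, or any other product in the table.

```python
import bisect
from typing import Dict, List, Tuple


def series_key(measurement: str, tags: Dict[str, str], field: str) -> str:
    """Hypothetical canonical series key: tags are sorted so their order never matters.
    Assumes names and tag values contain no ',', '=' or '#'."""
    tag_part = ",".join(f"{k}={v}" for k, v in sorted(tags.items()))
    return f"{measurement},{tag_part}#{field}"


def row_key(series: str, ts: int) -> str:
    # Zero-padding makes lexicographic order match numeric time order
    # (assumes non-negative integer timestamps of at most 20 digits).
    return f"{series}#{ts:020d}"


class SortedKV:
    """Toy ordered key-value store standing in for an LSM tree or wide-column store."""

    def __init__(self) -> None:
        self.keys: List[str] = []
        self.values: Dict[str, float] = {}

    def put(self, key: str, value: float) -> None:
        if key not in self.values:
            bisect.insort(self.keys, key)
        self.values[key] = value

    def scan(self, lo: str, hi: str) -> List[Tuple[str, float]]:
        """Entries with lo <= key < hi, in key order."""
        i = bisect.bisect_left(self.keys, lo)
        j = bisect.bisect_left(self.keys, hi)
        return [(k, self.values[k]) for k in self.keys[i:j]]


def write_point(db: SortedKV, measurement: str, tags: Dict[str, str],
                fields: Dict[str, float], ts: int) -> None:
    # A multi-value point is stored as one single-value entry per field.
    for name, value in fields.items():
        db.put(row_key(series_key(measurement, tags, name), ts), value)


def query_range(db: SortedKV, measurement: str, tags: Dict[str, str],
                field: str, start: int, end: int) -> List[Tuple[int, float]]:
    # A time-range query on one series becomes a contiguous key-range scan.
    series = series_key(measurement, tags, field)
    entries = db.scan(row_key(series, start), row_key(series, end))
    return [(int(k.rsplit("#", 1)[1]), v) for k, v in entries]


db = SortedKV()
for ts, temp in [(100, 65.0), (200, 66.5), (300, 67.1)]:
    write_point(db, "turbine", {"site": "north", "id": "t01"},
                {"temperature": temp, "pressure": 1.8}, ts)
# Tag order in the query differs from the write; the sorted series key still matches.
print(query_range(db, "turbine", {"id": "t01", "site": "north"}, "temperature", 100, 300))
# -> [(100, 65.0), (200, 66.5)]
```

Because keys are kept sorted, adding machines can be as simple as splitting the key space into ranges and assigning each range to a node, which is one reason these stores scale out easily.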

However, using NoSQL databases also means giving up some features. Transaction support is typically missing, so data consistency must be ensured by other means, and SQL support is lacking. Because SQL is a standard query language that is widely known and easy to learn, time-series database vendors are integrating SQL engines to lower the barrier to entry.
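For a sense of why SQL lowers the barrier, here is a time-bucketed aggregation written in plain SQL. It uses SQLite through Python's standard `sqlite3` module purely as a stand-in; the table schema and data are hypothetical, and TSDBs with SQL engines usually offer their own time-bucketing functions and dialect extensions.

```python
import sqlite3

# In-memory SQLite database standing in for a SQL-capable TSDB (hypothetical schema).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (ts INTEGER, device TEXT, temperature REAL)")
conn.executemany(
    "INSERT INTO readings VALUES (?, ?, ?)",
    [(0, "fan-01", 40.0), (30, "fan-01", 42.0), (60, "fan-01", 44.0),
     (90, "fan-01", 46.0), (0, "fan-02", 35.0)],
)

# Average temperature per device in 60-second buckets for 0 <= ts < 120.
# (ts / 60) is integer division here because both operands are integers.
rows = conn.execute(
    """
    SELECT device, (ts / 60) * 60 AS bucket, AVG(temperature) AS avg_temp
    FROM readings
    WHERE ts >= ? AND ts < ?
    GROUP BY device, bucket
    ORDER BY device, bucket
    """,
    (0, 120),
).fetchall()
print(rows)  # [('fan-01', 0, 41.0), ('fan-01', 60, 45.0), ('fan-02', 0, 35.0)]
conn.close()
```

Anyone who knows basic SQL can read and adapt this query, whereas a store-specific API would first have to be learned.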

Are Time-Series Databases the Future?

Replacing traditional data historians is not easy. Historians have been refined over many years to deliver top-notch performance, even supporting feedback control, and they come with comprehensive toolsets. Time-series databases, by contrast, lack this depth of domain knowledge and mainly serve monitoring and analysis. Their reliance on multi-node deployments and their incomplete toolsets are drawbacks, as are their performance and reliability gaps for real-time feedback control.

Moreover, data historian vendors are actively improving their products: they are launching distributed versions and cloud services and building data management and analysis ecosystems, which lets them compete well against internet players.

The Race is On

No one is backing down in this competition. Both types of databases continue to evolve as business needs change, leveraging their strengths and making necessary compromises to stay vital over the long term.

Let’s Progress Together

However technology changes, the ultimate goal is to meet user needs, and design driven by user needs never goes out of style. Here are some emerging demands:

- Richer Query Requirements: In the internet era, query demands extend beyond basic conditional or interpolation queries to include spatial dimensions, richer visualizations, and comprehensive information control.

- Shifting to Cloud Services: Traditional industrial real-time data processing usually relies on private deployments for security and performance reasons, which incurs high costs for machines, software, and services and requires professional maintenance. Cloud services eliminate hardware costs, require only application-level maintenance skills, and offer scalable, pay-as-you-go pricing, which lowers costs. As network and cloud computing technologies mature and cloud services approach the performance and security of private deployments, the move to the cloud is becoming an unstoppable trend.

- Edge Computing: Industrial sites are major proving grounds for IoT, and the growing number of sensors and volume of collected data make centralized real-time analysis increasingly difficult. Edge computing addresses this by handling immediate monitoring on edge devices while large-scale data is stored for later analysis, which increases the value of real-time data and reduces storage burdens. Time-series database vendors are developing edge editions that incorporate stream processing for richer functionality, well suited to industrial IoT scenarios.
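The edge pattern described above can be sketched as a small stream processor: each reading is checked against a threshold immediately on the edge device, while only per-window averages are forwarded upstream, shrinking the volume that must be transmitted and stored centrally. The class name, threshold, and tumbling-window design are illustrative assumptions, and the sketch assumes readings arrive in timestamp order.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class EdgeProcessor:
    """Hypothetical edge-side processor: local alerts plus windowed uploads."""
    threshold: float  # alert when a reading exceeds this value
    window: int       # tumbling-window length in seconds
    alerts: List[Tuple[int, float]] = field(default_factory=list)
    uploads: List[Tuple[int, float]] = field(default_factory=list)
    _window_start: Optional[int] = field(default=None, init=False)
    _values: List[float] = field(default_factory=list, init=False)

    def process(self, ts: int, value: float) -> None:
        # Real-time monitoring happens locally, with no round trip to the cloud.
        if value > self.threshold:
            self.alerts.append((ts, value))
        start = ts - ts % self.window
        # A reading from a new window closes the previous one.
        if self._window_start is not None and start != self._window_start:
            self._flush()
        self._window_start = start
        self._values.append(value)

    def _flush(self) -> None:
        # Forward one average per window instead of every raw reading.
        if self._values:
            avg = sum(self._values) / len(self._values)
            self.uploads.append((self._window_start, avg))
        self._values = []

    def close(self) -> None:
        self._flush()


proc = EdgeProcessor(threshold=80.0, window=60)
for ts, value in [(0, 70.0), (20, 74.0), (40, 87.0), (60, 75.0), (80, 77.0)]:
    proc.process(ts, value)
proc.close()
print(proc.alerts)   # [(40, 87.0)]
print(proc.uploads)  # [(0, 77.0), (60, 76.0)]
```

Here five raw readings become two uploaded values; in practice the reduction depends on sampling rate and window length, and raw data could still be buffered locally if later analysis needs it.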

In Summary

In the course of technological development, both types of databases, data historians and time-series databases, have continuously refined their functionality to meet evolving business needs. Each leverages its strengths, complements the other, and makes necessary compromises. This process of adaptation and improvement is essential for long-term vitality: stagnation causes anxiety, while change brings new life.