We designed an application with the following general workflow. The application is used to enter the IoT device number in advance and then the device is affixed to the animal. The device collects data 20 times per minute, and the collected data types include temperature, humidity, latitude and longitude, movement, pulse etc. The collected data is analyzed at the Edge and the summary results are sent to the cloud server through the 4G network. In the cloud, the corresponding variables, such as weather based on longitude and latitude are obtained and combined with the device data. AI is then used to comprehensively evaluate current health conditions in livestock, such as loss of appetite, fever trends, etc.

Architecture and Implementation

Like traditional IoT projects, this project had high performance requirements for data writing as well as certain aggregate queries. Specifically there are significantly more writes and fewer reads, which is a typical high-concurrency writing scenario. MongoDB was used before but aggregate queries were very inefficient and it was generally inconvenient. Cassandra was given a try but it did not meet the requirements. By chance, we learned about time series databases (TSDB) such as InfluxDB and TDengine. After building the test environment, we tested the two respectively, and finally settled on TDengine. In addition to the clear performance gap between TDengine and InfluxDB, the table data TTL mechanism, data compression, stream computing and other functions provided by TDengine made it a clear winner for our stringent high-performance requirements.

Based on the design principle of the supertable, we use the business related information of livestock as tags, which is also convenient to associate with data in MySQL. Each device exists as a subtable, and the device’s measurements are used as columns in the subtable.

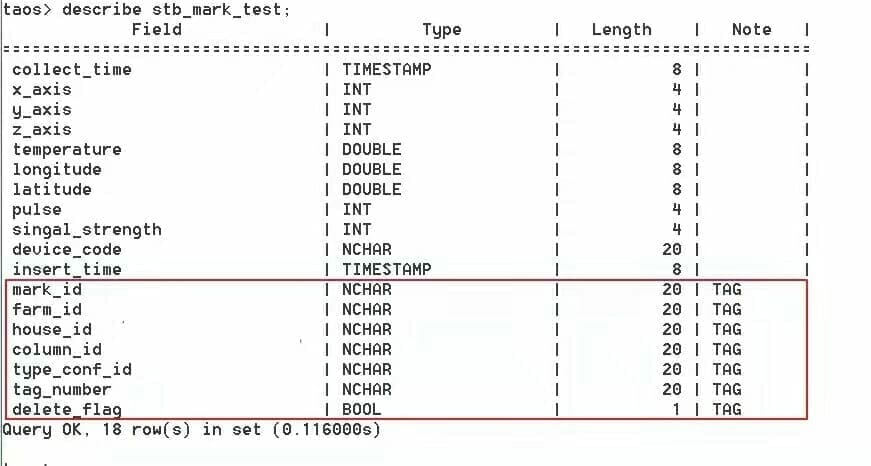

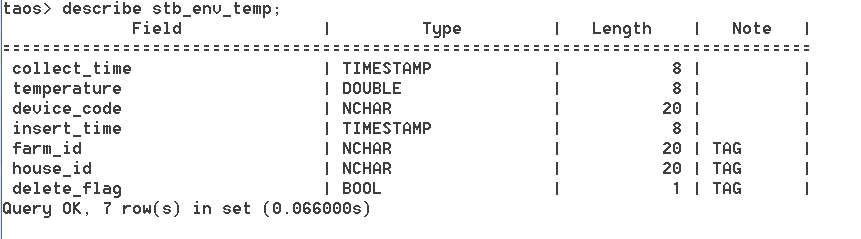

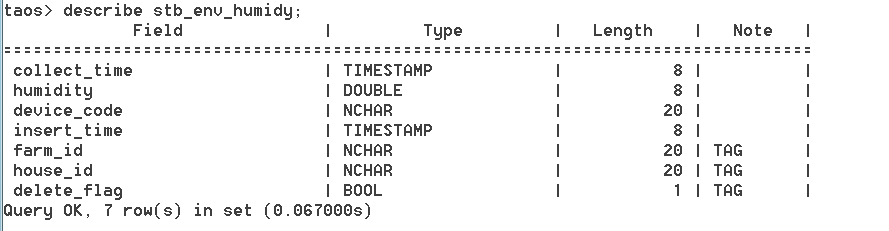

The supertable schema is shown in Figure 1 below. Figures 2 and 3 show the supertable schema for environmental sensors installed in fixed positions on the farm. The temperature and humidity table, in addition to the table that stores the original message data, are not listed here.

At present, all the IoT data tables of our company are designed based on the TDengine supertable. The core livestock supertable, has more tags than other tables. It should be noted that, in order to ensure that the tags in TDengine are consistent with MySQL, whenever the basic attributes of livestock are modified for business reasons, tags need to be modified synchronously in TDengine. The supertable design also allows us to trace possible processing delays and other problems.

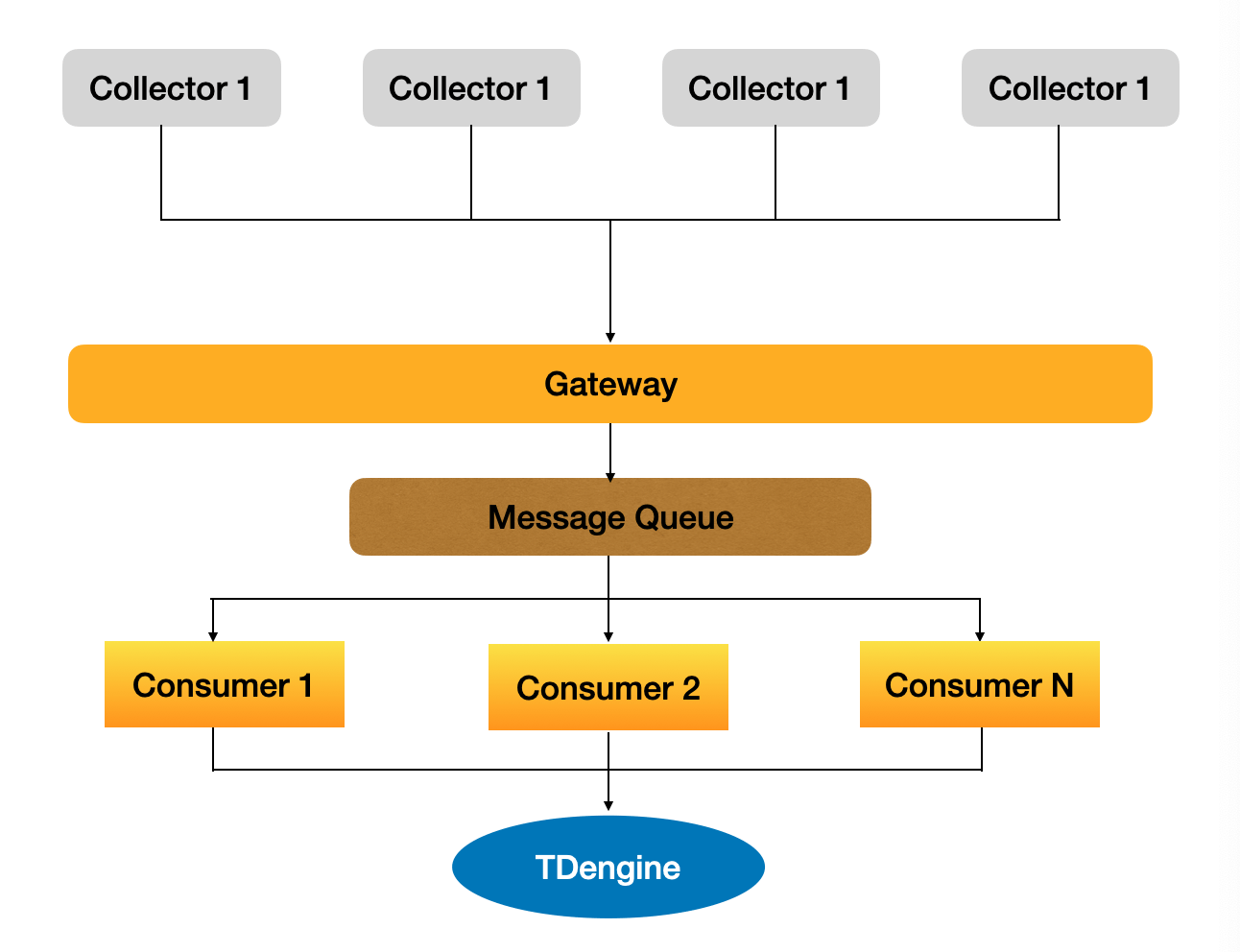

The collect_time in the table is the collection time, and the insert_time is the time when data is inserted into TDengine. If the time difference between the two is large, there may be network problems, collection device failures or insufficient server throughput etc. Having this data, allows us to investigate. The whole process of livestock data collection to data placement is shown in the figure below. The data collected by the collection device is transmitted through the 4G network. The device gateway performs preliminary processing on the data and then pushes it to MQ (Message Queue). The data is then transmitted to subscribers/consumers on MQ and finally to TDengine.

Data Migration and Ramifications

Since we were replacing MongoDB, we had to perform a data migration as follows:

- Newly generated collector data was written into MongoDB as well as TDengine.

- Historical data was gradually migrated into TDengine.

- The data source for AI analysis was changed from MySQL data warehouse, to TDengine.

During the migration process, we also encountered two small issues:

- Since the MySQL+MongoDB solution was used before, all MongoDB statements had to be rewritten as TDengine SQL. Although TDengine supports standard SQL, there are minor differences in details which have to be accounted for.

- Since we provide many services, I initially thought about creating a middleware layer to provide data query to other services in the system. However, since TDengine is a relatively new open source project I decided to let each service connect to TDengine by themselves. All the services can be integrated after the migration and routine operations are stable.

The ramifications after the migration are very clear. When we used MongoDB, the self-built cluster used six, 4-core, 32G servers. After migrating to TDengine, the self-built cluster only used only two, 8-core, 32G machines. This had led to a significant operational cost decline. In terms of specific performance, I simulated the data of more than 6,000 collectors, and the table data totaled about 300 million records. Most of our queries are based on subtables, and only some businesses need to view aggregated operational data. When querying group by+last_row(*) on the supertable, the data can be returned within 1.5s, and querying on the subtable in about 0.1 seconds (select * from son_table limit 10). This meets our business requirements.

Closing Thoughts

With the development of emerging technologies such as the Internet of Things and artificial intelligence, AIoT has become a major trend that cannot be ignored. To store the massive amounts of data, requires both warehouses and transactional databases. However, with a purpose-built product like TDengine, designed with IoT, time-series data in mind, this complexity can be avoided. In a sense, TDengine with it’s stream computing and high-performance query engine, helped save a lot of intermediate steps and resulted in significant cost reduction and increased efficiency. So far, TDengine has been outstanding in cost reduction and performance improvement. We are looking forward to the day when it can support calls to AI models and output the results directly. That will be perfect!