From manufacturing and energy to connected cars and infrastructure, the amount of data being generated in every industry is increasing faster than ever before. Data at this scale can offer astounding new insights into business processes, but making use of it requires a high-performance industrial data platform that can handle it.

Industry leaders have realized that the key to high performance in industrial data processing is deploying a time-series database (TSDB). Some enterprises attempt to run their time-series data workflows on general-purpose databases such as MySQL or MongoDB, but as their business grows, the scale of their data increases exponentially and their data infrastructure is quickly overwhelmed. Costs skyrocket and performance suffers as they are constantly forced to upgrade the hardware of their general-purpose systems just to keep up with the data they generate.

TDengine is a high-performance, scalable time-series database that enables efficient ingestion, processing, and monitoring of petabytes of data per day, generated by billions of sensors and data collectors. Thanks to a data model that takes full advantage of the characteristics of time-series data, TDengine delivers more than ten times the performance of general-purpose platforms while requiring only one-fifth of the storage space. By migrating their data workflows to TDengine, enterprises not only gain faster data ingestion and query response times but can also reduce the total cost of ownership (TCO) of their industrial data operations by 50% or more.

Proven High Performance in Ingestion and Querying

Results from the Time Series Benchmark Suite (TSBS) show that TDengine significantly outperforms other time-series database products in both ingesting and querying big data, while using far fewer CPU and storage resources.

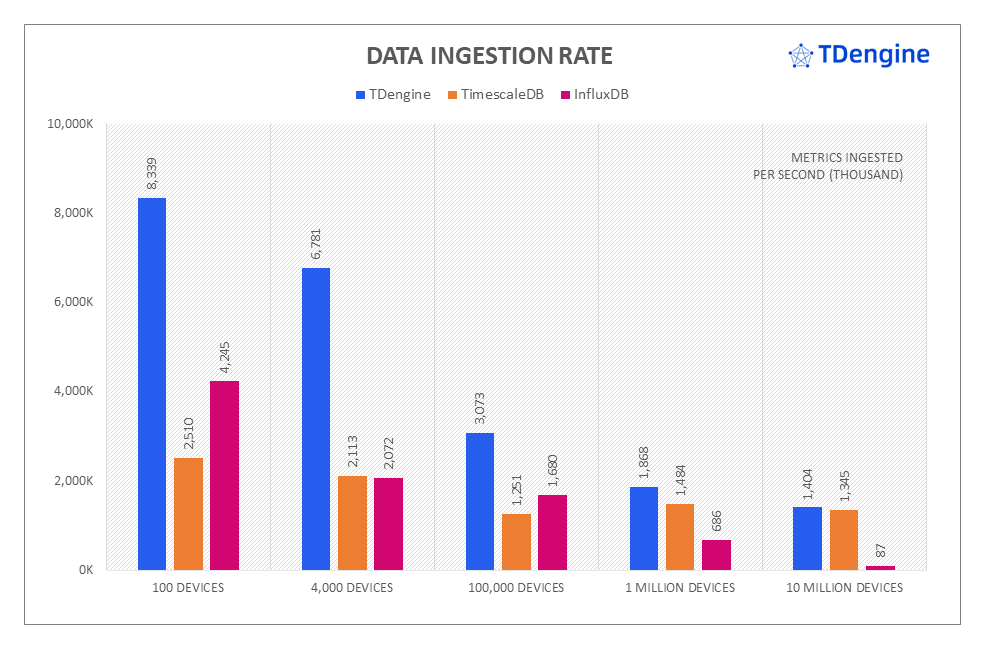

According to a publicly available TSBS evaluation, TDengine's ingestion performance exceeded that of TimescaleDB and InfluxDB in all five scenarios of the IoT use case. In the largest TSBS scenario, with 10 million devices, TDengine also required only one-third of the disk space used by InfluxDB and one-twelfth of that used by TimescaleDB to store the data.

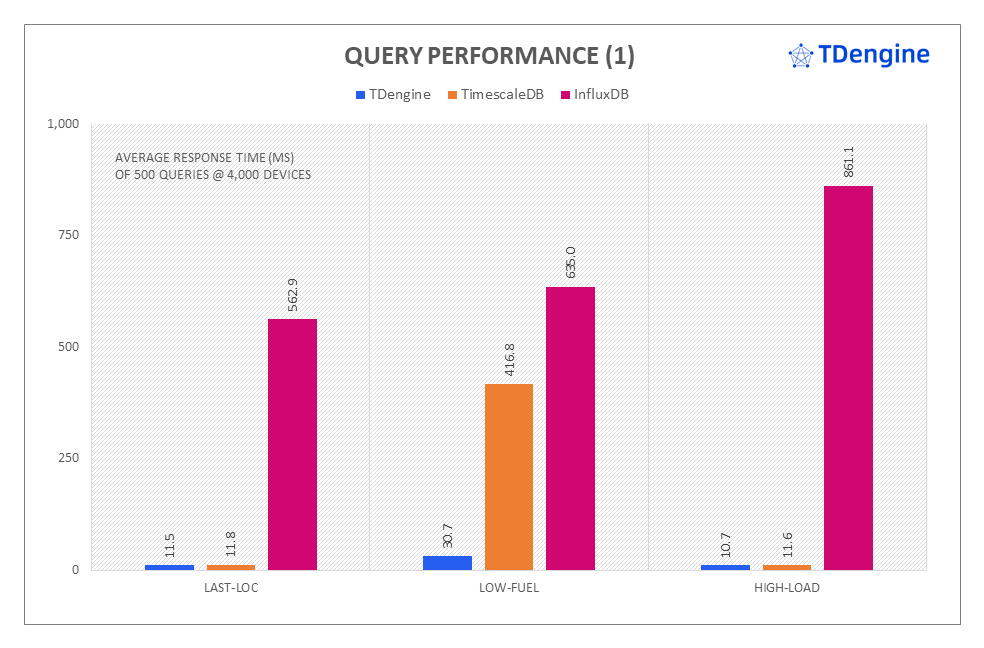

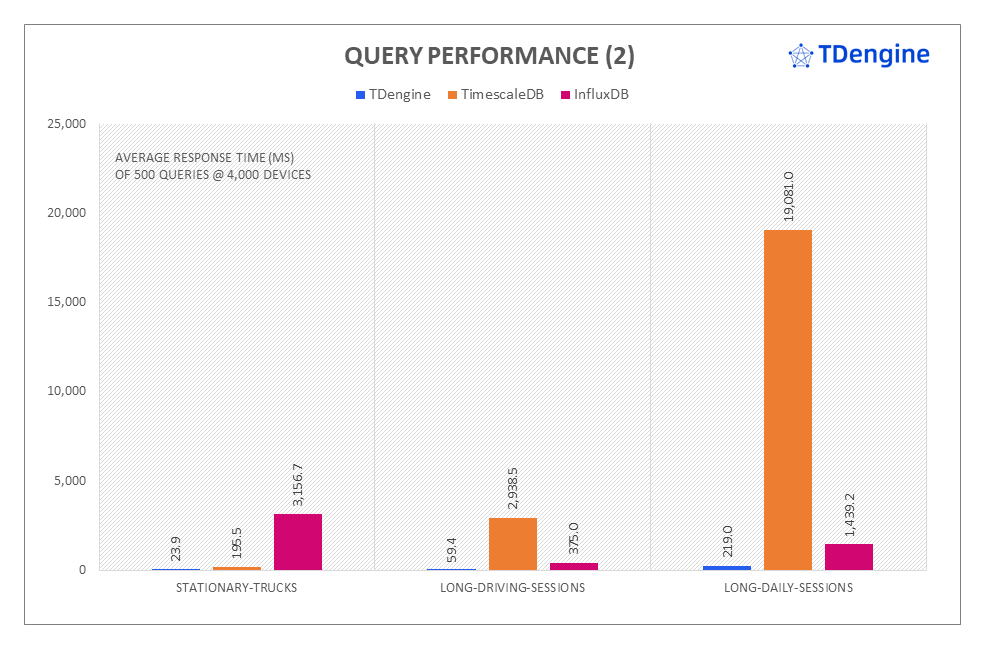

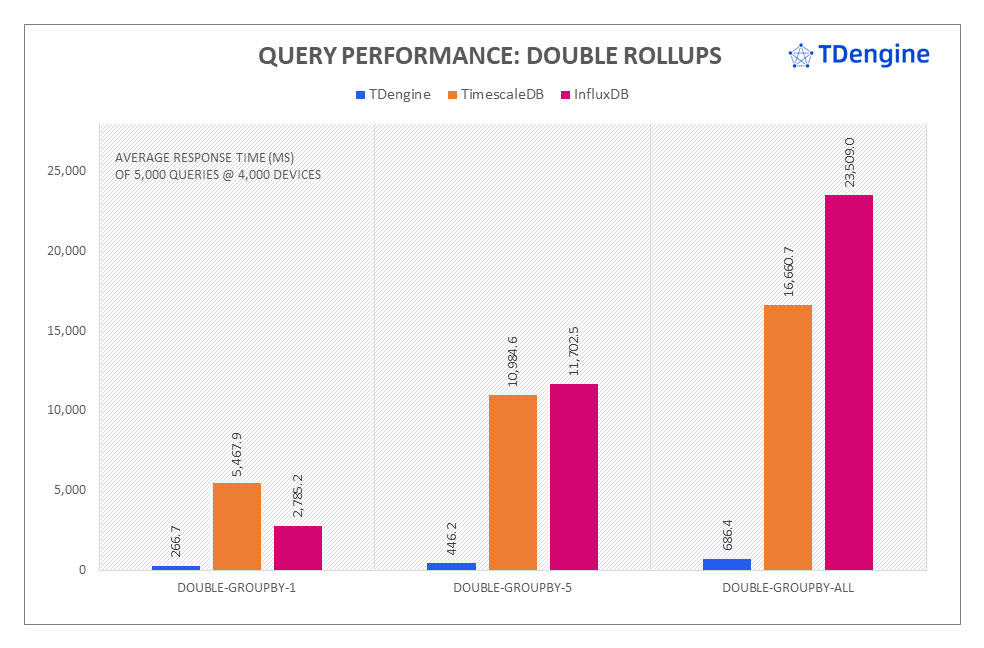

TDengine also outperformed InfluxDB and TimescaleDB in query response time, especially in more complex scenarios. In extended testing on the 4,000-device TSBS scenario, TDengine delivered 87.1 times the performance of TimescaleDB in the long-daily-sessions scenario and 132 times the performance of InfluxDB in the stationary-trucks scenario.

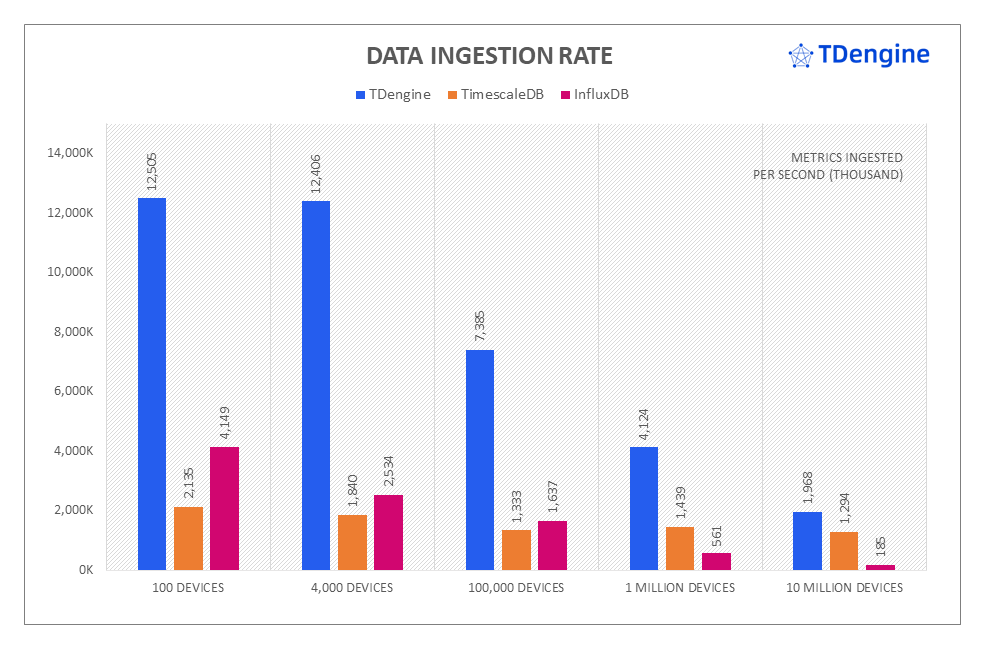

Beyond the IoT use case, TDengine also delivered superior performance in the TSBS DevOps CPU-only use case.

In this use case, TDengine ingested the test data 1.5 to 6.7 times faster than TimescaleDB and 3.0 to 10.6 times faster than InfluxDB, with significantly lower CPU overhead. For double rollups, TDengine responded up to 26 times faster than InfluxDB and up to 24 times faster than TimescaleDB.

How It Works

TDengine achieves this performance through its storage architecture and distributed design, notably by creating one table per device and by introducing the supertable to enable aggregation across tables. Together, these elements ensure that TDengine provides optimal performance even with high-cardinality data.

One Table per Device

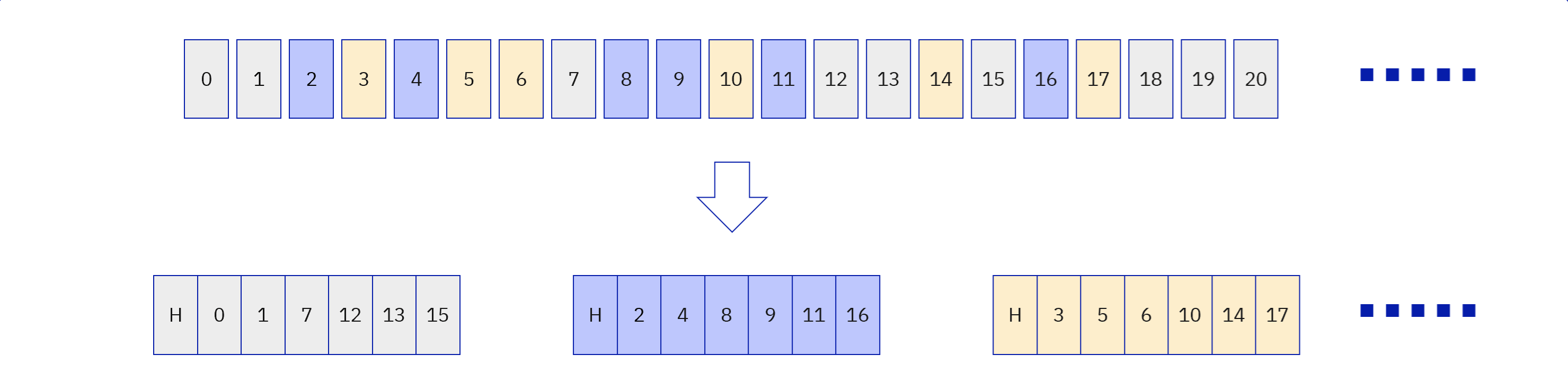

TDengine uses a unique data model in which one table is created for each device. The data in each table is stored in blocks, each of which holds a contiguous time range of data in a column-oriented format. This improves performance in several ways:

- No locking is needed because each table is written to by only one device.

- Writing new data can be implemented as an append operation because each device typically writes data in the order in which it is generated.

- Querying data over a specific time period does not involve random read operations because the data in each block is stored contiguously in chronological order.

- Basic statistics for the records within a block are precomputed and stored in the block, so simple queries can be answered without scanning the raw data.

- High compression ratios can be achieved because a single device's metric values at consecutive timestamps are typically similar.
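
The per-device layout described in the list above can be sketched in Python. This is a minimal, hypothetical model under simplifying assumptions (integer timestamps, a single float metric, and a fixed `block_size`); the names `Block`, `DeviceTable`, `query_range`, and `delta_encode` are illustrative and are not TDengine APIs. It shows in-order appends into column-oriented blocks, a time-range query that skips non-overlapping blocks and binary-searches within sorted ones, and a generic delta encoding that hints at why similar consecutive values compress well.

```python
from bisect import bisect_left, bisect_right
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Block:
    """A contiguous time range of one device's data, stored column by column."""
    timestamps: List[int] = field(default_factory=list)  # timestamp column
    values: List[float] = field(default_factory=list)    # metric column


class DeviceTable:
    """Hypothetical one-table-per-device model (an illustration, not TDengine's storage engine)."""

    def __init__(self, block_size: int = 4) -> None:
        self.block_size = block_size  # assumed rows per block, chosen only for the example
        self.blocks: List[Block] = [Block()]
        self.last_ts: Optional[int] = None

    def append(self, ts: int, value: float) -> None:
        # Only this device writes to this table, so no lock is taken; in-order data is a plain append.
        if self.last_ts is not None and ts <= self.last_ts:
            raise ValueError("this sketch only accepts strictly increasing timestamps")
        if len(self.blocks[-1].timestamps) == self.block_size:
            self.blocks.append(Block())  # current block is full; start a new one
        self.blocks[-1].timestamps.append(ts)
        self.blocks[-1].values.append(value)
        self.last_ts = ts

    def query_range(self, start: int, end: int) -> List[Tuple[int, float]]:
        """Return (ts, value) rows with start <= ts <= end, touching only overlapping blocks."""
        rows: List[Tuple[int, float]] = []
        for block in self.blocks:
            ts = block.timestamps
            if not ts or ts[-1] < start or ts[0] > end:
                continue  # block is empty or entirely outside the range
            lo, hi = bisect_left(ts, start), bisect_right(ts, end)  # sorted, so binary search
            rows.extend(zip(ts[lo:hi], block.values[lo:hi]))
        return rows


def delta_encode(column: List[int]) -> List[int]:
    """Generic delta encoding: keep the first value, then store differences.

    Similar consecutive values become small, repetitive numbers that compress well.
    This is a textbook technique, not a description of TDengine's codecs.
    """
    return column[:1] + [b - a for a, b in zip(column, column[1:])]
```

For instance, appending the readings `(1, 20.0)`, `(2, 20.5)`, `(3, 21.0)`, `(4, 21.2)`, and `(5, 21.1)` to `DeviceTable(block_size=3)` yields two blocks, and `query_range(2, 4)` returns `[(2, 20.5), (3, 21.0), (4, 21.2)]`. Likewise, `delta_encode([1000, 2000, 3000])` returns `[1000, 1000, 1000]`: regularly spaced timestamps become a run of identical numbers.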

For example, consider a scenario in which three devices, represented in the figure above by gray, blue, and orange, write data into TDengine. A table is created for each device, and the records in each table are stored in blocks. Each block contains the records of only one table, stored contiguously in timestamp order. In addition, each block stores the maximum, minimum, sum, and count of its records as precomputed values (labeled H in the figure above).
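
The precomputed block values can be illustrated with a small Python sketch. It assumes non-empty blocks with integer timestamps and a single float metric; `BlockWithHeader` and `aggregate` are hypothetical names, not part of TDengine. Blocks that lie entirely inside the query range are answered from their header alone, and only blocks that straddle a range boundary have their raw values scanned.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Union


@dataclass
class BlockWithHeader:
    """Hypothetical non-empty block: one device's rows in timestamp order plus a header."""
    timestamps: List[int]
    values: List[float]
    # Header ("H" in the figure): computed once when the block is written.
    h_max: float = field(init=False)
    h_min: float = field(init=False)
    h_sum: float = field(init=False)
    h_count: int = field(init=False)

    def __post_init__(self) -> None:
        self.h_max = max(self.values)
        self.h_min = min(self.values)
        self.h_sum = sum(self.values)
        self.h_count = len(self.values)


def aggregate(blocks: List[BlockWithHeader], start: int, end: int) -> Dict[str, Union[int, float]]:
    """Count, sum, min, and max of values with start <= ts <= end.

    If nothing matches, min and max stay at +inf and -inf.
    """
    count, total = 0, 0.0
    lo, hi = float("inf"), float("-inf")
    raw_scans = 0  # number of blocks whose raw values had to be read
    for b in blocks:
        first, last = b.timestamps[0], b.timestamps[-1]
        if last < start or first > end:
            continue  # no overlap with the query range
        if start <= first and last <= end:
            # Block fully inside the range: answer from the header, no raw scan.
            count += b.h_count
            total += b.h_sum
            lo, hi = min(lo, b.h_min), max(hi, b.h_max)
        else:
            # Block straddles a boundary: scan only this block's raw rows.
            raw_scans += 1
            for ts, v in zip(b.timestamps, b.values):
                if start <= ts <= end:
                    count += 1
                    total += v
                    lo, hi = min(lo, v), max(hi, v)
    return {"count": count, "sum": total, "min": lo, "max": hi, "raw_scans": raw_scans}
```

With one block holding timestamps 1 to 3 (values 10.0, 12.0, 11.0) and another holding timestamps 4 to 6 (values 13.0, 9.0, 14.0), `aggregate(blocks, 1, 5)` uses the first block's header, scans only the second block, and returns a count of 5, a sum of 55.0, a minimum of 9.0, and a maximum of 13.0.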

Supertables

To manage the large number of tables created in this model, TDengine uses the supertable: a template for a type of device that defines a shared schema of metrics and tags for all devices of that type. Each device in a supertable has one tag record, and the tag records of all its devices are stored together and indexed. Tags are stored as key-value pairs, separately from the time-series data. As a result, you can easily and efficiently aggregate data from multiple devices by querying their supertable. This design offers several advantages:

- In multi-dimensional analysis, tag filtering is performed before aggregation, greatly reducing the amount of data to be aggregated.

- Compared with NoSQL databases, fewer resources are used because tags are stored once per device rather than repeated with every record.

- Although there is one tag record per device, the tag data is small enough to be kept in memory, further improving performance.
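
The two-step pattern of filtering on tags and then aggregating can be sketched in Python. This is a hypothetical in-memory model, not TDengine's implementation: the `SuperTable` class, its methods, and the `location`/`group_id` tags are illustrative assumptions, and tag values are plain strings. Conceptually it corresponds to an aggregate query on a supertable with a condition on its tags.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class SuperTable:
    """Hypothetical supertable: a shared schema plus one tag record per child table (device)."""
    tag_names: Tuple[str, ...]
    # Table name -> tag record; kept in memory and stored once per device, not per row.
    tags: Dict[str, Dict[str, str]] = field(default_factory=dict)
    # Table name -> (timestamp, value) rows for that device.
    rows: Dict[str, List[Tuple[int, float]]] = field(default_factory=dict)

    def create_table(self, name: str, **tag_values: str) -> None:
        if set(tag_values) != set(self.tag_names):
            raise ValueError(f"expected exactly the tags {self.tag_names}")
        self.tags[name] = dict(tag_values)
        self.rows[name] = []

    def insert(self, name: str, ts: int, value: float) -> None:
        self.rows[name].append((ts, value))

    def avg_by(self, group_tag: str, **tag_filter: str) -> Dict[str, float]:
        # Step 1: filter the in-memory tag records to select matching tables.
        selected = [
            name for name, record in self.tags.items()
            if all(record[k] == v for k, v in tag_filter.items())
        ]
        # Step 2: aggregate rows from the selected tables only, grouped by a tag value.
        sums: Dict[str, float] = defaultdict(float)
        counts: Dict[str, int] = defaultdict(int)
        for name in selected:
            key = self.tags[name][group_tag]
            for _ts, value in self.rows[name]:
                sums[key] += value
                counts[key] += 1
        return {key: sums[key] / counts[key] for key in sums}
```

For example, with child tables `d1` (`location="SF"`, `group_id="1"`, values 10.0 and 12.0), `d2` (`"SF"`, `"2"`, value 20.0), and `d3` (`"LA"`, `"1"`, value 99.0), `avg_by("group_id", location="SF")` returns `{"1": 11.0, "2": 20.0}`; `d3` is excluded by the tag filter before any of its rows are read.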

Metadata Decentralization

Metadata storage is distributed among the nodes of a TDengine cluster instead of being centralized on a single node. When an application aggregates data from multiple tables, TDengine sends the filtering conditions to all nodes simultaneously. Each node then works in parallel to find the matching tables and aggregate their data, and sends its results back to the query node or driver, where the results are merged.
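
The scatter-gather flow described above can be sketched in Python, with threads standing in for cluster nodes. The `Node`, `Partial`, and `distributed_avg` names are hypothetical, and the sketch assumes each node holds both the tag metadata and the rows for its own tables. Each node filters and aggregates locally, and the query node merges the partial results.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Partial:
    """Partial aggregate from one node; count and sum can be merged by addition."""
    count: int = 0
    total: float = 0.0

    def merge(self, other: "Partial") -> "Partial":
        return Partial(self.count + other.count, self.total + other.total)


class Node:
    """Hypothetical data node holding the tag metadata and rows of its own tables only."""

    def __init__(self, tables: Dict[str, Tuple[Dict[str, str], List[float]]]) -> None:
        self.tables = tables  # table name -> (tag record, metric values)

    def filter_and_aggregate(self, tag_filter: Dict[str, str]) -> Partial:
        # Local work on each node: find matching tables via tags, then aggregate their values.
        result = Partial()
        for tags, values in self.tables.values():
            if all(tags.get(k) == v for k, v in tag_filter.items()):
                result = result.merge(Partial(len(values), sum(values)))
        return result


def distributed_avg(nodes: List[Node], tag_filter: Dict[str, str]) -> Optional[float]:
    # Scatter: send the same filter conditions to every node concurrently.
    with ThreadPoolExecutor(max_workers=max(1, len(nodes))) as pool:
        partials = list(pool.map(lambda node: node.filter_and_aggregate(tag_filter), nodes))
    # Gather: the query node merges the partial results into the final answer.
    merged = Partial()
    for p in partials:
        merged = merged.merge(p)
    return merged.total / merged.count if merged.count else None
```

Each node returns a count and a sum rather than an average, because partial averages cannot be combined correctly without knowing how many rows each one covers. With node A holding `d1` (`SF`, values 10.0 and 12.0) and `d2` (`LA`, 50.0), and node B holding `d3` (`SF`, 20.0), the merged average for `location="SF"` is 42.0 / 3 = 14.0.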

With this distributed design, TDengine guarantees low latency for tag filtering operations, provided that system resources are sufficient, and metadata is no longer a bottleneck. As the number of tables in a deployment grows, TDengine can simply allocate more resources and add nodes to keep the system scalable.