High cardinality has long been a major challenge for time-series databases (TSDBs). Many databases perform well under low-cardinality conditions but slow down significantly as cardinality rises, forcing administrators either to reduce cardinality manually through complex workarounds or to scale out their systems unnecessarily. This issue is particularly relevant in fields like IoT and Industrial IoT, where large numbers of unique time series must be handled efficiently. In this article, we’ll explore how two leading time-series databases, TDengine and InfluxDB, address the challenges of high cardinality, and why TDengine stands out as the first TSDB to fully resolve this issue.

What Is High Cardinality?

To begin, cardinality can be defined as the number of unique values in a data set. The cardinality of a data set can be low or high. For example, Boolean data can only be true or false, and therefore has a very low cardinality of 2. On the other hand, a field such as a unique device ID can have extremely high cardinality: in a deployment with 100 million smart devices, the cardinality of the device ID is 100 million. This uniqueness allows for detailed and specific analyses, enabling organizations to uncover patterns and anomalies that may not be apparent in lower-cardinality data sets.

For time-series data, things become more complicated. Time-series data is always associated with metadata in the form of tags or labels. The cardinality of a system can therefore be as large as the product of the cardinalities of its tags. For example, imagine a smart meter that is associated with a device ID, city ID, vendor ID, and model ID. With millions of devices across hundreds of cities, made by dozens of vendors that each offer several models, it’s easy to see how the cardinality of the data set can exceed 100 billion.
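The arithmetic behind that estimate can be sketched in a few lines of Python. The per-tag counts below are hypothetical values chosen to match "millions", "hundreds", "dozens", and "several"; they are not measurements from a real deployment.

```python
from math import prod

# Hypothetical per-tag cardinalities for the smart-meter example.
tag_cardinality = {
    "device_id": 5_000_000,  # "millions of devices"
    "city_id": 300,          # "hundreds of cities"
    "vendor_id": 24,         # "dozens of vendors"
    "model_id": 3,           # "several models"
}


def max_cardinality(cardinalities):
    """Upper bound on distinct tag combinations: the product of per-tag counts."""
    return prod(cardinalities.values())


def observed_cardinality(rows):
    """Number of distinct tag combinations that actually occur in the data."""
    return len({tuple(sorted(row.items())) for row in rows})


upper_bound = max_cardinality(tag_cardinality)
print(f"{upper_bound:,}")  # 108,000,000,000

# Three points, but only two distinct tag combinations.
sample_rows = [
    {"device_id": "d1", "city_id": "c1"},
    {"device_id": "d1", "city_id": "c1"},
    {"device_id": "d2", "city_id": "c1"},
]
observed = observed_cardinality(sample_rows)
```

The product is an upper bound. The number of combinations that actually occur depends on how the tags relate to one another, which is why the sketch also counts observed combinations separately.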

As the number of unique values increases, database performance can degrade, leading to slower queries and more complex data management. For instance, when metrics carry numerous tags or labels, as is common in IoT applications, cardinality can skyrocket, complicating data retrieval and analysis. Consequently, while high-cardinality data is invaluable for observability and troubleshooting, it requires a database that can handle the associated complexity and performance demands efficiently.

TDengine’s Approach to High Cardinality

TDengine is purpose-built to handle time-series data in high-cardinality scenarios, particularly for IoT and Industrial IoT use cases. Here are some key features that make it effective for high-cardinality data:

Data Model: One Table per Data Collection Point

TDengine has a very specific data model in which a separate table is created for each data collection point (DCP). In the TDengine model, a DCP is a piece of hardware or software that collects one or more metrics, either at preset intervals or when triggered by events. In some cases, an entire device may be a single DCP, but more complex devices may include multiple DCPs that each collect data independently at different sampling rates.

TDengine then uses a consistent hashing method to determine which virtual node (vnode) is responsible for storing the data for a specific table. This approach makes locating a table independent of the total number of time series, because consistent hashing distributes the load across vnodes. Within each vnode, an index is constructed to enable quick access to the corresponding table. As additional DCPs are added to the system, TDengine dynamically creates more vnodes, minimizing latency for table retrieval and supporting scalability.
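The general technique can be illustrated with a toy hash ring. This is a minimal sketch of consistent hashing in general, not TDengine's actual implementation: the `HashRing` class, the `replicas` parameter, the vnode names, and the use of SHA-256 are all assumptions made for illustration.

```python
import bisect
import hashlib


class HashRing:
    """Toy consistent-hash ring mapping table names to vnode names."""

    def __init__(self, vnodes, replicas=64):
        self._replicas = replicas  # ring points per vnode, to smooth the spread
        self._ring = []            # sorted list of (hash, vnode) points
        for vnode in vnodes:
            self.add_vnode(vnode)

    @staticmethod
    def _hash(key):
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    def add_vnode(self, vnode):
        for i in range(self._replicas):
            bisect.insort(self._ring, (self._hash(f"{vnode}#{i}"), vnode))

    def vnode_for(self, table_name):
        # First ring point at or after the table's hash, wrapping around.
        idx = bisect.bisect_left(self._ring, (self._hash(table_name), ""))
        if idx == len(self._ring):
            idx = 0
        return self._ring[idx][1]


ring = HashRing(["vnode-1", "vnode-2", "vnode-3", "vnode-4"])
tables = [f"meter_{i}" for i in range(10_000)]
before = {t: ring.vnode_for(t) for t in tables}

ring.add_vnode("vnode-5")
after = {t: ring.vnode_for(t) for t in tables}
moved = [t for t in tables if before[t] != after[t]]
print(f"{len(moved)} of {len(tables)} tables moved to the new vnode")
```

In this sketch a lookup is a hash plus a binary search over the ring points, so its cost depends on the number of vnodes rather than the number of tables. Adding a vnode relocates only the tables that now hash to it; every other table stays where it was.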

This design keeps the latency of inserting data points into, or querying data from, any single table stable even as the number of tables grows by orders of magnitude, for example from one million to 100 million. As a result, latency in TDengine is not affected by high cardinality.

Separating Metadata from Time Series Data

With the one-table-per-DCP design, TDengine can guarantee latency for any single table. But real-world analytics use cases require aggregating data from multiple tables or devices, which is a major challenge for this design.

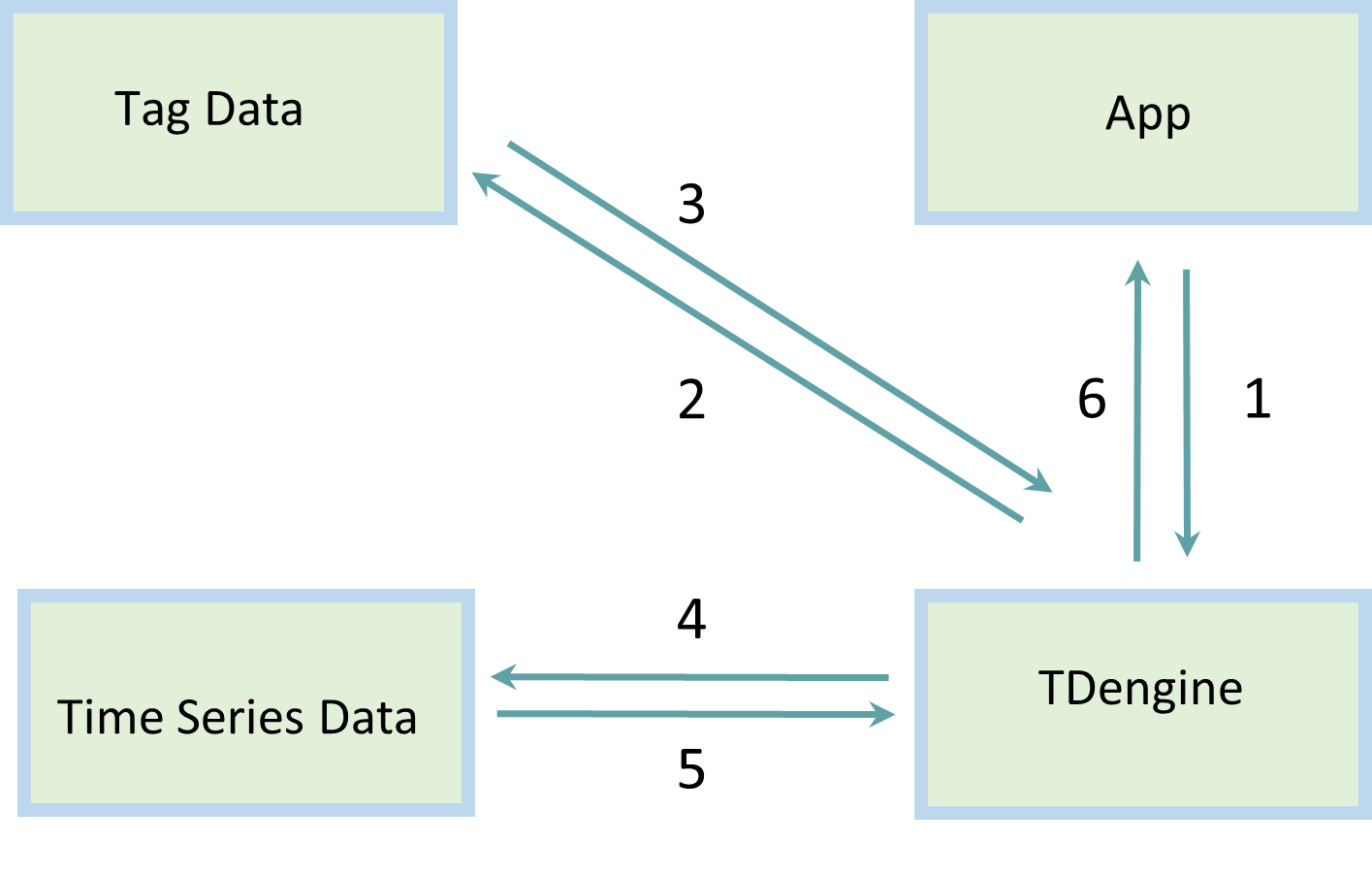

To solve this issue, TDengine introduces the supertable. Unlike tables in a standard database, tables that belong to a supertable can each be associated with a set of labels. TDengine stores those labels separately from the collected time-series data: the labels are indexed with a B-tree and kept in the metadata store, while time-series data is kept in a dedicated time-series data store. Each table in the metadata store has only one row of data, and this row can be updated as needed. In the time-series data store, however, each table has many rows of data, and the data set grows with time until its retention period has elapsed.

To aggregate data from multiple tables, the application specifies a filter on the labels, for example, calculating the power consumption where “user type” = “single family homes”. TDengine first searches the metadata store to obtain the list of tables that satisfy the filtering conditions, then fetches the corresponding data blocks from the time-series data store and completes the aggregation.

By first scanning the metadata store, whose data set is much smaller than that of the time-series data store, TDengine can provide very efficient aggregation. The figure in this section illustrates the process.
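The two-step query path can be sketched as follows. This is an illustrative model only: `ToyStore` and its methods are hypothetical names, plain dictionaries stand in for both stores, and a linear scan over the labels stands in for the B-tree index described above.

```python
from dataclasses import dataclass, field


@dataclass
class ToyStore:
    # Metadata store: table name -> exactly one row of labels.
    tags: dict = field(default_factory=dict)
    # Time-series store: table name -> growing list of (timestamp, value) rows.
    rows: dict = field(default_factory=dict)

    def create_table(self, name, **labels):
        self.tags[name] = labels
        self.rows[name] = []

    def insert(self, name, ts, value):
        self.rows[name].append((ts, value))

    def tables_matching(self, **conditions):
        """Step 1: consult only the small metadata store."""
        return [
            name
            for name, labels in self.tags.items()
            if all(labels.get(k) == v for k, v in conditions.items())
        ]

    def sum_where(self, **conditions):
        """Step 2: read time-series rows only for the tables found in step 1."""
        return sum(
            value
            for name in self.tables_matching(**conditions)
            for _, value in self.rows[name]
        )


store = ToyStore()
store.create_table("meter_1", user_type="single family home", city="A")
store.create_table("meter_2", user_type="apartment", city="A")
store.create_table("meter_3", user_type="single family home", city="B")

store.insert("meter_1", 1, 1.5)
store.insert("meter_1", 2, 2.0)
store.insert("meter_2", 1, 9.0)
store.insert("meter_3", 1, 0.5)

matching = store.tables_matching(user_type="single family home")
total = store.sum_where(user_type="single family home")
print(matching, total)  # ['meter_1', 'meter_3'] 4.0
```

The label filter touches one row per table regardless of how many data points each table holds, and the rows of `meter_2` are never read.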

Distributed Design for Metadata Store

In TDengine 3.0, metadata storage is distributed among vnodes instead of being centralized on the mnode. When an application wants to aggregate the data from multiple tables, TDengine sends the filtering conditions to all vnodes simultaneously. Each vnode then works in parallel to find the requested tables, aggregate the data, and finally send the results back to the query node or driver where the merge operation is performed.
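This scatter-gather pattern can be sketched with a thread pool standing in for the vnodes. The function names and the in-memory data layout are hypothetical, and real vnodes are separate storage units rather than Python lists, so treat this as an illustration of the flow rather than of TDengine internals.

```python
from concurrent.futures import ThreadPoolExecutor


def vnode_partial(vnode, conditions):
    """Runs per vnode: filter local tables by label, then aggregate locally."""
    total, count = 0.0, 0
    for table in vnode:
        if all(table["tags"].get(k) == v for k, v in conditions.items()):
            total += sum(table["values"])
            count += len(table["values"])
    return total, count


def distributed_avg(vnodes, conditions):
    """Runs on the query node: fan out to all vnodes, then merge the partials."""
    with ThreadPoolExecutor(max_workers=len(vnodes)) as pool:
        partials = list(pool.map(lambda v: vnode_partial(v, conditions), vnodes))
    total = sum(t for t, _ in partials)
    count = sum(c for _, c in partials)
    return total / count if count else None


# Two hypothetical vnodes, each holding its own tables and their labels.
vnodes = [
    [
        {"tags": {"city": "A"}, "values": [1.0, 3.0]},
        {"tags": {"city": "B"}, "values": [10.0]},
    ],
    [
        {"tags": {"city": "A"}, "values": [5.0]},
    ],
]

avg_city_a = distributed_avg(vnodes, {"city": "A"})
print(avg_city_a)  # 3.0
```

Each vnode returns a (sum, count) pair rather than a local average, because averaging the per-vnode averages would weight vnodes with few rows too heavily. The merge step then needs only one small tuple per vnode.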

The distributed design of TDengine 3.0 now guarantees latency for label filtering operations provided that system resources are sufficient, and the mnode is no longer a bottleneck. As the number of tables in a deployment increases, TDengine can simply allocate more resources and create more vnodes to ensure the scalability of the system.

InfluxDB and High Cardinality

InfluxDB, one of the more widely adopted time-series databases, also handles high-cardinality workloads but faces some challenges compared to TDengine.

InfluxDB stores data points by series, where a series is identified by the combination of measurement, tag set, and field key. Each unique combination creates a new series, so high cardinality often leads to a massive increase in the number of series, which can strain InfluxDB’s indexing engine. InfluxDB’s time-series index (TSI) is designed to manage high cardinality efficiently by using a log-structured merge tree. This allows InfluxDB to handle large numbers of series keys while maintaining reasonable performance. The TSI includes an in-memory log that is periodically flushed to disk, which helps keep memory usage under control. But as cardinality increases, query performance can still degrade.
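To see how quickly series accumulate, the following sketch counts unique series in a handful of points. It models a series key as measurement plus sorted tag set plus field key, following the description above; the point layout and the `series_keys` helper are hypothetical and do not use any InfluxDB client API.

```python
def series_keys(point):
    """Return the set of series keys that a single point contributes to."""
    tag_set = tuple(sorted(point["tags"].items()))
    return {(point["measurement"], tag_set, field_key) for field_key in point["fields"]}


points = [
    {"measurement": "power", "tags": {"device": "d1", "city": "A"}, "fields": {"watts": 10.0}},
    # Same measurement, tags, and field key: a new point in an existing series.
    {"measurement": "power", "tags": {"device": "d1", "city": "A"}, "fields": {"watts": 12.0}},
    # A new tag value creates a new series.
    {"measurement": "power", "tags": {"device": "d2", "city": "A"}, "fields": {"watts": 7.0}},
    # A new field key on an existing tag set creates another series.
    {"measurement": "power", "tags": {"device": "d2", "city": "A"}, "fields": {"watts": 7.5, "volts": 229.0}},
]

unique_series = set()
for point in points:
    unique_series |= series_keys(point)

series_cardinality = len(unique_series)
print(series_cardinality)  # 3
```

New values for an existing series do not change the count, while every new tag value or field key does. An index keyed on series therefore grows with cardinality rather than with data volume.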

While the database is excellent for smaller workloads, it requires careful management, often necessitating more sophisticated cluster architectures to maintain high performance as cardinality grows. High cardinality increases the number of metadata entries that must be managed, leading to longer query times as the system grows.

Although some issues related to high cardinality may have been resolved in the latest version of InfluxDB, extensive independent testing has not yet been done to confirm this. Furthermore, at the time of writing, InfluxData had not released an open-source version of InfluxDB 3.0, so any improvements were available only to enterprise customers and not to the wider community.

Performance Comparison: TDengine vs. InfluxDB

In high-cardinality environments, TDengine generally outperforms InfluxDB because its architecture is optimized for large numbers of unique time series. TDengine’s supertable structure, efficient metadata handling, and compression techniques allow it to scale more smoothly under high-cardinality loads without significant performance degradation.

By contrast, InfluxDB may struggle as cardinality increases, especially when dealing with high-frequency data from a large number of unique sources. While InfluxDB has improved its performance with innovations like the TSI, it can still encounter bottlenecks, particularly in large-scale deployments with millions of unique time-series identifiers.

Use Case Suitability

- TDengine is well-suited for environments with very high cardinality, such as IoT and Industrial IoT where thousands or millions of unique devices or sensors report data. Its ability to scale efficiently while maintaining performance makes it a strong choice for large, distributed applications.

- InfluxDB works well for applications with moderate cardinality, and its ease of use and extensive ecosystem make it a popular choice for monitoring systems, DevOps metrics, and applications with smaller datasets. However, for applications with rapidly increasing cardinality, InfluxDB may require additional tuning and architecture adjustments to maintain performance.

Conclusion

In summary, TDengine offers a more robust and efficient solution for handling high-cardinality time-series data, particularly in large-scale IoT and Industrial IoT deployments. Its supertable architecture, compression techniques, and parallel processing capabilities allow it to scale efficiently without compromising performance.

While InfluxDB remains a strong choice for smaller to moderate workloads, it may struggle in extremely high-cardinality environments, requiring more complex configurations to maintain performance.

Choosing between the two depends on the specific requirements of your use case, but for large-scale, high-cardinality applications, TDengine has a clear advantage.