For logistics, real-time vehicle tracking data processing is crucial for overall system efficiency. In typical design, Redis is used for real time data processing, and HBase is used for historical data analysis. In this case, Kuayue-Express Group migrated from HBase + Redis to TDengine. After migration, the number of servers is cut from 21 to 3, daily storage is cut from 352G to 4G, and the query latency is significantly reduced.

Project Background

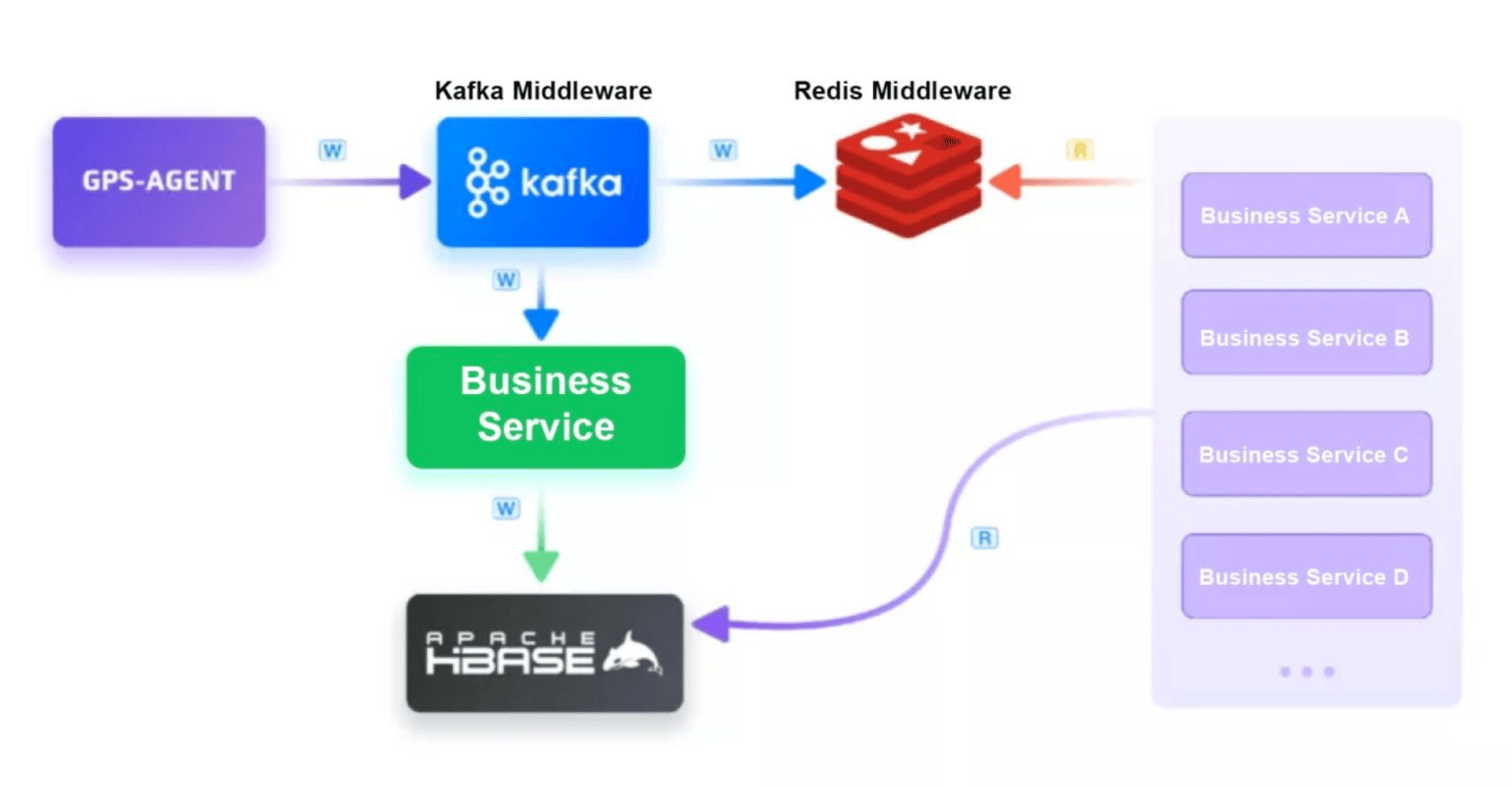

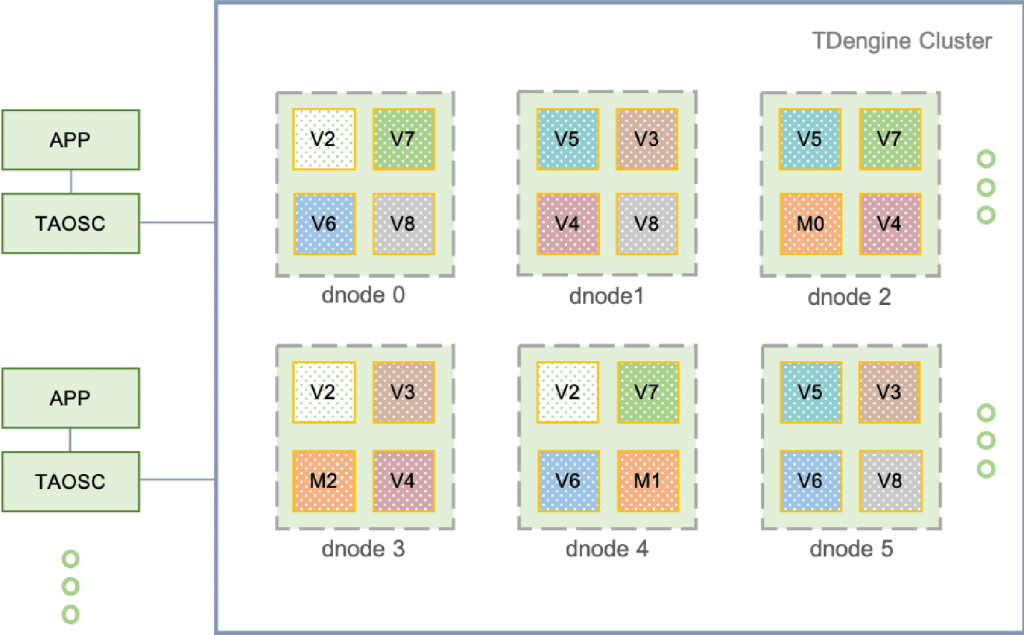

A few years ago, the vehicle trajectory positioning storage project was established. Tens of thousands of vehicles purchased by the Express Group reported information to the GPS-AGENT gateway through an on-board positioning device. The parsed service message was sent to the Apache Kafka message middleware, which then sent the message to the application. Historical location positioning information was written into an Apache HBase instance, and the latest vehicle location information was written into Redis. These databases were exposed to business services for real-time monitoring and analysis of vehicles. The original business structure is shown in the following figure:

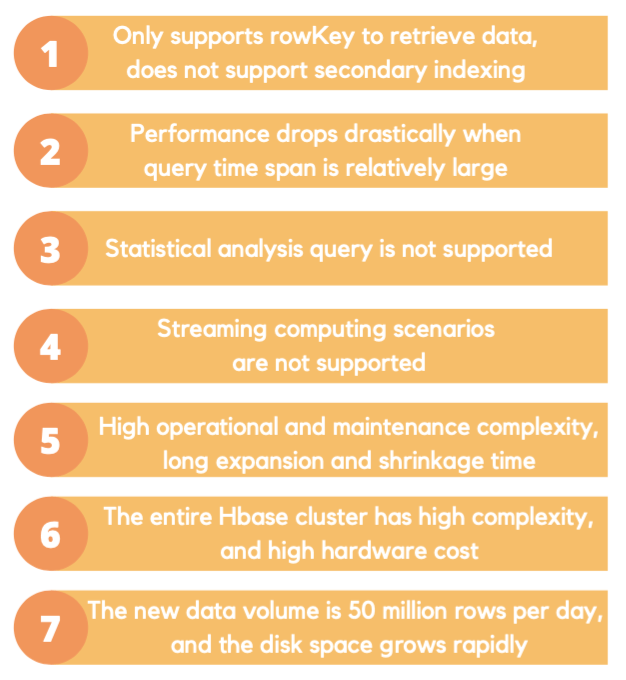

The original system had several operational pain points. For example, because the data is stored in HBase, the performance of the system drops significantly when data is queried over a large time-span. The pain points can be summarized as follows:

We started thinking about a way to resolve these issues.

Project Evolution

Prior to starting selection of new technology, we organized our business requirements as follows.

Let’s take a look at each one of these:

- Data is not updated or deleted frequently: Vehicle tracking information is simply reported at some frequency and is not updated or deleted. The information only needs to be saved for a certain amount of time.

- No transaction processing like traditional databases: Since the data does not need to be updated, there is no need to use transactions to ensure consistency like traditional databases. Eventual consistency is adequate.

- The traffic is generally stable, and the number of vehicles and the frequency of reporting within a period of time can be determined.

- Query analysis of data is based on time period and geospatial area determined by business needs.

- In addition to storage and query operations, various statistics and real-time calculations need to be performed based on the reporting needs of the business.

- The amount of data is huge with more than 50 million records collected every day and this will continue to grow as the business grows. So the solution has to be highly scalable.

Technical Selection

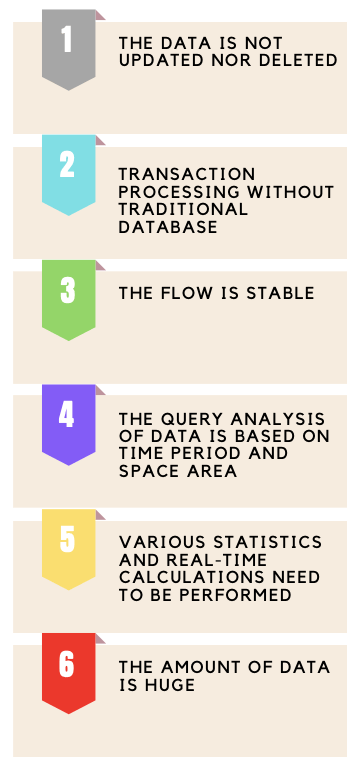

It can be seen from the above analysis that the vehicle trajectory is typical time series data, so it is more efficient to process it with a special time series database (TSDB). In the selection process, we compared several representative time series database products.

The comprehensive comparison results are as follows:

- The InfluxDB cluster version is not open source and incurs a cost. The hardware cost is also relatively high.

- CTSDB Tencent Cloud time series database has high memory usage and relatively high cost.

- The underlying base of OpenTSDB is still HBase, and still leads to a complex architecture.

- The TDengine cluster function is open source, with typical distributed database characteristics, and the compression ratio is also very high.

We concluded that several features of TDengine were optimal for our business requirements.

We conducted additional research on TDengine to summarize the following aspects.

We performed tests to ensure that TDengine could resolve the issues we experienced in our previous architecture. We were pleasantly surprised, when in addition to meeting our business needs, TDengine displayed very high performance and very high compression ratios! In addition to basic function, feature and performance tests, we conducted end-user testing in collaboration with our partners in the business. We explored the following:

- Can the cluster scale automatically as data is being written?

- Does the CACHELAST parameter work in practice and is the data valid?

- Analysis using TDengine’s statistical, aggregation and interpolation functions to meet business needs.

- Scenarios to override update parameters.

- Common query statements to meet business needs and data comparison to ensure that queries return valid data.

In-depth Understanding of TDengine

Prior to implementing and deploying TDengine, we studied the architecture, design and other aspects of the system. Below we summarize the core concepts of TDengine.

TDengine Architecture

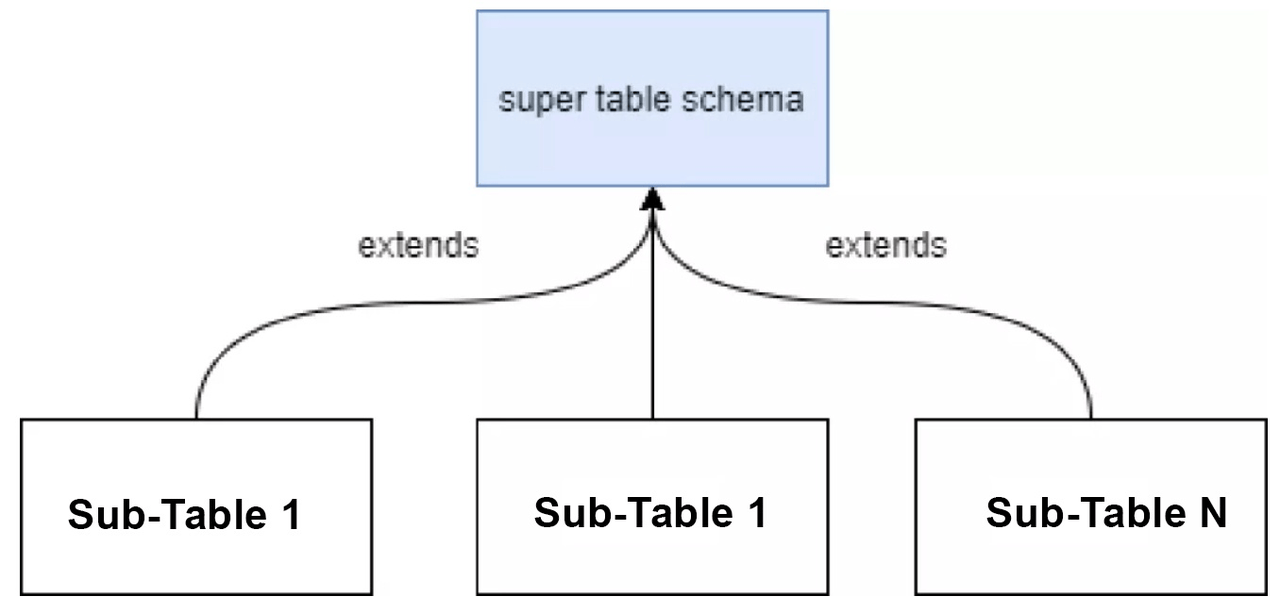

The figure below illustrates the native cluster design of TDengine. The dnode is the physical node that actually stores the data. The small boxes such as V2 and V7 in the dnode box are called vnodes, which are virtual nodes, and the M nodes, M0, M1 etc. are management nodes which monitors and maintains the running status of data nodes and also stores metadata about the tables and cluster. Those who are familiar with distributed middleware can intuitively tell that TDengine has a native distributed design.

Supertable

TDengine has the concept of a supertable. For example, in the Cross Express group case, all vehicles have their own subtable which inherit from a parent table called a supertable. The supertable defines the structure specification of the subtable but does not store the actual physical data. However we can perform statistical analysis and query data across subtables by querying the supertable, instead of needing to query each subtable.

High Compression Features

TDengine adopts a two-stage compression strategy. The first-stage compression uses delta-delta encoding, simple 8B method, zig-zag encoding, LZ4 and other algorithms, and the second-stage compression uses the LZ4 algorithm. The first-stage compression will perform specific algorithm compression for each data type, and the second-stage compression will be performed again for general purpose, provided that the parameter comp is set to 2 when building the library.

TDengine-based Architecture

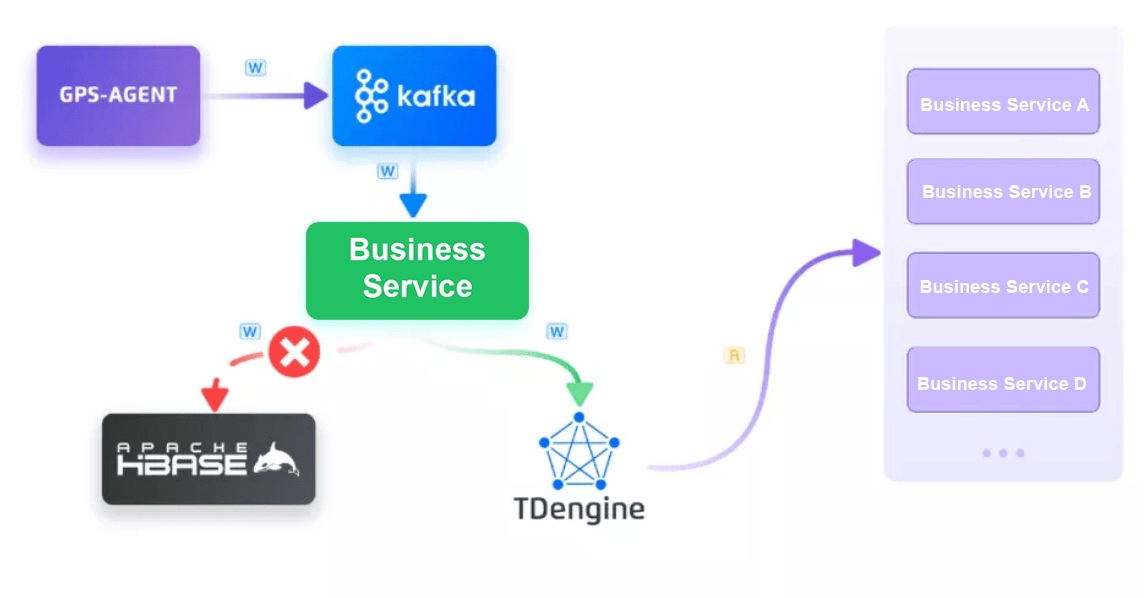

After thorough testing and verification, we introduced TDengine into our system. The new system architecture is shown in the following figure:

As can be seen from the architecture diagram, the in-vehicle data is still sent to Apache Kafka through the GPS-AGENT gateway for message parsing and then written to TDengine. The latest vehicle tracking information is no longer stored, or queried by the business services, in Redis, which simplifies the architecture. Querying and reporting is done through TDengine and HBase can be taken offline after a certain period of time.

Effects of Moving to TDengine

Since the migration to TDengine, various performance indicators have show significant improvement.

Compression Ratio

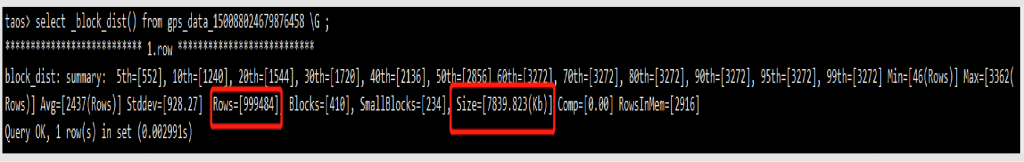

As shown in the figure, we see a table with 50,000 rows each of which is more than 600 bytes. The compressed size is 1665KB, and the compression ratio is as high as 1%. Next, let’s look at a subtable with millions of rows.

It actually occupies a disk size of 7839KB. Our compression ratio is much better than various official tests of TDengine. This is because of the repetitive nature of the data in our system and shows how TDengine uses the characteristics of time-series IoT data to optimize compression.

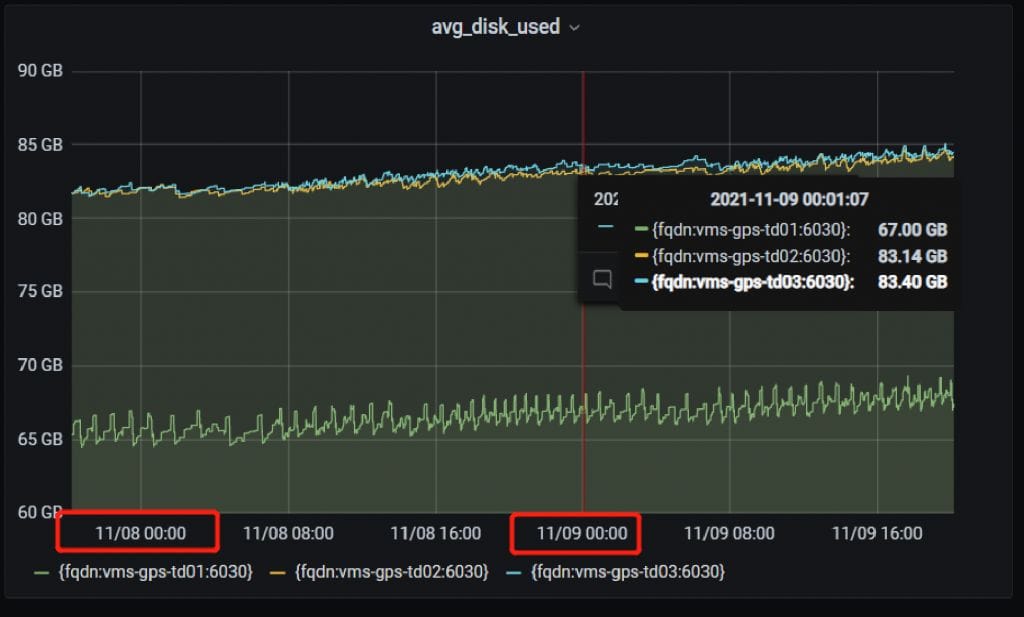

Daily Increment

Our current business daily write volume exceeds 50 million records. TDengine maintains the increasing disk size at about 1.4G per unit.

Overall Comparison of Various Performance Indicators

The figure below is a comparison of various indicators before and after implementation of TDengine.

The figure below is a comparison between HBase and TDengine. TDengine reduced the number of servers from 21 to 3 and the storage needs from 352G daily to 4G daily!

It can be seen from the comparison that TDengine has indeed greatly reduced our various costs.

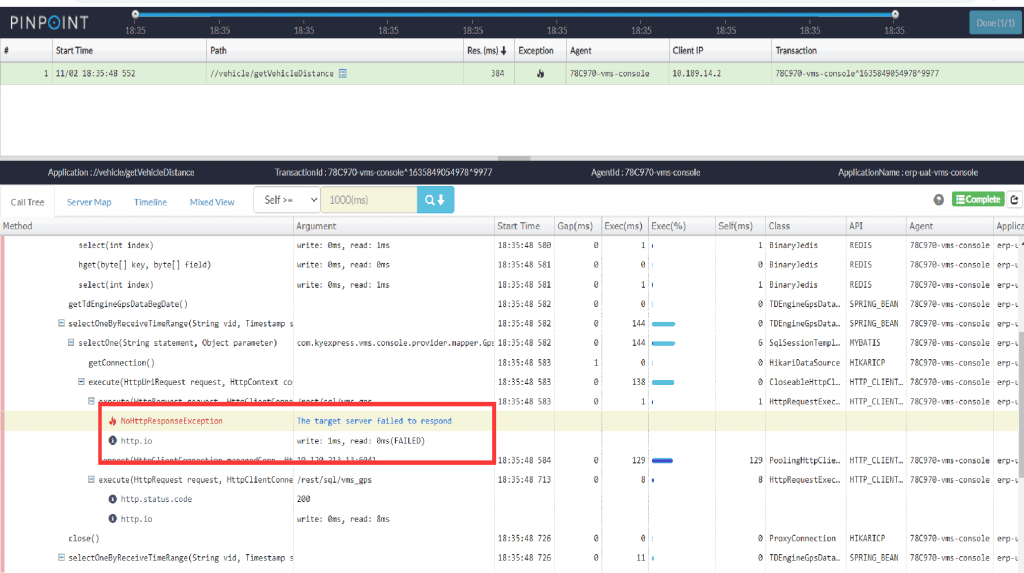

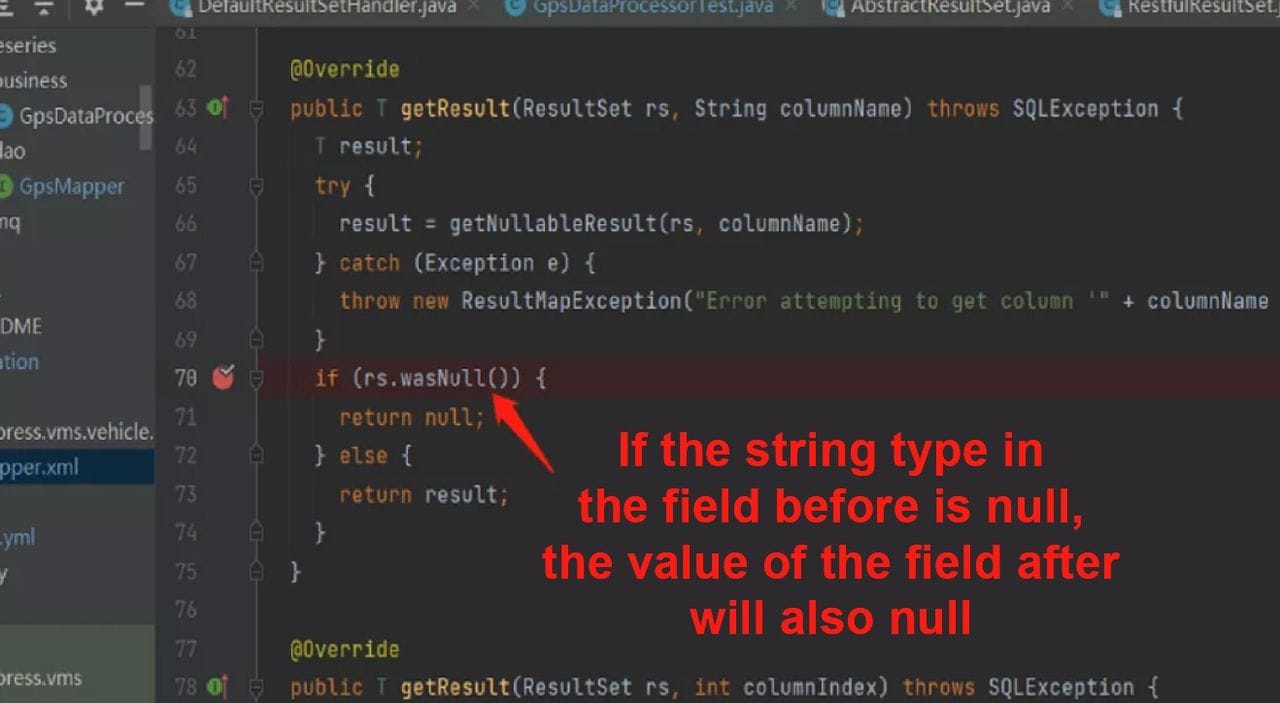

Issues and Suggestions

A relatively new system will inevitably encounter some issues and we have worked with the TDengine R&D team to resolve the issues. For example, we encountered the problem below during the process of using JDBC. Since TDengine is open source, we were able to raise a PR in Github to resolve the issue! This demonstrates not only the power of open source but a good reason to use TDengine.

There are two places we also hope that TDengine can be further optimized:

i. The monitoring function in version 2.3.0.x is relatively simple. We expect that later versions can provide more robust and more detailed monitoring. We noticed that the newly released version introduced a monitoring tool called TDinsight, and we will try it out soon.

ii. The current interval function does not support group by common column functionality, and we hope this will be supported in the future.

Finally, in the process of trial and deploying TDengine, we have received strong support from many developers at TDengine team, and we would like to express our gratitude to them.