Friends and developers often ask me why I started developing a new time series database in 2017 when there were already so many on the market, and why, after five years and with a team of more than 60 engineers, I am still so obsessed with the development of TDengine. Last weekend I sat down to write this blog post and share my thoughts with you all.

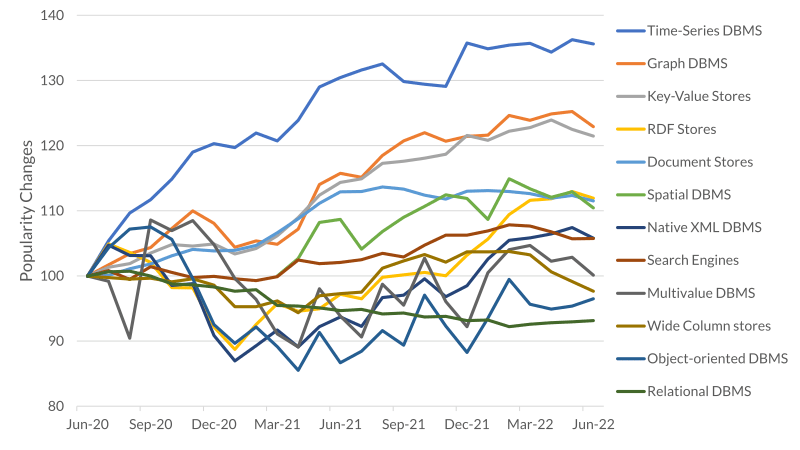

The idea of a purpose-built time series database (TSDB) is not new. Looking back at the history of the field, RRDtool, which came out in 1999, was probably the first time series database. However, as we can see from the database ranking site DB-Engines, it wasn’t until 2015 that time series databases started gaining in popularity, and over the past two years they have become the fastest-growing category of database management system.

At the end of 2016, I saw that a new era in information technology was beginning. Everything was becoming connected: from home appliances to industrial equipment, everything was becoming “smart” – and generating massive amounts of data. And not just any data, but time series data. I quickly realized that the efficient processing of time series data coming from these smart sensors and devices would become an essential element of technological development going forward, so I started to develop TDengine, a new time series database system, in June 2017.

When I first started TDengine back in 2017, my main concern was whether the market still had room for another time series database. I constantly pondered whether existing databases were good enough to run time series applications, and whether they already met business needs. Even today, this is still something that I think about all the time, asking myself: is this time series database really worth my dedication and obsession?

Now let me share with you my conclusions based on a technical analysis. I’ll go over the key elements of time series data processing one by one:

Scalability

Due to the expansion of IT infrastructure and the advent of the Internet of Things (IoT), the scale of data is growing rapidly. A modern data center may need to collect up to 100 million metrics – everything from network equipment and servers to virtual machines, containers, and microservices is constantly sending out time-series data. As another example, every smart meter in a distributed power grid generates at least one data point every minute, and there are over 102.9 million smart meters in the United States. It is impossible for a single machine to handle this much data, so any system designed to process time-series data must be scalable.

However, many of the market-leading time-series databases do not provide a scalable solution. Prometheus, the de facto standard time-series database for Kubernetes environments, does not have a distributed design, and it has to rely on Cortex, Thanos, or other third-party tools for scalability. InfluxDB offers clustering only to enterprise customers, not as open-source software.

To get around this, many developers build their own scalable solutions by deploying a proxy server between their application and their TSDB servers (like InfluxDB or Prometheus). The collected time-series data is then divided among multiple TSDB servers based on the hash of the time-series ID.
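The routing step in such a proxy can be sketched in a few lines. This is a minimal illustration, not the code of any particular proxy: the function names `shard_for` and `route`, the `(series_id, timestamp, value)` tuple layout, and the choice of SHA-256 as the hash are all assumptions made for the example.

```python
import hashlib

def shard_for(series_id: str, num_shards: int) -> int:
    """Map a time-series ID to a shard index using a stable hash."""
    # A cryptographic hash is used only because it is stable across
    # processes and restarts, unlike Python's built-in hash().
    digest = hashlib.sha256(series_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards

def route(points, num_shards):
    """Group (series_id, timestamp, value) points by destination shard."""
    batches = {i: [] for i in range(num_shards)}
    for series_id, ts, value in points:
        batches[shard_for(series_id, num_shards)].append((series_id, ts, value))
    return batches

# Hypothetical sample data: two smart meters, three points.
points = [("meter-1", 1, 0.5), ("meter-2", 1, 0.7), ("meter-1", 2, 0.6)]
batches = route(points, num_shards=4)
```

Because the shard depends only on the series ID, all points of one series land on the same TSDB server. Note that with plain modulo hashing, changing the number of servers remaps most series.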

For data ingestion purposes, this does solve the issue of scalability. But at the same time, the proxy server has to merge the query results from each underlying node, posing a major technical challenge. For some queries, like standard deviation, you can’t just merge the final results from each node: the proxy needs the raw data, or at least intermediate aggregation state, from every node. This means that you need to rewrite the entire query engine, which makes for a huge amount of development work.
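To see why standard deviation is awkward for a proxy, consider the small sketch below. Averaging the per-node results gives the wrong answer, so the proxy needs either the raw points or, assuming the nodes can be changed to expose it, intermediate state such as count, sum, and sum of squares. The helper names `partial_state` and `merge_stddev` and the sample values are hypothetical, and the sum-of-squares formula is chosen for brevity rather than numerical robustness.

```python
import math
import statistics

def partial_state(values):
    """What each node would have to return: count, sum, and sum of squares."""
    return (len(values), sum(values), sum(v * v for v in values))

def merge_stddev(states):
    """Combine per-node states into the population standard deviation."""
    n = sum(s[0] for s in states)
    total = sum(s[1] for s in states)
    total_sq = sum(s[2] for s in states)
    mean = total / n
    # Clamp at zero to guard against tiny negative values from rounding.
    return math.sqrt(max(total_sq / n - mean * mean, 0.0))

# Hypothetical data held by two different nodes.
node_a = [1.0, 2.0, 3.0]
node_b = [10.0, 20.0]

# Wrong: average the standard deviations computed on each node.
naive = (statistics.pstdev(node_a) + statistics.pstdev(node_b)) / 2
# Right: merge intermediate state, then finish the computation once.
merged = merge_stddev([partial_state(node_a), partial_state(node_b)])
# Reference: compute over all raw points in one place.
exact = statistics.pstdev(node_a + node_b)
```

Here `merged` agrees with `exact` while `naive` does not. Either way, the underlying nodes must return something other than their normal query result, which is exactly the query-engine work described above.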

A closer inspection of the design of InfluxDB and TimescaleDB shows that their scalability is actually quite limited. They store metadata in a central location, and each time series is always associated with a set of tags or labels. That means if you have one billion time series, the system needs to store one billion sets of tags.

You can probably see what the issue is here: when you aggregate multiple time series, the system needs to determine which time series meet the tag filtering conditions first, and in a large dataset, this causes significant latency. This is known as the high-cardinality problem of time-series databases. And how can we solve this problem? The answer is a distributed design for metadata processing. Metadata cannot be stored in a central location, or it will quickly become a bottleneck. One simple solution is to use a distributed relational database for metadata, but this makes the system complicated, harder to maintain, and more expensive.
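As a rough illustration of distributed metadata processing, the sketch below keeps each shard's tags next to its data and evaluates the tag filter on every shard independently. It is a toy model under stated assumptions, not the design of TDengine or any other database: the function names, the dictionary layout, and the tag keys are all made up for the example, and a real system would run the per-shard step in parallel on separate nodes.

```python
def matches(tags, conditions):
    """True when every required tag key has the required value."""
    return all(tags.get(key) == value for key, value in conditions.items())

def filter_on_shard(shard_tags, conditions):
    """Run the tag filter against one shard's local metadata only."""
    return [sid for sid, tags in shard_tags.items() if matches(tags, conditions)]

def filter_all(shards, conditions):
    """Fan the filter out to every shard and combine the matching series IDs."""
    result = []
    for shard_tags in shards:  # sequential here; parallel in a real system
        result.extend(filter_on_shard(shard_tags, conditions))
    return sorted(result)

# Hypothetical metadata: each shard knows only the tags of its own series.
shards = [
    {"meter-1": {"city": "Austin", "type": "power"},
     "meter-2": {"city": "Boston", "type": "power"}},
    {"meter-3": {"city": "Austin", "type": "water"},
     "meter-4": {"city": "Austin", "type": "power"}},
]
austin_power = filter_all(shards, {"city": "Austin", "type": "power"})
```

No single node has to hold or scan the tags of every series, so the cost of the filtering step is spread across the cluster.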

TDengine 1.x was designed to have all metadata stored on the management node, so it suffered from the high-cardinality problem, too. We made some improvements in the architecture of TDengine 2.x, storing tag values on each virtual node instead of the central management node, but the creation of new time series and the time taken to restart the system were still major bottlenecks. With the newly released TDengine 3.0, we were finally able to solve the high-cardinality problem completely.

Complexity

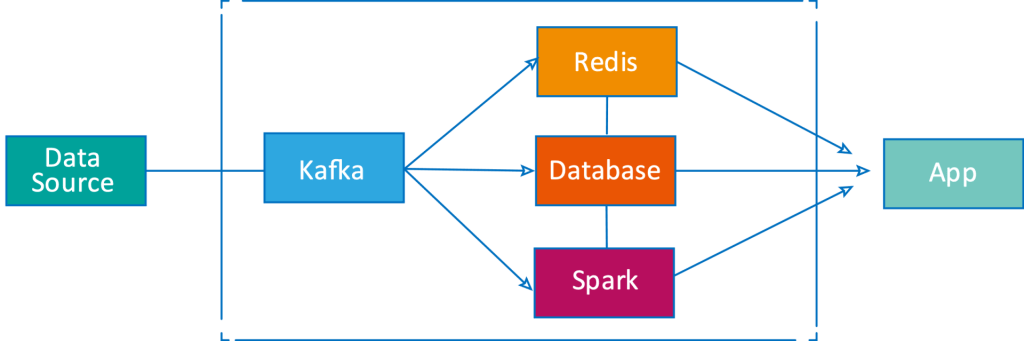

A database is a tool to store and analyze data. But time-series data processing requires more than just storage and analytics. In a typical time-series data processing platform, the TSDB is always integrated with stream processing, caching, data subscription, and other tools.

Stream Processing

Time-series data is a stream. To gain insight into operations faster or detect errors in less time, data points must be analyzed as soon as they arrive at the system. Thus stream processing is a natural fit for time-series data. Stream processing can be time-driven, producing new results at set intervals (known as continuous query), or data-driven (also called event-driven), producing new results whenever a new data point arrives.
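The difference between the two modes can be shown with a small sketch. It simulates a stream with an in-memory list of `(timestamp, value)` pairs, so it only illustrates the triggering logic. The function names, the averaging rule, and the threshold rule are assumptions chosen for the example.

```python
from collections import defaultdict

def time_driven(points, interval):
    """Continuous-query style: one average per fixed time window."""
    windows = defaultdict(list)
    for ts, value in points:
        # Align each timestamp to the start of its window.
        windows[ts - ts % interval].append(value)
    return {start: sum(vals) / len(vals) for start, vals in sorted(windows.items())}

def data_driven(points, threshold):
    """Data-driven style: evaluate a rule on every point as it arrives."""
    for ts, value in points:
        if value > threshold:
            yield (ts, value)

# Hypothetical stream of (timestamp in seconds, reading).
points = [(0, 1.0), (5, 3.0), (12, 9.0), (18, 1.0)]

averages = time_driven(points, interval=10)      # {0: 2.0, 10: 5.0}
alerts = list(data_driven(points, threshold=8))  # [(12, 9.0)]
```

The time-driven version produces one result per window regardless of how many points arrived, while the data-driven version can react to the reading at `t=12` immediately.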

InfluxDB, Prometheus, TimescaleDB, and TDengine all support continuous query. This is very useful for monitoring dashboards, as all of the charts and diagrams can be updated periodically. But not all data processing requirements – ETL, for example – can be met by continuous query alone, and time-series databases need to support event-driven stream processing.

Before TDengine 3.0, no time-series database on the market had out-of-the-box support for event-driven stream processing. Instead, time-series data platforms are integrated with Spark, Flink, or other stream processing tools that are not designed for time-series data. These tools have difficulty processing the millions or even billions of streams in time-series datasets, and even if they are up to the task, it comes at the price of a huge amount of computing resources.

Caching

For many time-series data applications, like application performance monitoring, the values of data at specific times are not important. These applications are focused only on trends. However, IoT scenarios are a notable and important exception. For example, a fleet management system always wants to know the current position of each truck. For a smart factory, the system always needs to know the current state of every valve and the current reading of every meter.

Most time-series databases, including InfluxDB, TimescaleDB, and Prometheus, cannot guarantee on their own that the latest data point of a time series can be returned with minimum latency. To enable the current value of each time-series to be returned without high latency, these data platforms are integrated with Redis. When new data points arrive at the system, they have to be written into Redis as well as the database. While this solution does work, it increases the complexity of the system and the cost of operations.

TDengine, on the other hand, has supported caching from its first release. In many use cases, Redis can be completely removed from the system, making the overall data platform much simpler and less expensive to run.
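The general idea of a built-in last-value cache can be sketched as follows. This is only an illustration of keeping the newest point per series on the write path; it is not TDengine's implementation, and the class and method names are hypothetical.

```python
class LastValueStore:
    """Toy store that keeps full history plus the newest point per series."""

    def __init__(self):
        self.history = {}  # series_id -> list of (timestamp, value)
        self.latest = {}   # series_id -> (timestamp, value)

    def write(self, series_id, ts, value):
        """Persist the point and update the cache in the same call."""
        self.history.setdefault(series_id, []).append((ts, value))
        # Only move the cache forward: a late-arriving point must not
        # overwrite a newer one.
        current = self.latest.get(series_id)
        if current is None or ts >= current[0]:
            self.latest[series_id] = (ts, value)

    def last(self, series_id):
        """Return the newest point without scanning the history."""
        return self.latest.get(series_id)

# Hypothetical fleet-management data: positions arrive out of order.
store = LastValueStore()
store.write("truck-7", 10, (30.27, -97.74))
store.write("truck-7", 20, (30.30, -97.70))
store.write("truck-7", 15, (30.28, -97.72))  # late point
current_position = store.last("truck-7")
```

Because the cache is updated inside the same write call, the application does not need a second write to an external system such as Redis.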

Data Subscription

The message queue plays an important role in many system architectures. Incoming data points are first written into a message queue and then consumed by other components in the system, including databases. The data in the message queue is usually kept for a specified period of time (seven days by default in Kafka, for example). This is analogous to the retention policy in a time-series database.

Most time-series databases ingest data very efficiently, up to millions of data points a second. This means that if time-series databases can provide data subscription functionality, they can replace message queues entirely, again simplifying system design and reducing costs.

In a time-series database, incoming data points are stored in the write-ahead log (WAL) in append-only mode. This WAL file is normally removed once the data in memory is persisted to the database, and used only to recover data if the system crashes. However, if we don’t remove the WAL file automatically but keep it for a specified period, the WAL file can become a persistent message queue and be consumed by other applications.

Providing data subscription via the WAL file has another big benefit: it enables filtering on data subscription. The system can save resources by passing only the data points that meet the filtering conditions to applications.
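A retained, append-only log that consumers read by offset, with an optional filter applied on the server side, can be modeled as below. This is a simplified in-memory sketch of the concept, not TDengine's WAL format or subscription API. The `WalLog` class, its methods, and the record layout are assumptions for illustration.

```python
class WalLog:
    """Append-only log with time-based retention and offset-based reads."""

    def __init__(self, retention):
        self.retention = retention
        self.entries = []      # list of (offset, timestamp, record)
        self.next_offset = 0

    def append(self, ts, record):
        """Add a record at the end of the log and return its offset."""
        offset = self.next_offset
        self.entries.append((offset, ts, record))
        self.next_offset += 1
        return offset

    def expire(self, now):
        """Drop entries older than the retention period."""
        self.entries = [e for e in self.entries if now - e[1] <= self.retention]

    def poll(self, from_offset, predicate=lambda record: True):
        """Return matching (offset, record) pairs and the next offset to poll."""
        matched = [(off, rec) for off, _, rec in self.entries
                   if off >= from_offset and predicate(rec)]
        return matched, self.next_offset

# Hypothetical usage: a consumer subscribes only to hot readings.
wal = WalLog(retention=100)
wal.append(0, {"device": "a", "temp": 20})
wal.append(10, {"device": "b", "temp": 95})
wal.append(150, {"device": "a", "temp": 99})
hot, next_offset = wal.poll(0, lambda r: r["temp"] > 90)
```

The consumer receives only the two readings above 90 and remembers `next_offset` for its next poll, so records that fail the filter never leave the database.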

Of all the time-series databases available today, TDengine is the only one with data subscription. With TDengine 3.0, we are now able to provide comparable performance and the same API set as Kafka.

Summary

While time-series databases still work without event-driven stream processing, caching, and data subscription, developers are forced to integrate their TSDBs with other tools to achieve the required functionality. This makes the system design overly complicated, more resource-intensive, and harder to maintain. With these features built inside a time-series database, the overall system architecture is simplified and the cost of operation is reduced significantly.

Cloud Native

The most beautiful thing about cloud computing is its elasticity – storage and compute resources are essentially infinite, and you only pay for what you need. This is one of the main reasons that all applications, including time-series databases, are moving to the cloud.

Unfortunately, most databases are just “cloud-ready”, not cloud-native. When you buy the cloud service provided by some database vendors, such as TimescaleDB, you need to tell the system how many virtual servers (including the CPU and memory configuration) and how many gigabytes of storage you want. Even if you don’t run any queries, you’re still forced to pay for the computing resources, and if your data grows in scale, you need to decide whether to buy more resources. By offering this kind of cloud solution, database service providers are really just reselling cloud platforms.

To fully utilize the benefits provided by the cloud platform, time-series databases must be cloud-native. To achieve this, they need to be redesigned with the following three points in mind:

- Separation of compute and storage: In containerized environments, specific containers may be up or down at any time, but stored data is persistent. A traditional time series database architecture is unable to cope with this because it stores data locally. Furthermore, to run a complicated query or do batch processing, more compute nodes need to be added dynamically to speed up the process.

- Elasticity: The system must be able to adjust storage and compute resources based on workload and latency requirements. For compute resources, this is not a hard decision for the system to make. But storage resources are another story. To scale a distributed database up or down, its shards have to be merged or split while live data is arriving and queries are running. Designing a system that can accomplish this is no easy task.

- Observability: The status of a time-series database must be monitored together with the other components of the system, so a good database needs to provide full observability to make operations and management simpler.

At this point, any newly developed time series database must be designed to be cloud native. TDengine was designed from day one with a highly scalable distributed architecture, and as of version 3.0 it fully supports the separation of compute and storage.

Ease of Use

While ease of use is a subjective term, we can attempt to list here a few criteria for a more user-friendly time-series database:

- Query language: As SQL is still the most popular database query language, SQL support essentially eliminates the learning curve for developers. Proprietary query languages, on the other hand, require developers to use their valuable time to learn them and also increase migration costs to or from other databases. In TDengine, you can reuse existing SQL queries with little to no change, and some queries can even be simplified thanks to enhancements in TDengine.

- Interactive console: An interactive console is the most convenient way for developers to manage running databases or run ad hoc queries. This remains true even with time-series databases deployed in the cloud.

- Sample code: Developers do not have time to read entire documentation sets just to learn how to use a certain feature or API. New database systems must provide sample code for major programming languages that developers can simply copy and paste into their applications.

- Data migration tools: A database management system needs to provide convenient and efficient tools to get data in and out of the database. The source and destination may be a file, another database, or a replica in a remote data center, and the transferred data may be a whole database, a set of tables, or data points in a time range.

Time for a New Time Series Database

With these four major factors in mind, I started to develop a new time series database from scratch in 2017. After many iterations, the TDengine team and I are proud to have released TDengine 3.0 on August 23, 2022.

TDengine 3.0 is a comprehensive, purpose-built platform designed from day one to meet the needs of next-generation time series applications. It features a true cloud-native time series database design in which compute and storage resources are separated and can each be scaled dynamically based on the workload. It can be deployed on public, private, or hybrid clouds. Its scalability is unprecedented, and it can support one billion time series while still outperforming other time series databases in terms of data ingestion rate and query latency.

Additionally, TDengine 3.0 simplifies system architecture and reduces operating costs with its built-in caching, stream processing (event- and time-driven), and data subscription features. It is no longer necessary to integrate a multitude of third-party components just to have the functionality you need for time-series data processing.

Most importantly, TDengine 3.0 helps you gain insight into your time-series data with analytical capabilities comparable to relational databases and support for standard SQL syntax with time-series-specific extensions.

Since we started TDengine back in 2017, through versions 1.0, 2.0, and 3.0, it has earned recognition from a large number of enterprise customers and community users. As of the writing of this article, it has gathered over 19,000 stars and 4,400 forks on GitHub since going open source in July 2019 and has quickly risen in the time series database ranking on DB-Engines. Over 140,000 instances of TDengine are running in 50 countries, and more than 1,000 developers clone our source code every day.

TDengine 3.0 isn’t the end of the story for time series databases, but we believe that it solves all the major issues in the field today. If you’re interested in trying it yourself for free, you can download the installation package or check out the source code in our GitHub repository. As a developer, I would appreciate any comments, feedback, or even contributions that you may have to improve the product further.