Yunda Express’s MySQL database could not keep up with a growing volume of hundreds of millions of records of order data. In order to meet business needs and optimize time-series records processing and ensure future scalability, Yunda added TDengine to their architecture.

As one of the biggest logistics companies in the world, Yunda’s daily order scanning volume can reach hundreds of millions. To ensure fast and efficient business operations, Yunda order processing system needs to aggregate and count all scanned order data from a nationwide network, and feed it back to users in real-time. All of this data is necessary for outlet staff as well as distribution center staff to forecast site scan volumes as well as individual workloads in addition to other relevant statistics.

Our current MySQL system could not handle our growing volume of scanned order data as Yunda’s business grew.

To ensure that we would have a fast responsive system, that would meet the needs of our fast-expanding business we started researching various databases. We realized that the data uploaded in real-time, by equipment is very important; and of course, this is time-series data. This was the data that needed to be optimized, and MySQL could not handle it properly. We came across TDengine and realized that its features, data model, and query performance on billions of records, were great and could meet our business requirements. We were satisfied with the results of our comprehensive testing and use of TDengine.

TDengine In Production

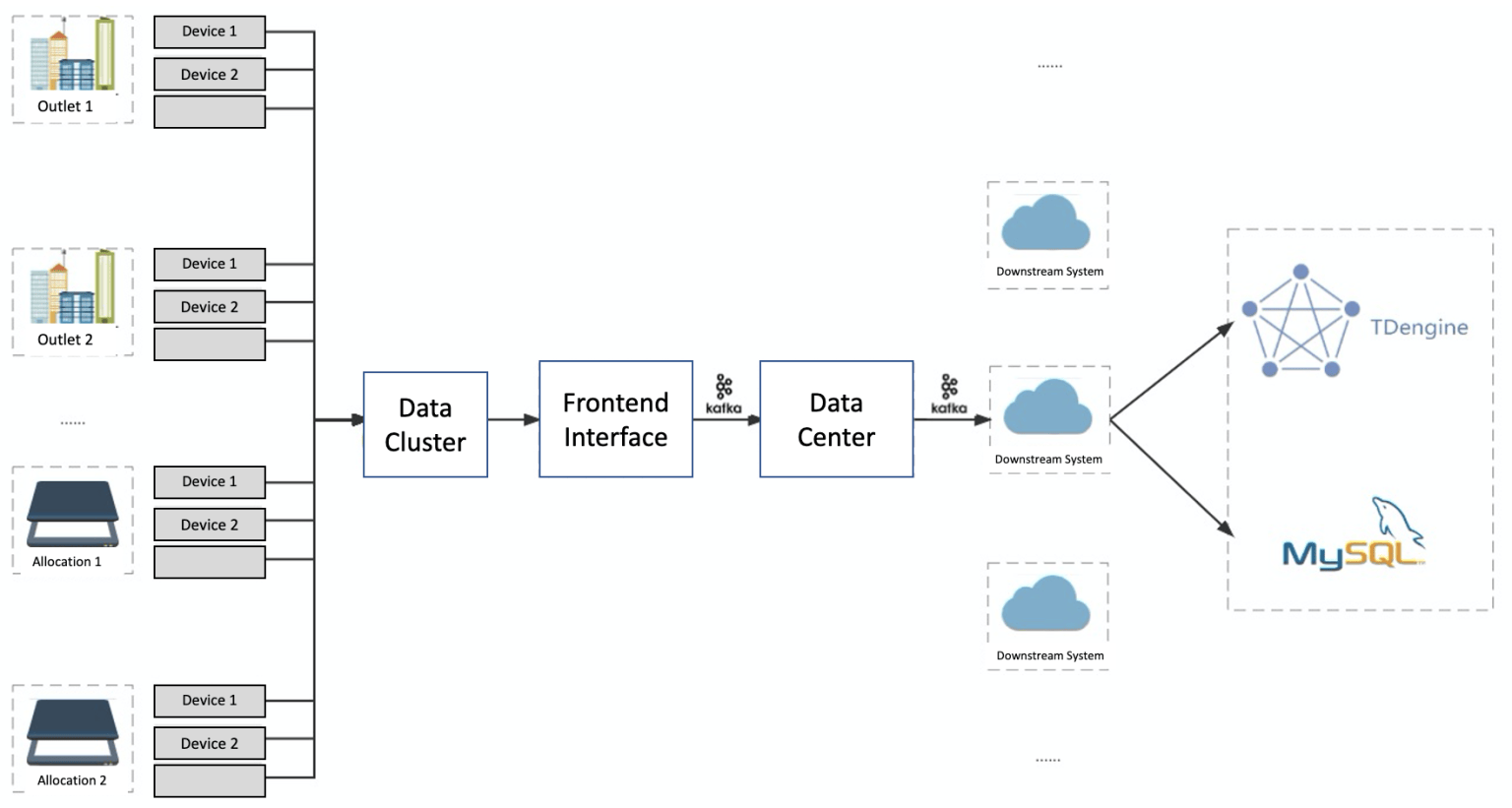

Our current architecture consists of Spring Boot + MyBatis (Java Persistence Framework) + MySQL + TDengine. TDengine is the time-series database (TSDB) responsible for storing and processing time-series data, and MySQL is responsible for the storage and processing of non-time series data. The high-level architecture is shown in the diagram below:

The deployment of TDengine was relatively simple since we did not have to migrate data and only had to add TDengine as a data storage repository for real-time time-series data.

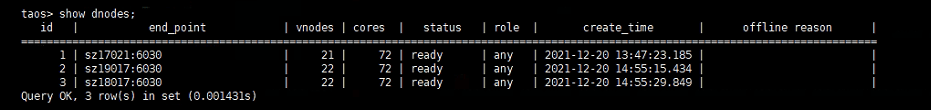

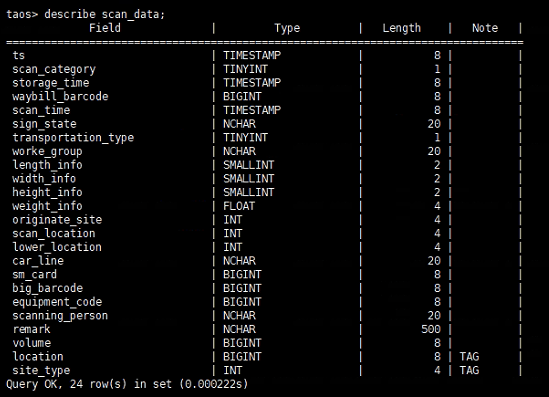

Currently, we are using TDengine version 2.2.2.0. We have deployed a cluster on 3, 16 Core servers with 64G RAM. The write speed is about 5000 rows per second. Our schema uses the novel idea of “one table per device” which in our case translates to “one scanner, one table”. We set location and site type as labels/tags for each device.

We put the location in the table name when building the table so that even if the location changes, we still have historical context for the device data. For example, scan_6100000000265_790117 represents the scanner whose device number is 6100000000265 and whose address is 790117. If this scanner changes its location, we can simply create a new subtable of scan_6100000000265_800000, which is separate from the old table and allows us to have both the new location data and old location data for the device.

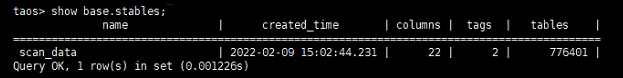

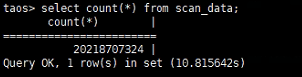

At present, there are nearly one million subtables under the “scan_data” supertable. After over a month of use, about 20 billion rows of data have been saved in TDengine.

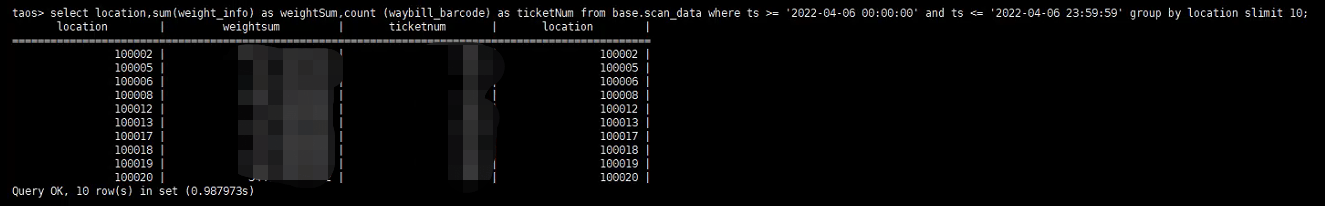

It is worth mentioning that common queries based on TDengine can be executed within 1 second, and some specific queries can even be executed in milliseconds.

select location, sum(weight_info) as weightSum, count (waybill_barcode) as ticketNum from base.scan_data where ts>='2022-04-07 00:00:00' and ts<='2022-04-07 23:59:59' group by location;

Another example.

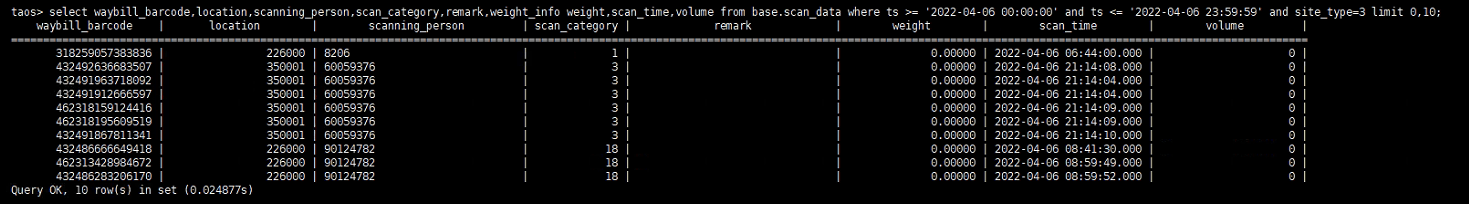

select waybill_barcode, location, scanning_person, scan_category, remark, weight_info weight, scan_time, volume from base.scan_data where ts>='2022-04-07 00:00:00' and ts<='2022-04-07 23:59:59' and site_type=3 limit 0,10;

In terms of storage, we can see that our supertable has 20 fields, most of which are of type int and about 5 are of type varchar. The largest field is an nchar with a length of 500 used to store Chinese character strings which occupies less than 300GB. When we used MySQL to store this data we needed several TB of disk space. Additionally, TDengine consumes fewer resources in terms of CPU and memory and is far more efficient.

Summary

Needless to say, our testing and use of TDengine were not all smooth sailing. We also encountered some issues along the way which we outline below.

- We underestimated data concurrency and had set the default time precision to be at millisecond resolution. Due to this we often encountered repeated timestamps which resulted in write failures and data not being stored in the database. However, we were able to resolve this by increasing the default time precision to be at nanosecond resolution.

- If a new label is added to the super-table, the label of the existing data is null. The labels for each subtable need to be manually changed which is not very user-friendly.

- Since TDengine’s table creation is single-threaded, there is a bottleneck at about 10000/s and so it is not recommended to use automatic table creation during initial setup. If the number of tables is not large it is not an issue.

Fortunately, we were able to get a lot of help from TDengine’s community staff to resolve the above issues with a combination of parameter configuration optimization and reasonable workarounds. Since then we have been considering expanding our use of TDengine across our growing business. We are of the opinion that with the features and optimizations anticipated in TDengine 3.0, it will be better integrated into the use cases within Yunda. We expect to have increasingly closer cooperation with TDengine in the future.