In the era of the Internet of Things (IoT), vehicles are no longer just self-contained means of transportation; they are also Internet-connected endpoints capable of two-way communication. The real-time data generated by connected vehicles improves user experiences in car rental and fleet management businesses, enables innovative business models such as usage-based insurance, and lays the foundation for autonomous driving and the vehicle-to-everything (V2X) paradigm. Typically, sensors in a vehicle collect real-time information such as speed and GPS location and send it to a cloud data processing platform. The platform persists the vehicle data quickly and provides both real-time and historical data analysis for front-end applications.

For 100,000 connected vehicles that each report data every 1 to 30 seconds, about 0.29 to 8.64 billion records are generated every day. At this scale, the data persistence layer is often both the performance bottleneck of the platform and one of the largest items in its budget. A typical HBase solution usually needs at least 6 high-performance servers, each with an 8-core CPU and 32GB of RAM, while a time series database (TSDB) such as TDengine needs only one server with a 2-core CPU and 8GB of RAM.

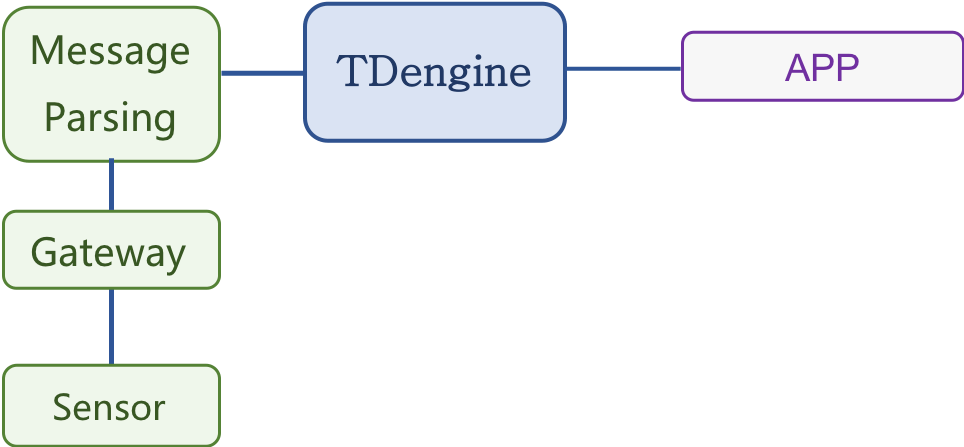

Architecture

The traditional HBase solution is extremely heavy for a connected vehicle data processing platform.

In both solutions, real-time vehicle data is collected by sensors, sent through network gateways, authorized and parsed, and then passed to the core processing modules. HBase itself provides only data persistence, so the solution usually also needs Kafka for message queuing and subscription, a Redis cluster for caching the latest real-time data, and possibly Spark for data aggregation and computation. With TDengine, the architecture is much lighter, because all of the functionality mentioned above is already built in. TDengine is a purpose-built time series data processing engine that integrates a high-performance time series storage and query engine with message queuing, caching, data subscription, and stream computing. In the rest of this article, we show how to build the backend of a demo connected vehicle platform using TDengine.

Data Model

Connected vehicle data is composed of a variety of sensor and usage data. Many companies use data models based on the ISO 22901 diagnostic data format, with their own customizations. The collected data usually includes the vehicle location, drivetrain metrics, and some third-party tracking indicators. Here we use a simple data model that assumes the sensor collects only the following key measurements:

- Timestamp

- Longitude

- Latitude

- Altitude

- Direction

- Speed

Static information about vehicles:

- License Plate Number

- Vehicle Model

- VIN

According to TDengine's user manual, the best practice in IoT scenarios is to create one supertable for each type of sensor and then one table for every individual sensor (here, every vehicle). The supertable defines the columns and their data types and provides a convenient interface for aggregate queries across multiple devices. Each table is tagged with static information such as the license plate number, vehicle model, VIN, and driver information. Tags can still be modified after the tables are created.

We first create a database for this demo.

```sql
create database demodb cache 8192 ablocks 2 tblocks 1000 tables 10000;
use demodb;
```

Next, create the supertable for the vehicle sensors. The data columns are the collection time `ts`; the vehicle location `longitude`, `latitude`, and `altitude`; and the driving status `direction` and `velocity`. Each vehicle has its own table, tagged with its license plate number `card` and vehicle model `model`.

```sql
create table vehicles(ts timestamp, longitude bigint, latitude bigint, altitude int, direction int, velocity int)
  tags(card int, model binary(10));
```

Then create a table `v1` for a vehicle whose license plate number is 28436 and whose model is `MyModel`.

```sql
create table v1 using vehicles tags(28436, 'MyModel');
```

Data Insertion

Now let's insert a new data record into TDengine.

```sql
insert into v1 values('2019-07-03 18:48:59.000', 1, 2, 3, 4, 5);
```

For testing purposes, records can be inserted in batches to improve efficiency. Refer to the TDengine documentation for more details on batch insertion.

Here we provide a vehicle data simulator written in C. The simulator creates 100,000 tables and writes about one month of data into each table: one record per minute, 44,000 records per table in total. Please note that the data is synthetic.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <time.h>
#include <unistd.h>
#include "taos.h"

/* Written against the TDengine 1.x C client API, in which taos_query()
 * returns 0 on success and a non-zero code on failure. */
int main(void) {
  taos_init();

  TAOS *taos = taos_connect("127.0.0.1", "root", "taosdata", NULL, 0);
  if (taos == NULL) {
    printf("failed to connect to server, reason:%s\n", taos_errstr(taos));
    exit(1);
  }

  if (taos_query(taos, "create database db cache 8192 ablocks 2 tblocks 1000 tables 10000") != 0) {
    printf("failed to create database, reason:%s\n", taos_errstr(taos));
    exit(1);
  }
  if (taos_query(taos, "use db") != 0) {
    printf("failed to use database, reason:%s\n", taos_errstr(taos));
    exit(1);
  }

  /* Supertable shared by all vehicles; the buffer is reused for every statement. */
  char sql[65000] = "create table vehicles(ts timestamp, longitude bigint, latitude bigint, altitude int, direction int, velocity int) tags(card int, model binary(10))";
  if (taos_query(taos, sql) != 0) {
    printf("failed to create stable, reason:%s\n", taos_errstr(taos));
    exit(1);
  }

  time_t begin = time(NULL);

  for (int table = 0; table < 100000; ++table) {
    /* One table per vehicle, tagged with card = table and model = 't<table>'. */
    sprintf(sql, "create table v%d using vehicles tags(%d, 't%d')", table, table, table);
    if (taos_query(taos, sql) != 0) {
      printf("failed to create table v%d, reason:%s\n", table, taos_errstr(taos));
      exit(1);
    }

    /* 44 batches of 1000 rows, one row per minute starting at 1561910400000 ms. */
    for (int loop = 0; loop < 44; loop++) {
      int len = sprintf(sql, "insert into v%d values", table);
      for (int row = 0; row < 1000; row++) {
        len += sprintf(sql + len, "(%lld,%d,%d,%d,%d,%d)",
                       1561910400000LL + 60000LL * (row + loop * 1000LL),
                       row, row, row, row, row);
      }
      if (taos_query(taos, sql) != 0) {
        printf("failed to insert into table v%d, reason:%s\n", table, taos_errstr(taos));
      }
    }
  }

  time_t end = time(NULL);
  printf("insert finished, time spent %ld seconds\n", (long)(end - begin));

  taos_close(taos);
  return 0;
}
```

To compile the simulator, save the source code as test.c and create the following makefile in the same directory.

```makefile
ROOT = ./
TARGET = exe
LFLAGS = -Wl,-rpath,/usr/local/lib/taos/ -ltaos -lpthread -lm -lrt
CFLAGS = -O3 -g -Wall -Wno-deprecated -fPIC -Wno-unused-result -Wconversion -Wno-char-subscripts -D_REENTRANT -Wno-format -DLINUX -msse4.2 -Wno-unused-function -D_M_X64 -std=gnu99 -I/usr/local/include/taos/

all: $(TARGET)

# Recipe lines below must begin with a tab character.
exe:
	gcc $(CFLAGS) ./test.c -o $(ROOT)test $(LFLAGS)

clean:
	rm -f $(ROOT)test
```

After compilation, you will get an executable file named test in the same directory. To run it, execute:

```
./test
```

In this test, the TDengine service and the simulator ran on the same machine with a 2-core CPU and 8GB of RAM. Inserting all 4.4 billion records (100,000 tables × 44,000 records) took 3,946 seconds, an insertion rate of 4,400,000,000 / 3,946 ≈ 1,115,000 records per second, or about 5,575,000 data points per second (five measurement values per record). Because this result comes from a single-threaded client, multi-threaded writing would likely increase throughput further. Even so, the current write performance is adequate for a fleet of 100,000 vehicles: at one record per vehicle per second, such a fleet generates only 100,000 records per second.

Query the Vehicle Data

TDengine includes many optimizations for time series queries. Using the dataset generated by the simulator above, we ran some common queries in TDengine's CLI, taos, and recorded the results below.

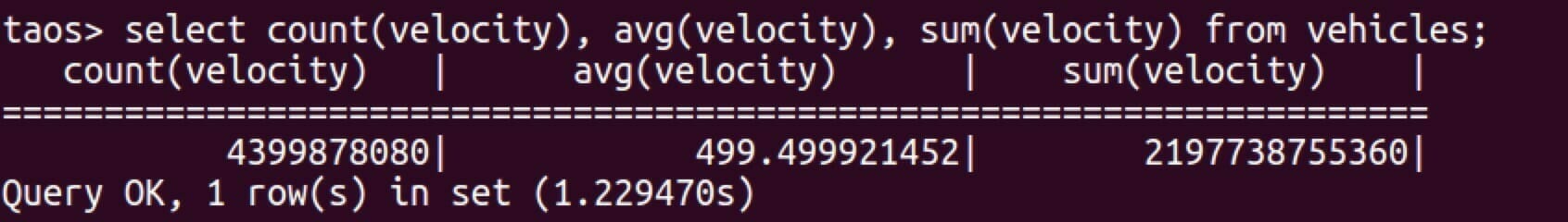

Query on Full Volume

Query the total number of records and the average and sum of velocity across all 100,000 vehicles for the whole month.

```sql
select count(velocity), avg(velocity), sum(velocity) from vehicles;
```

Details of a Single Vehicle

Query the data of a single vehicle over different time ranges.

```sql
select last(*) from v1;
select * from v1 where ts >= '2019-07-01 00:00:00' and ts < '2019-07-01 01:00:00' >> 1h.txt;
select * from v1 where ts >= '2019-07-01 00:00:00' and ts < '2019-07-02 00:00:00' >> 1d.txt;
select * from v1 where ts >= '2019-07-01 00:00:00' and ts < '2019-07-11 00:00:00' >> 10d.txt;
select * from v1 where ts >= '2019-07-01 00:00:00' and ts < '2019-08-01 00:00:00' >> 31d.txt;
```

| Query Content | Latency (ms) |
|---|---|
| Last record | 2.3 |
| 1 hour of data | 2.1 |
| 1 day of data | 6.3 |
| 10 days of data | 15.4 |
| 31 days of data | 31.6 |

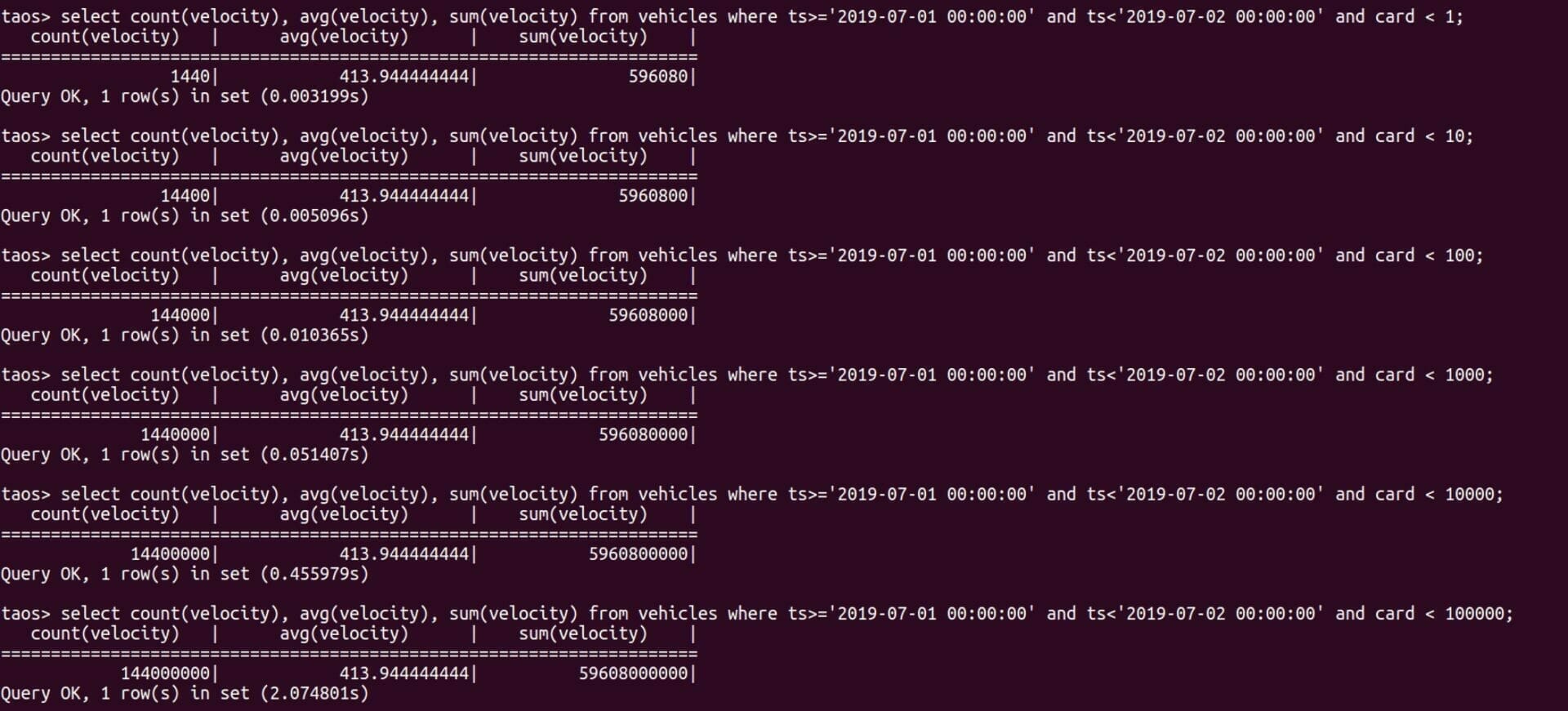

Multi-vehicle Aggregation over 1 Day

Aggregate the data of multiple vehicles over one day.

| Query Content | Latency (ms) |
|---|---|
| 1-Vehicle Aggregation Over 1 Day | 3.2 |
| 10-Vehicle Aggregation Over 1 Day | 5.1 |
| 100-Vehicle Aggregation Over 1 Day | 10.4 |
| 1000-Vehicle Aggregation Over 1 Day | 51.4 |
| 10,000-Vehicle Aggregation Over 1 Day | 455.9 |
| 100,000-Vehicle Aggregation Over 1 Day | 2074.8 |

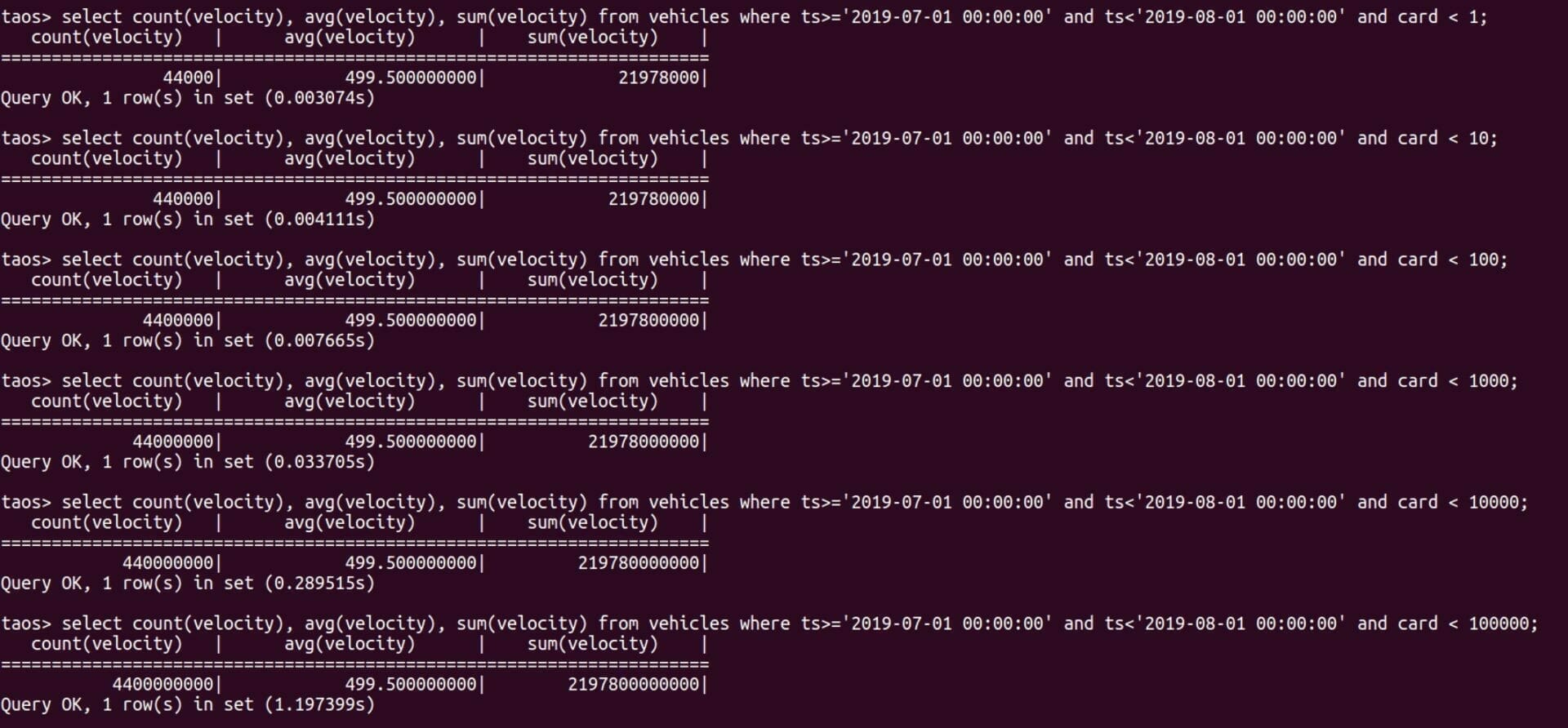

Multi-vehicle Aggregation over 1 Month

Aggregate the data of multiple vehicles over one month.

| Query Content | Latency (ms) |
|---|---|
| 1-Vehicle Aggregation Over 1 Month | 3.1 |
| 10-Vehicle Aggregation Over 1 Month | 4.1 |
| 100-Vehicle Aggregation Over 1 Month | 7.7 |
| 1000-Vehicle Aggregation Over 1 Month | 33.7 |
| 10,000-Vehicle Aggregation Over 1 Month | 289.5 |
| 100,000-Vehicle Aggregation Over 1 Month | 1197.4 |

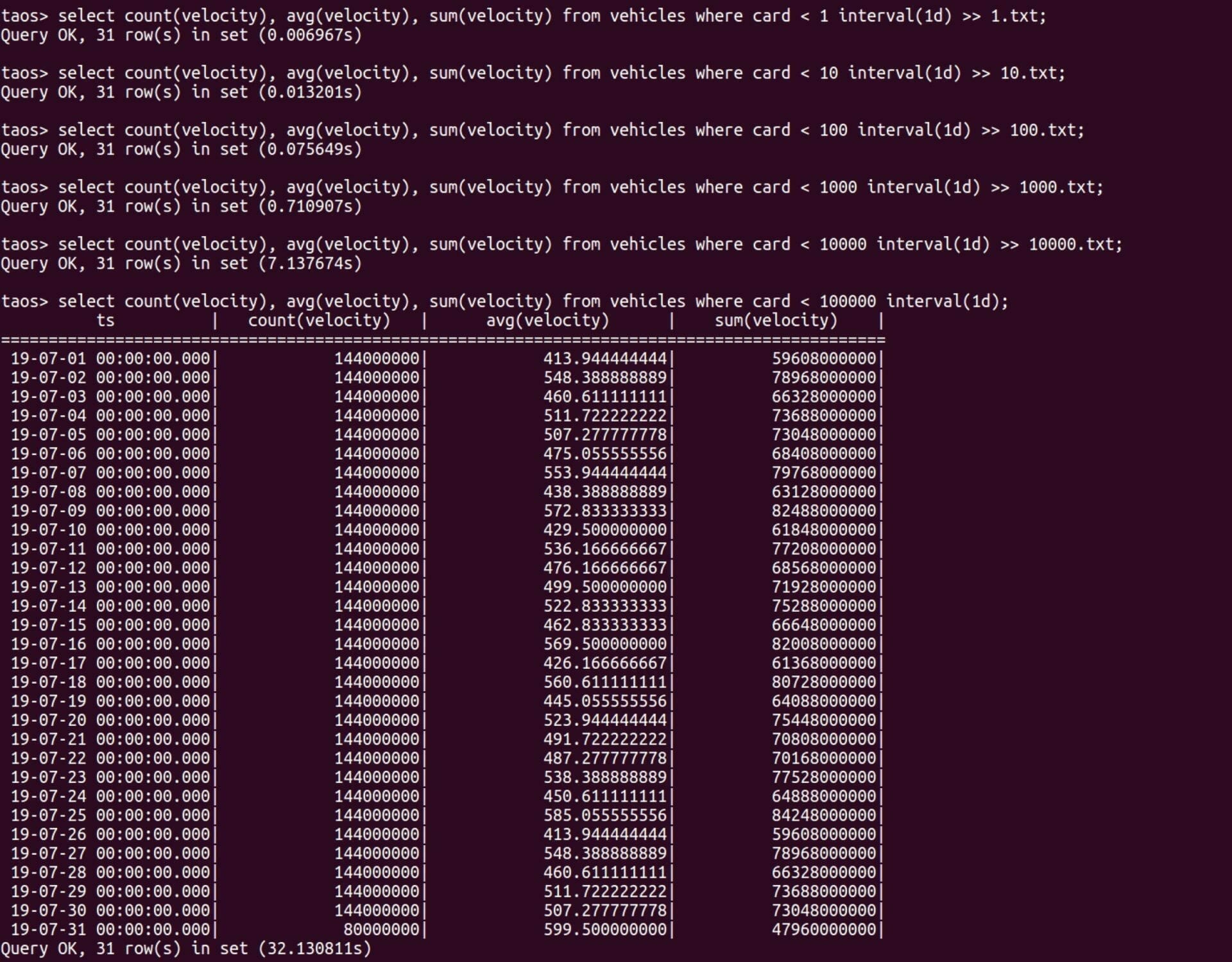

Multi-vehicle Data Downsampling over 1 Month

Aggregate the data of multiple vehicles over one month with downsampling.

| Query Content | Latency (ms) |
|---|---|
| 1-Vehicle Downsampling Over 1 Month | 6.9 |
| 10-Vehicle Downsampling Over 1 Month | 13.2 |
| 100-Vehicle Downsampling Over 1 Month | 75.6 |
| 1000-Vehicle Downsampling Over 1 Month | 710.9 |
| 10,000-Vehicle Downsampling Over 1 Month | 7137.6 |
| 100,000-Vehicle Downsampling Over 1 Month | 32130.8 |

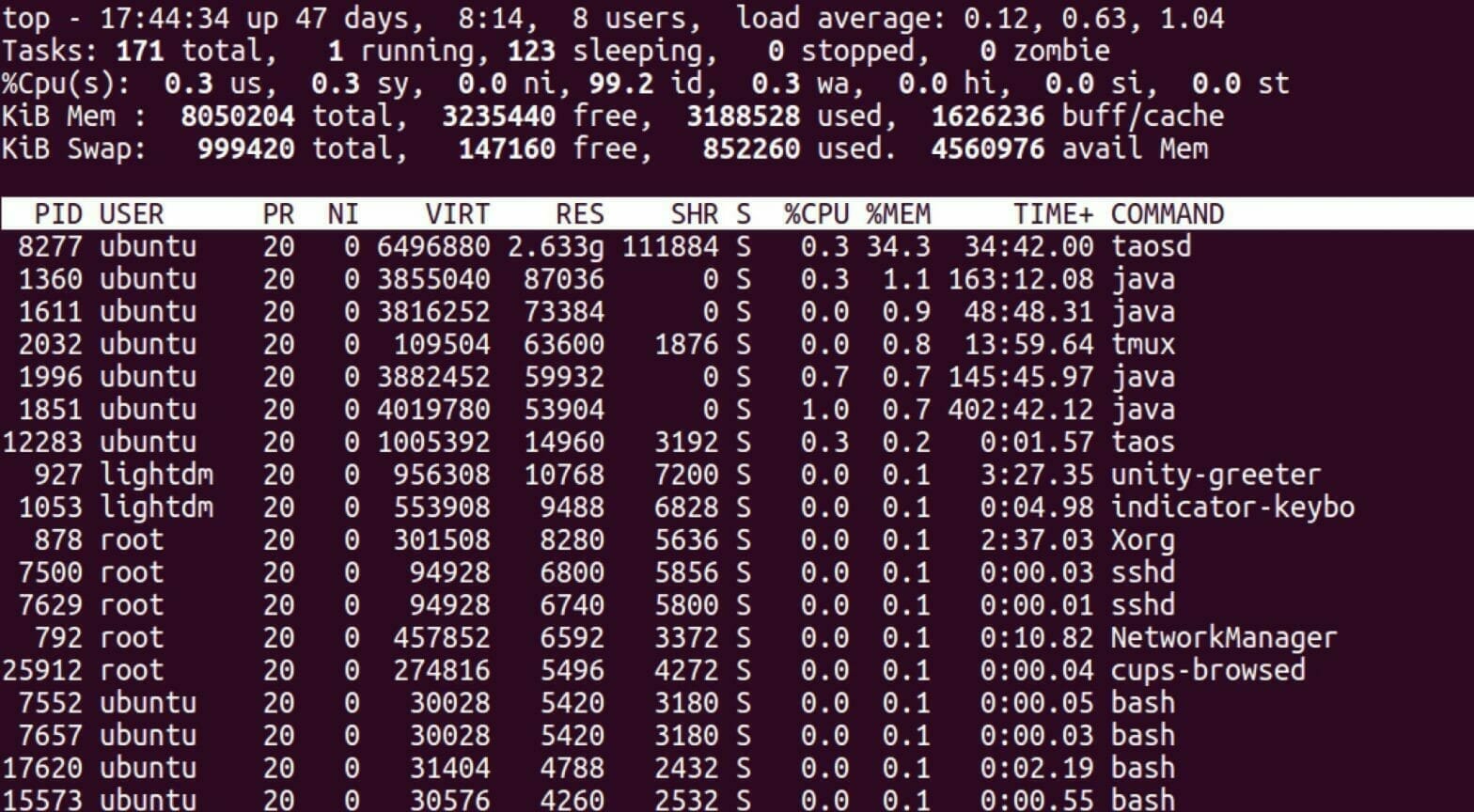

Usage of Hardware Resources

During the tests, the taosd service used about 2.7GB of RAM, and its CPU usage stayed very low.

Conclusion

TDengine is a high-performance, easy-to-deploy, and economical time series data processing engine that can be used in many IoT scenarios, including connected vehicle data processing. On a single node with a 2-core CPU and 8GB of RAM, TDengine achieved a write throughput of over one million records per second. IoT data such as connected vehicle data is mostly time series data collected by sensors, and in those scenarios purpose-built time series engines like TDengine are much better choices than traditional heavyweight Hadoop/Spark solutions.