With the explosive growth of time-series data in recent years, driven by increasing adoption of IoT technologies, processing this data has become a challenging task for enterprises across many industries. As traditional general-purpose databases and data historians are seldom able to handle the scale of modern time-series datasets, there is a trend toward deploying a purpose-built time-series database (TSDB) as a core part of the industrial data infrastructure. Given the requirements of time-series data processing, high scalability has become an essential property of a modern time-series database.

Distributed Design

In a distributed architecture, the components of a system are spread across multiple nodes instead of being centralized in a single location. The decoupling of compute and storage resources is particularly important in a time-series database context because it enables these components to be scaled independently and more quickly than a tightly coupled system.

Furthermore, by replicating data and services across multiple nodes, distributed systems can continue to function even if some of the nodes fail. This is essential for fault tolerance and disaster recovery. And by distributing processing tasks across multiple nodes, distributed systems can achieve better performance than centralized systems. This is because the workload is spread out, allowing each node to focus on a smaller subset of tasks, which can be completed more quickly. As time-series data platforms are often ingesting and processing large amounts of data 24 hours a day, they benefit greatly from the fault tolerance and enhanced performance provided by a distributed design.

Finally, distributed systems do not require custom, ultra-high-end servers or expensive and restrictive software licenses. Instead, they can make use of commodity hardware and open-source software, and for that reason can be built more cost-effectively than centralized systems.

Since version 3.0, TDengine features a fully distributed architecture in which tasks are divided among nodes in a cluster.

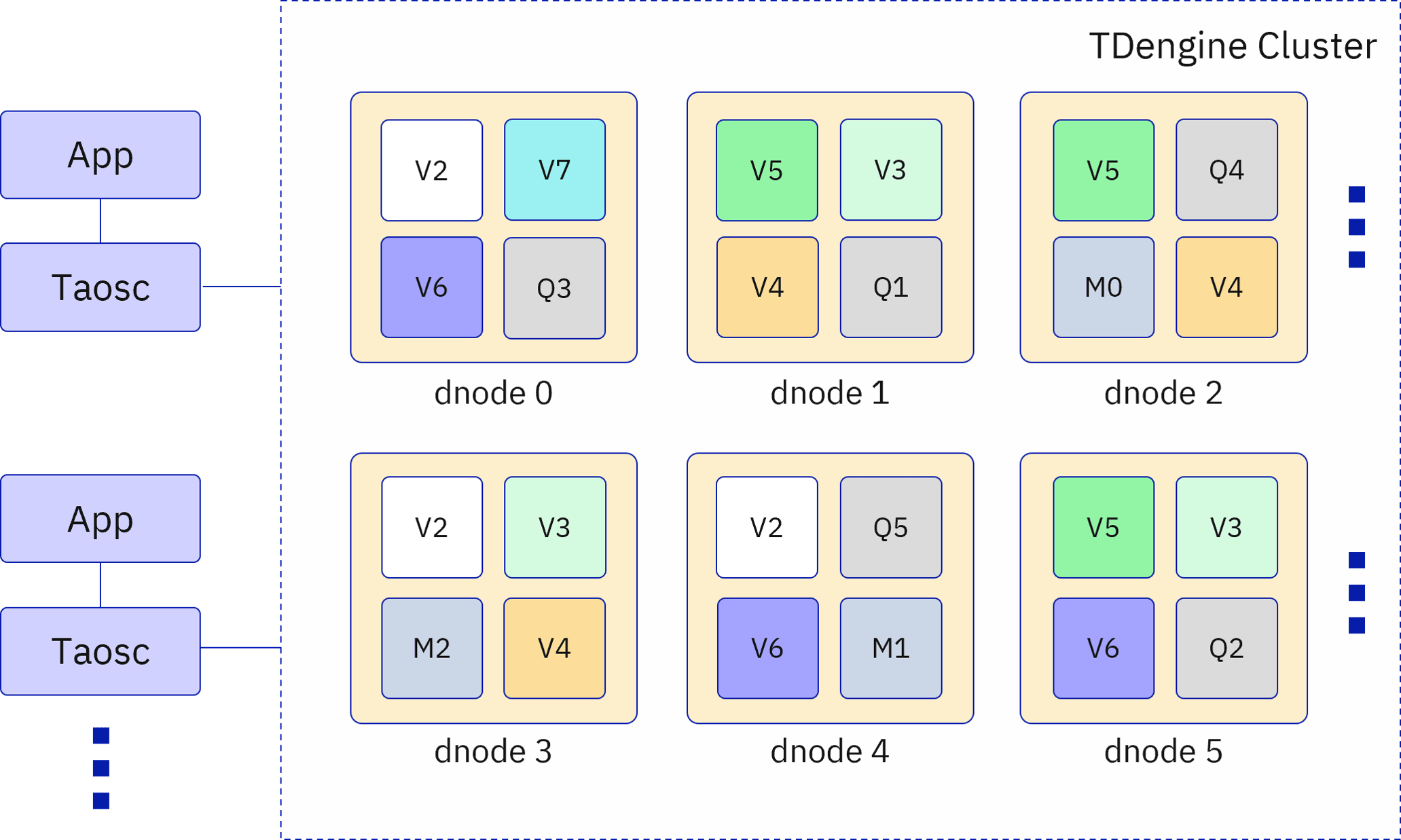

As shown in the figure, a TDengine cluster consists of one or more data nodes (dnodes). A dnode is an instance of the TDengine server running on a physical machine, virtual machine, or container. Each dnode is logically subdivided into virtual nodes (vnodes), query nodes (qnodes), stream nodes (snodes), and management nodes (mnodes), of which vnodes are used for storage and qnodes are used for compute. By deploying vnodes and qnodes on different dnodes, TDengine implements the separation of storage and compute: a fault occurring on a vnode does not affect any qnodes, and vice versa, and qnodes and vnodes can be scaled in or out separately.

Scalability

A high level of scalability ensures that systems and processes can accommodate increasing demand. This is facilitated by the distributed design mentioned above. Because workloads are processed by multiple decentralized nodes, it is easy to add more nodes to handle larger amounts of data without overloading any single node; likewise, it is easy to remove nodes in the event that requirements change and resources need to be reallocated.

Scalability is particularly important at this stage because time-series datasets are rapidly increasing in scale. As a business grows, the amount of data passing through its pipelines tends to grow with it, meaning that existing data infrastructure must be expanded to meet new business requirements. Scalability also helps to reduce the costs associated with expanding or upgrading data systems, because resources can be added incrementally as needed rather than in large and expensive blocks.

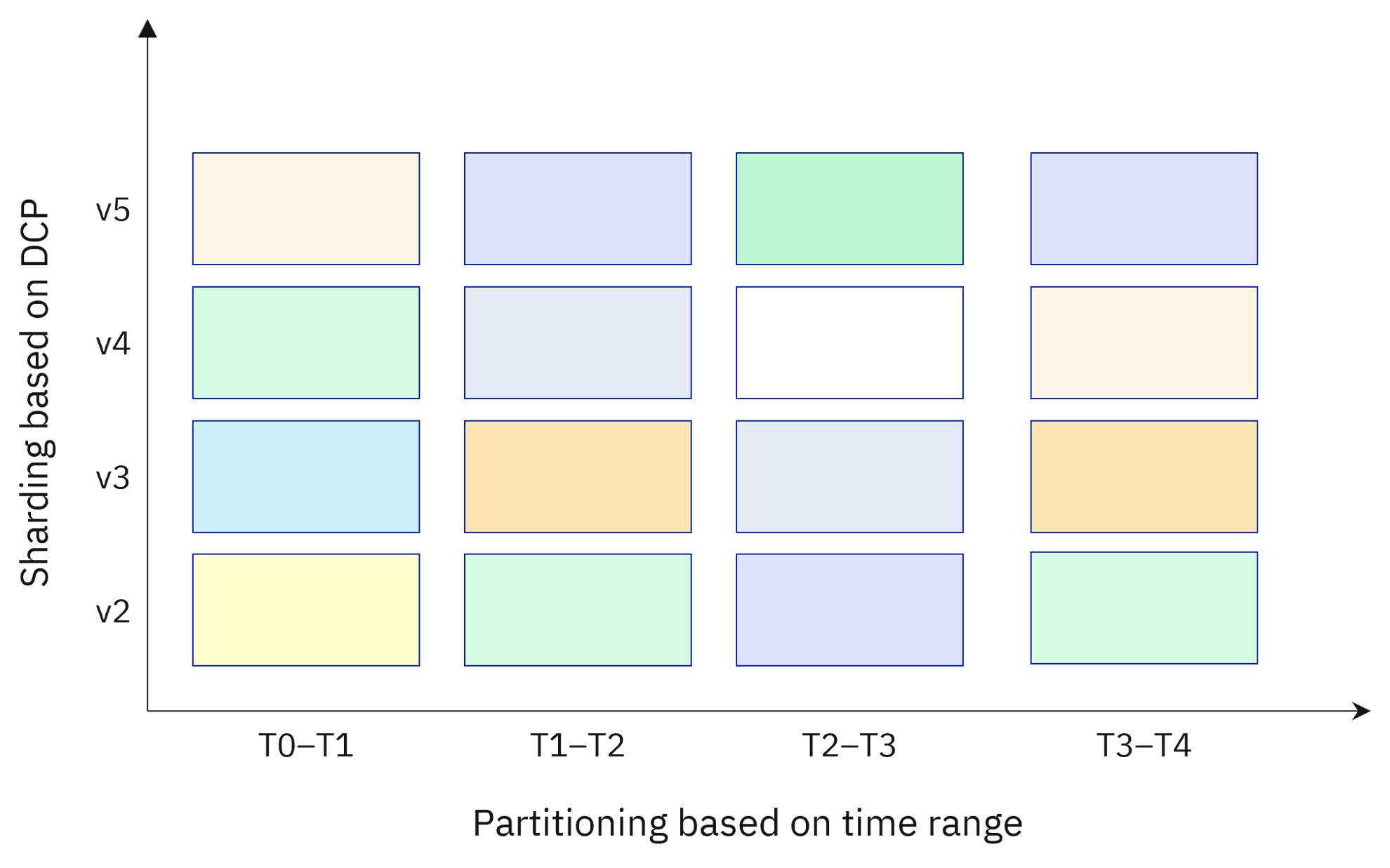

To achieve scalability for massive data sets, TDengine shards data by data collection point and partitions data by time.

In TDengine, all data from a single data collection point is stored on the same vnode, though each vnode can contain the data of multiple data collection points. The vnode stores the time-series data as well as the metadata, such as tags and schema, for the data collection point. Each data collection point is assigned to a specific vnode by consistent hashing, thereby sharding the data from multiple data collection points across different nodes.
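The assignment of data collection points to vnodes can be illustrated with a generic consistent-hash ring. This is a minimal sketch, not TDengine's actual implementation: the source does not describe the hash function or ring layout, so the `HashRing` class, the `points_per_vnode` parameter, the MD5-based hash, and the table and vnode names are all assumptions made for illustration.

```python
import bisect
import hashlib


def stable_hash(key: str) -> int:
    # Python's built-in hash() is randomized per process, so use a stable digest.
    return int.from_bytes(hashlib.md5(key.encode("utf-8")).digest()[:8], "big")


class HashRing:
    """Hypothetical consistent-hash ring mapping table names to vnodes."""

    def __init__(self, vnodes, points_per_vnode=64):
        # Place each vnode at several points on the ring to even out the load.
        self._ring = sorted(
            (stable_hash(f"{v}#{i}"), v)
            for v in vnodes
            for i in range(points_per_vnode)
        )
        self._points = [point for point, _ in self._ring]

    def vnode_for(self, table_name: str) -> str:
        # Walk clockwise to the first ring point at or after the key's hash,
        # wrapping around to the start of the ring if necessary.
        idx = bisect.bisect_left(self._points, stable_hash(table_name))
        return self._ring[idx % len(self._ring)][1]


ring = HashRing(["vnode-1", "vnode-2", "vnode-3"])
placement = {t: ring.vnode_for(t) for t in ("meter_001", "meter_002", "meter_003")}
```

Because a table name always hashes to the same ring position, every write and query for that data collection point lands on the same vnode. When a vnode is added to a ring like this one, only the tables that fall on the new vnode's ring points move; the rest stay where they are.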

In addition to sharding the data, TDengine partitions it by time period. The data for each time period is stored together, and the data of different time periods cannot overlap. This time period is a configurable value of one or multiple days. Partitioning data by time also enables efficient implementation of data retention policies as well as tiered storage.
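To see why time partitioning makes retention cheap, consider the following sketch. It assumes fixed-width partitions measured in whole days and timestamps in milliseconds; the function names and the `duration_days` and `keep_days` parameters are hypothetical and chosen only for this example.

```python
MS_PER_DAY = 86_400_000


def partition_id(ts_ms: int, duration_days: int) -> int:
    # Every timestamp falls into exactly one fixed-width time partition,
    # so partitions never overlap.
    return ts_ms // (duration_days * MS_PER_DAY)


def expired_partitions(partition_ids, now_ms, duration_days, keep_days):
    # A partition can be dropped as a whole once its end time is at or
    # before the retention cutoff; no row-by-row deletion is needed.
    cutoff = now_ms - keep_days * MS_PER_DAY
    span = duration_days * MS_PER_DAY
    return [p for p in partition_ids if (p + 1) * span <= cutoff]


# With 10-day partitions and 10 days of retention, on day 30 the first
# two partitions (days 0-9 and 10-19) are entirely past the cutoff.
old = expired_partitions([0, 1, 2], now_ms=30 * MS_PER_DAY,
                         duration_days=10, keep_days=10)
```

The same partition boundaries could also drive tiered storage: instead of dropping an aging partition, a system could move it as a unit to cheaper storage.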

TDengine also enhances scalability by resolving the issue of high cardinality. In TDengine, the metadata for each data collection point is stored on the vnode assigned to that data collection point instead of in a centralized location. When an application inserts data points into a specific table or queries data in a specific table, the request is sent directly to the appropriate vnode. For aggregation over multiple tables, the query request is sent to the corresponding vnodes, which perform the required operation, and then the qnode aggregates the query results from all vnodes involved. This allows TDengine to deliver high performance even as the cardinality of a dataset increases.
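The multi-table aggregation described above follows a scatter-gather pattern, sketched here for an average. The function names and the sample readings are assumptions for illustration; the source does not specify the wire format of the partial results.

```python
def vnode_partial_avg(values):
    # Runs on each vnode: reduce local rows to a small partial result
    # (sum, count) instead of shipping every raw row.
    return (sum(values), len(values))


def qnode_merge_avg(partials):
    # Runs on the qnode: combine the partial results from all vnodes.
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count if count else None


# Hypothetical readings held by two vnodes.
rows_by_vnode = {
    "vnode-1": [1.0, 2.0, 3.0],
    "vnode-2": [5.0],
}
partials = [vnode_partial_avg(rows) for rows in rows_by_vnode.values()]
average = qnode_merge_avg(partials)  # (6.0 + 5.0) / (3 + 1) = 2.75
```

Note that the merge step works on sums and counts rather than averaging the per-vnode averages, which would give the wrong answer when vnodes hold different numbers of rows.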

Elasticity

Elasticity refers to the ability of a system to dynamically provision and deprovision resources based on changes in demand. Automating the process of scaling resources up or down on an as-needed basis enables data systems to handle sudden spikes in workload and to accommodate growth over time while maintaining optimal performance and avoiding downtime. This builds on the scalability mentioned previously and takes it one step further into the cloud.

With elasticity, your time-series database can respond quickly to changing business needs, opportunities, or challenges. You can launch new services, products, and applications quickly and efficiently without worrying about resource constraints, because additional nodes are deployed on demand to ensure adequate performance. You can also match resource consumption to actual demand in real time: using only the resources that are required at a particular moment prevents overprovisioning and unnecessary costs.

To support storage elasticity, TDengine automatically splits or combines vnodes based on the current workload. If write latency reaches a specified threshold, TDengine can split vnodes so that more system resources are allocated for data ingestion. Conversely, if the system can guarantee latency and performance with fewer vnodes, TDengine may combine multiple vnodes into a single vnode to save resources.
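The split-or-combine decision can be pictured as a simple threshold rule. The sketch below is purely illustrative: the function name, the threshold values, and the use of a single latency figure are assumptions, and the source does not describe how TDengine actually makes this decision.

```python
def plan_vnode_action(write_latency_ms, vnode_count,
                      split_threshold_ms=50.0, combine_threshold_ms=10.0):
    # Hypothetical policy: split when ingestion is struggling, combine
    # when there is ample headroom, otherwise leave the layout alone.
    if write_latency_ms >= split_threshold_ms:
        return "split"
    if write_latency_ms <= combine_threshold_ms and vnode_count > 1:
        return "combine"
    return "keep"


decisions = [
    plan_vnode_action(80.0, vnode_count=2),  # latency too high
    plan_vnode_action(5.0, vnode_count=4),   # plenty of headroom
    plan_vnode_action(30.0, vnode_count=4),  # between the thresholds
]
```

Keeping a gap between the two thresholds avoids oscillation, where a vnode is split and then immediately recombined as latency hovers around a single cutoff.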

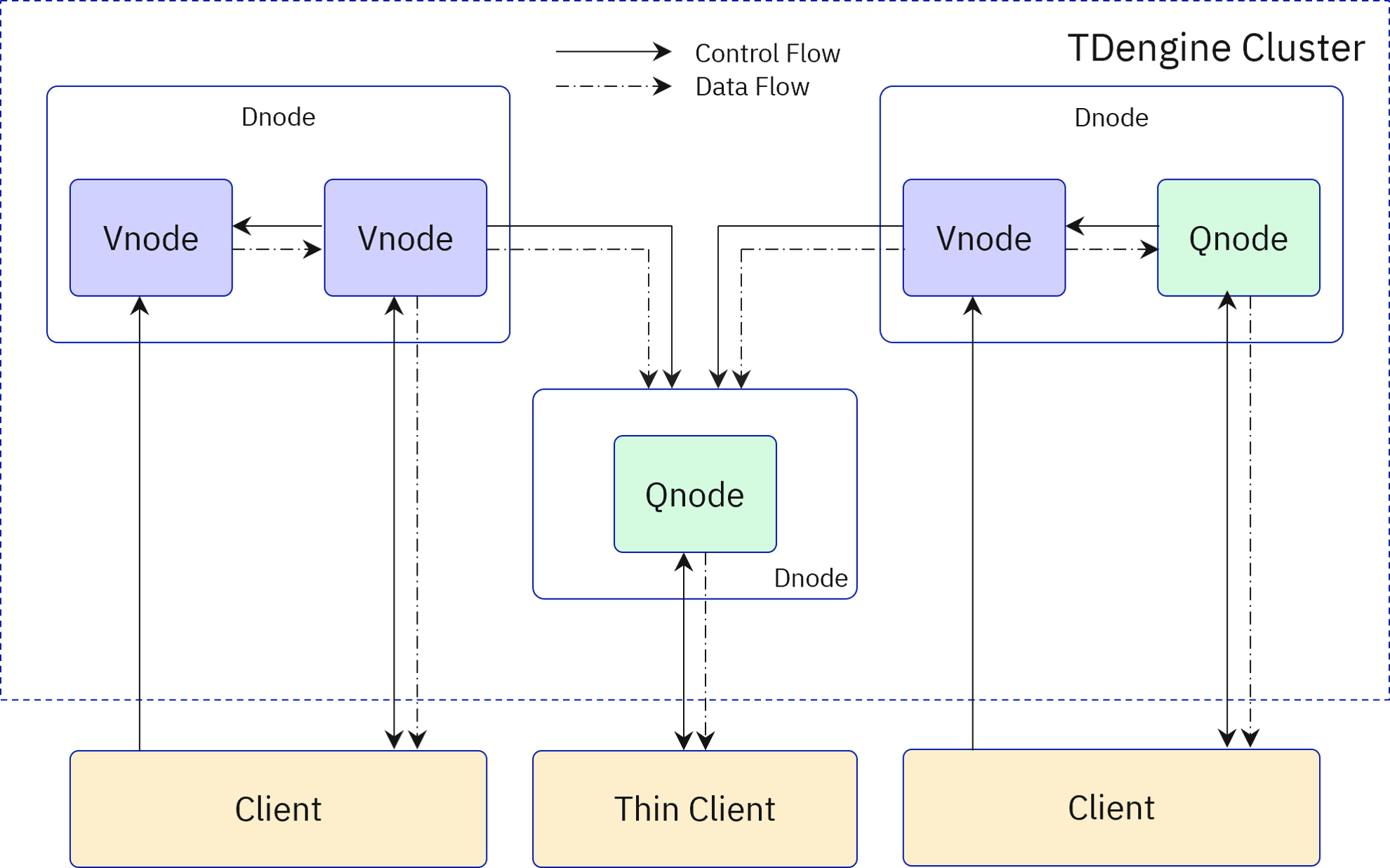

For compute elasticity, TDengine makes use of the qnode. While simple queries such as fetching raw data or rollup data can still be performed on a vnode, queries that require sorting, grouping, or other compute-intensive operations are performed on a qnode. Qnodes can run in containers and can be started or stopped dynamically based on the system workload. With qnodes, TDengine is well suited to time-series data analytics, including real-time analytics and batch processing, because compute resources can be scaled elastically in a cloud environment.

Resilience

Modern software design understands that problems will occur and provides resilience to recover quickly from faults and ensure business continuity. High availability and high reliability are key components of resilience.

For a time-series database, high availability is achieved by replicating data across multiple nodes; if one node fails, another can take its place and the database can continue to provide services. The database system must ensure appropriate data consistency and have a mechanism for establishing consensus. To implement high reliability, a traditional write-ahead log (WAL) is still an excellent option.

With a highly available and highly reliable time-series data platform, you can be sure that your data is accurate and that it can be used by your applications when you need it. In addition, this kind of resilience can help reduce costs associated with disruptions by minimizing downtime and reducing the need for recovery efforts.

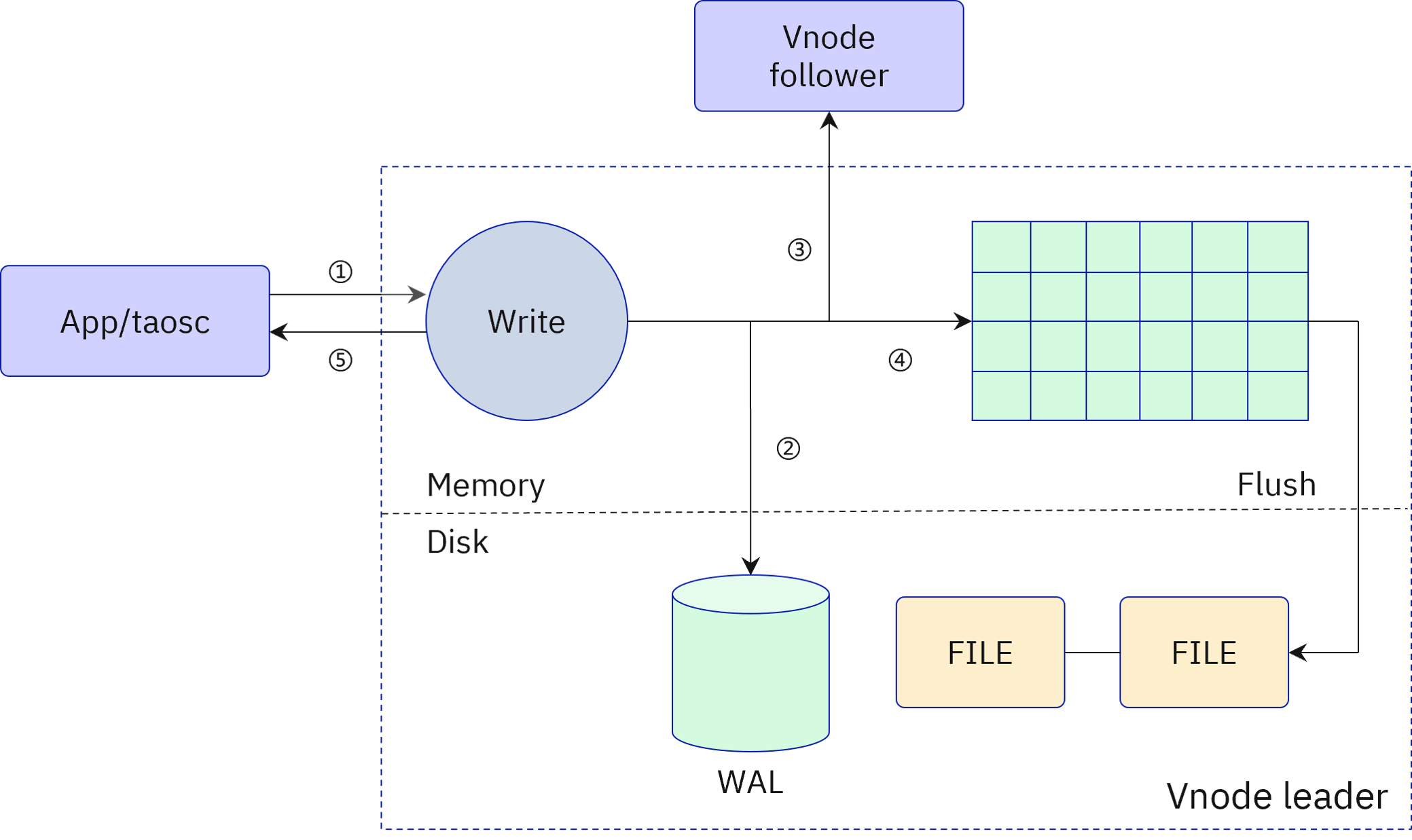

TDengine provides resilience through its high reliability and high availability design. For any database, storage reliability is the top priority. TDengine uses the traditional write-ahead log (WAL) to guarantee that data can be recovered even if a node crashes. Incoming data points are always written into the WAL before TDengine sends an acknowledgement to the application.
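The write-ahead principle can be shown with a toy log. This is a minimal sketch of the general technique, not TDengine's WAL: the `TinyWal` class, the JSON-lines file format, and the in-memory `table` dictionary are all assumptions made for the example.

```python
import json
import os


class TinyWal:
    """Toy write-ahead log: persist first, apply second, acknowledge last."""

    def __init__(self, path):
        self.path = path
        self.table = {}  # in-memory state: timestamp -> value
        self._replay()
        self._log = open(path, "a", encoding="utf-8")

    def _replay(self):
        # After a crash, rebuild the in-memory state from the log.
        if not os.path.exists(self.path):
            return
        with open(self.path, encoding="utf-8") as f:
            for line in f:
                record = json.loads(line)
                self.table[record["ts"]] = record["value"]

    def write(self, ts, value):
        # 1. Append the record to the log and force it to disk.
        self._log.write(json.dumps({"ts": ts, "value": value}) + "\n")
        self._log.flush()
        os.fsync(self._log.fileno())
        # 2. Only then apply it in memory and acknowledge the caller.
        self.table[ts] = value
        return True

    def close(self):
        self._log.close()
```

If the process dies after step 1 but before the acknowledgement, the record is still recovered on restart by `_replay`, so an acknowledged write is never lost. A production WAL would also need to handle partially written records and log truncation, which this sketch omits.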

TDengine provides high availability through data replication for both vnodes and mnodes. Furthermore, vnodes on different dnodes can form a virtual node group (vgroup). The data in each vgroup is synchronized through the Raft consensus algorithm to ensure its consistency. Data writes can only be performed on the leader node, but queries can be performed on both the leader and followers simultaneously. If the leader node fails, the system automatically selects a new leader node and continues to provide services, ensuring high availability.
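Raft relies on majority agreement: a log entry is committed once a majority of the replicas in the group have stored it, and a leader can be elected only with votes from a majority. The arithmetic below shows what that implies for group sizes. The function names are hypothetical, and the three-replica group is an assumed example rather than a stated TDengine default.

```python
def quorum(replicas: int) -> int:
    # Smallest number of replicas that forms a majority.
    return replicas // 2 + 1


def tolerated_failures(replicas: int) -> int:
    # Replicas that can be down while a majority is still reachable.
    return replicas - quorum(replicas)


def is_committed(acks: int, replicas: int) -> bool:
    # An entry is committed once a majority (leader included) has stored it.
    return acks >= quorum(replicas)


# Assumed example: a group with three replicas.
three_replica_quorum = quorum(3)               # 2
three_replica_slack = tolerated_failures(3)    # 1
```

This is why replica groups typically use an odd number of members: going from three replicas to four raises the quorum to three but still tolerates only one failure.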

For metadata, a TDengine cluster can include three mnodes that also use Raft to maintain consistency. TDengine implements strong data consistency for metadata; however, for performance reasons, eventual consistency is used for time-series data.

Automation

Automation is a critical component of infrastructure and application management. For a time-series database to be truly scalable, its deployment, management, and scaling itself must be supported by automated processes.

Automation also ties into resilience and scalability: automated failover and disaster recovery mechanisms enhance the resilience of systems, while automated provisioning and deprovisioning of infrastructure resources enhances their scalability.

Going further, automation enables consistency across environments by enforcing unified policies and configurations across all components of the system. With a time-series database that supports a high level of automation, you can be sure that your nodes and infrastructure are configured correctly and consistently, reducing the risk of errors or security vulnerabilities. It also greatly enhances portability, enabling deployment across various cloud platforms in addition to on-premises and hybrid environments.

TDengine can be deployed in a Kubernetes environment using standard procedures, either with a Helm chart or with the kubectl tool. After you configure TDengine as a StatefulSet in Kubernetes, each node in your cluster is created as a pod. You can then use Kubernetes to scale your cluster in or out on demand, in addition to other operations and management tasks.

Observability

Observability provides a comprehensive view of system performance and behavior, allowing you to detect problems quickly and address them before they cause significant downtime or service disruptions. Given the critical nature of the time-series database in the overall data infrastructure, observability is an indispensable characteristic for identifying and addressing bottlenecks, optimizing resource utilization, and improving system performance and reliability.

A time-series database must integrate with observability systems to enable real-time visibility into system behavior. This integration lets enterprises not only optimize system performance, but also maintain compliance and improve customer satisfaction due to decreased downtime.

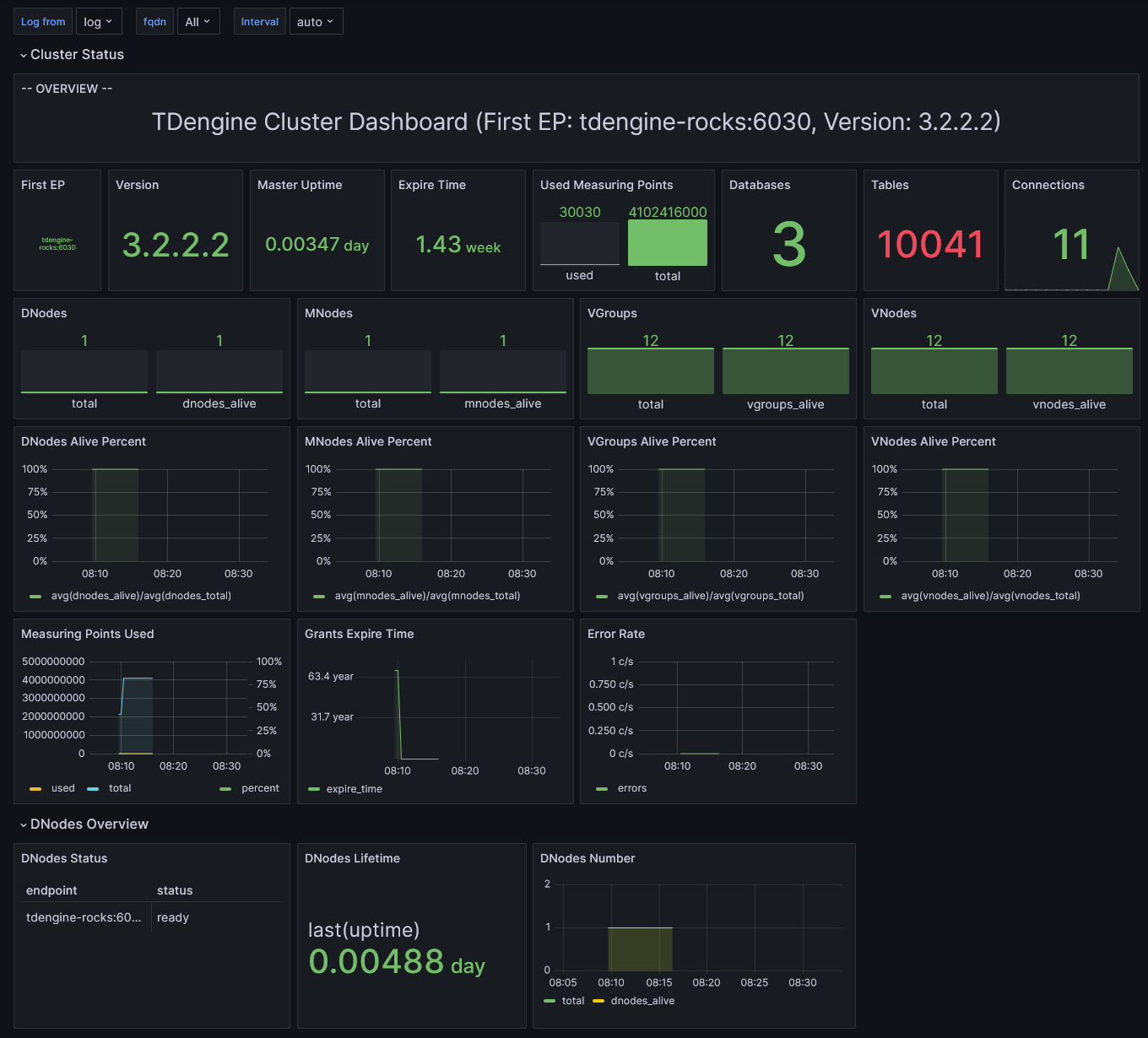

TDengine monitors the system status by collecting metrics such as CPU, memory, and disk usage in addition to database-specific metrics like slow queries. The taosKeeper component of TDengine can send the metrics to other monitoring tools like Prometheus, allowing TDengine to be integrated with existing observability systems. In addition, the TDinsight component integrates with Grafana, providing a dashboard for visualization and alerting.

Conclusion

Considering the growing size of datasets across industries, modern time-series databases must be highly scalable today to meet the business requirements of tomorrow. A distributed design is necessary to build the scalable, fault-tolerant, and high-performance systems that are required for handling time-series datasets. And by leveraging cloud-native technologies and principles in their data infrastructure, enterprises can achieve better business outcomes, faster time-to-market, higher customer satisfaction, and increased revenue.

Through its natively distributed design, data sharding and partitioning, separation of compute and storage, Raft for data consistency, and more, TDengine provides the scalability, elasticity, and resilience needed for time-series data processing. By supporting containers, Kubernetes, comprehensive monitoring metrics, and automation scripts, TDengine can be deployed and run on public, private or hybrid clouds as a cloud-native solution. With a scalable time-series database like TDengine, your systems can handle the demands of modern computing and provide reliable, high-quality services to customers around the world.