Introduction

In the last decade, and especially in the post-COVID world with the growing ramifications of climate change, digital transformation has taken center stage as companies strive to become resilient to supply chain shocks, power outages and resource shortages. Optimizing manufacturing is a large part of this, as evidenced by the rise in projects related to Industry 4.0 and IIoT and the increasing use of ML and robotics. This has also led to a rapid increase in time-series data that needs to be ingested at very high frequencies, stored, analyzed and streamed to ML pipelines and to geographically dispersed stakeholders.

This time-series data needs to be stored in purpose-built platforms that are equally resilient and can be deployed on highly available and scalable infrastructure.

TDengine: Natively Distributed and Scalable

TDengine, a time-series database purpose-built for Industry 4.0 and Industrial IoT, is easily deployed on any platform to ensure high availability and scalability. In this blog we specifically show how TDengine can be deployed in a cluster of VMs and remain operational even with the loss of a node.

Firstly, it is important to note that the logical architecture of TDengine is itself designed to be distributed, highly available and scalable. It will be useful if you read about the distributed architecture of TDengine first, in order to follow this blog. Specifically, it will help to know the concepts of “dnodes”, “vnodes” and “mnodes”.

Setting up a VM Cluster

For the purposes of this blog, I set up 3 Ubuntu VMs in VirtualBox locally, and demonstrate how to set up a TDengine cluster for high availability.

If you already have other virtualization software, you can, of course, use that software and skip this section.

Install VirtualBox

Firstly, you must install VirtualBox. Please follow the instructions for your particular host operating system.

Create a NAT Network

Create VMs

For this blog we chose to create Ubuntu 22 VMs.

For each VM we allocated 2 GB of RAM and 1 CPU.

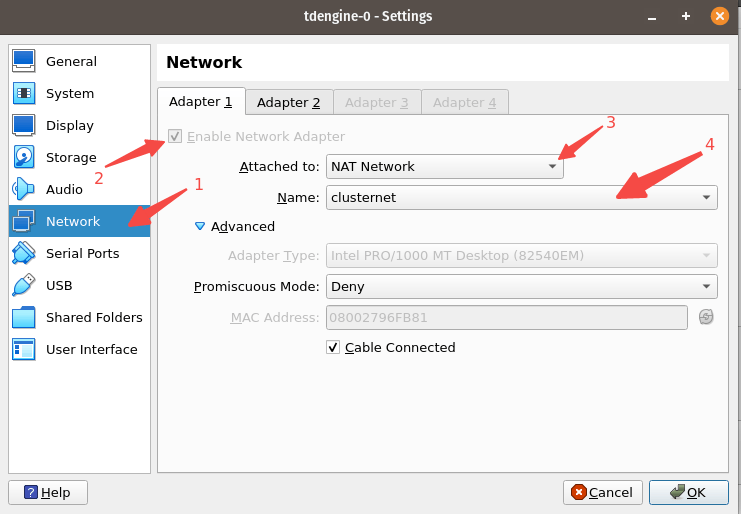

We also created network adapters as follows in each VM.

Make sure that you choose the NAT network that you created in the previous step.

See the Ubuntu website for information on how to create an Ubuntu VM in VirtualBox.

Update /etc/hosts files on each VM

You can run the following to get the IP address of each node.

tdengine@tdc01:~$ ifconfig

enp0s3: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.0.2.4 netmask 255.255.255.0 broadcast 10.0.2.255

inet6 fe80::77b9:e21f:2466:22b2 prefixlen 64 scopeid 0x20<link>

ether 08:00:27:96:fb:81 txqueuelen 1000 (Ethernet)

RX packets 20760 bytes 4686683 (4.6 MB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 10612 bytes 3444110 (3.4 MB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Set the hostname on each node if you have not already done so.

sudo hostnamectl set-hostname <hostname>

Now you can add the hostname and IP address of each node to the /etc/hosts file on each node.

It should look something like the following. In my case, I’ve named the nodes tdc01, tdc02 and tdc03 and their IP addresses are dynamically assigned by the VirtualBox NAT Network that you created earlier. You can also choose static IP addresses if you want.

127.0.0.1 localhost

10.0.2.4 tdc01

10.0.2.5 tdc03

10.0.2.6 tdc02

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

You should also ensure that each of the nodes can ping the other nodes using the hostnames that you have given them, after you edit the /etc/hosts files on each node.
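Before pinging, it can help to confirm that every node name actually has an entry in the hosts file. The following is a minimal sketch, not part of TDengine: the function names `parse_hosts` and `missing_nodes` are hypothetical, and the sample text is copied from the listing above.

```python
def parse_hosts(text):
    """Map every hostname in hosts-file text to its IP address."""
    mapping = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # ignore comments and blank lines
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping[name] = ip
    return mapping


def missing_nodes(text, nodes):
    """Return the node names that have no entry in the hosts-file text."""
    mapping = parse_hosts(text)
    return [node for node in nodes if node not in mapping]


# Sample entries copied from the /etc/hosts listing in this post.
SAMPLE_HOSTS = """\
127.0.0.1 localhost
10.0.2.4 tdc01
10.0.2.5 tdc03
10.0.2.6 tdc02
# The following lines are desirable for IPv6 capable hosts
::1 ip6-localhost ip6-loopback
"""

# An empty list means every cluster node has an entry.
print(missing_nodes(SAMPLE_HOSTS, ["tdc01", "tdc02", "tdc03"]))
```

To check a real node, you could pass the contents of /etc/hosts instead of the sample string. This only verifies that the entries exist; a ping is still needed to confirm that the nodes can reach each other.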

Deploy and start the cluster

Now we can start the process of creating and deploying a TDengine HA cluster.

Install the latest TDengine version on your first node

On this node you will leave the FQDN blank since you are setting up the cluster. This node will be the first EP (first End Point) of the cluster.

tdengine@tdc01:~/downloads$ sudo dpkg --install TDengine-server-3.1.0.0-Linux-x64.deb

Selecting previously unselected package tdengine.

(Reading database ... 186303 files and directories currently installed.)

Preparing to unpack TDengine-server-3.1.0.0-Linux-x64.deb ...

Unpacking tdengine (3.1.0.0) ...

Setting up tdengine (3.1.0.0) ...

./post.sh: line 107: /var/log/taos/tdengine_install.log: No such file or directory

Start to install TDengine...

./post.sh: line 107: /var/log/taos/tdengine_install.log: No such file or directory

System hostname is: tdc01

Enter FQDN:port (like h1.taosdata.com:6030) of an existing TDengine cluster node to join

OR leave it blank to build one:

Enter your email address for priority support or enter empty to skip:

Created symlink /etc/systemd/system/multi-user.target.wants/taosd.service → /etc/systemd/system/taosd.service.

To configure TDengine : edit /etc/taos/taos.cfg

To start TDengine : sudo systemctl start taosd

To access TDengine : taos -h tdc01 to login into TDengine server

TDengine is installed successfully!

Processing triggers for man-db (2.10.2-1) ...

Install TDengine on the remaining nodes

When you install TDengine on the remaining nodes, you must enter the hostname:port of the first node. In my case, it is: tdc01:6030 as shown below.

System hostname is: tdc02

Enter FQDN:port (like h1.taosdata.com:6030) of an existing TDengine cluster node to join

OR leave it blank to build one:tdc01:6030

Enter your email address for priority support or enter empty to skip:

Created symlink /etc/systemd/system/multi-user.target.wants/taosd.service → /etc/systemd/system/taosd.service.

To configure TDengine : edit /etc/taos/taos.cfg

To start TDengine : sudo systemctl start taosd

To access TDengine : taos -h tdc01 -P 6030 to login into cluster, then

execute : create dnode 'newDnodeFQDN:port'; to add this new node

TDengine is installed successfully!

Processing triggers for man-db (2.10.2-1) ...

Add the nodes to the cluster

Please ensure that the taosd service is running on each node.

To start taosd you can use the following:

sudo systemctl start taosd

Then start the taos CLI on any node and add the nodes as follows:

taos> create dnode 'tdc02:6030';

Create OK, 0 row(s) affected (0.009944s)

taos> show dnodes;

id | endpoint | vnodes | support_vnodes | status | create_time | reboot_time | note |

====================================================================================================================================================================

1 | tdc01:6030 | 0 | 4 | ready | 2023-09-04 18:58:10.391 | 2023-09-04 18:58:10.367 | |

2 | tdc02:6030 | 0 | 4 | ready | 2023-09-04 19:01:21.746 | 2023-09-04 19:00:18.628 | |

Query OK, 2 row(s) in set (0.003692s)

taos> create dnode 'tdc03:6030';

Create OK, 0 row(s) affected (0.010349s)

taos> show dnodes;

id | endpoint | vnodes | support_vnodes | status | create_time | reboot_time | note |

====================================================================================================================================================================

1 | tdc01:6030 | 0 | 4 | ready | 2023-09-04 18:58:10.391 | 2023-09-04 18:58:10.367 | |

2 | tdc02:6030 | 0 | 4 | ready | 2023-09-04 19:01:21.746 | 2023-09-04 19:00:18.628 | |

3 | tdc03:6030 | 0 | 4 | ready | 2023-09-04 19:02:48.980 | 2023-09-04 19:02:40.144 | |

Query OK, 3 row(s) in set (0.001831s)

Check the number of mnodes

If you read the documentation on the distributed logical architecture of TDengine, you may recall that an “mnode” is a logical unit, part of taosd, that manages and monitors the running status of all data nodes (dnodes) and also manages and stores metadata. By default only 1 mnode is created.

taos> show mnodes;

id | endpoint | role | status | create_time | role_time |

==============================================================================================================================

1 | tdc01:6030 | leader | ready | 2023-09-04 18:58:10.393 | 2023-09-04 18:58:10.385 |

Query OK, 1 row(s) in set (0.002263s)

Add mnodes

For HA, we must add 2 more mnodes on dnodes 2 and 3.

taos> create mnode on dnode 2;

Create OK, 0 row(s) affected (4.062746s)

taos> show mnodes;

id | endpoint | role | status | create_time | role_time |

==============================================================================================================================

1 | tdc01:6030 | leader | ready | 2023-09-04 18:58:10.393 | 2023-09-04 18:58:10.385 |

2 | tdc02:6030 | follower | ready | 2023-09-04 20:23:33.318 | 2023-09-04 20:23:37.365 |

Query OK, 2 row(s) in set (0.002233s)

taos> create mnode on dnode 3;

Create OK, 0 row(s) affected (4.077106s)

taos> show mnodes;

id | endpoint | role | status | create_time | role_time |

==============================================================================================================================

1 | tdc01:6030 | leader | ready | 2023-09-04 18:58:10.393 | 2023-09-04 18:58:10.385 |

2 | tdc02:6030 | follower | ready | 2023-09-04 20:23:33.318 | 2023-09-04 20:23:37.365 |

3 | tdc03:6030 | follower | ready | 2023-09-04 20:24:38.435 | 2023-09-04 20:24:42.501 |

Query OK, 3 row(s) in set (0.004268s)

Demonstrate High Availability

Now we use the tool taosBenchmark to create a database. By default taosBenchmark will create a very large database with 100 million rows, but for the purposes of this blog we create a small database to show how HA works.

Note that you must install taosTools in order to have taosBenchmark installed.

Create a small database of simulated Smart Meter data

tdengine@tdc01:~$ taosBenchmark -I stmt -d test -n 100 -t 100 -a 3

[09/04 20:28:19.612119] INFO: client version: 3.1.0.0

Press enter key to continue or Ctrl-C to stop

failed to create dir:/var/log/taos/ since Operation not permitted WARING: Create taoslog failed:Operation not permitted. configDir=/etc/taos/

[09/04 20:28:27.559290] INFO: command to create database: <CREATE DATABASE IF NOT EXISTS test VGROUPS 1 replica 3 PRECISION 'ms';>

[09/04 20:28:27.569557] SUCC: created database (test)

[09/04 20:28:27.575220] WARN: failed to run command DESCRIBE `test`.`meters`, code: 0x80002603, reason: Table does not exist

[09/04 20:28:27.575403] INFO: stable meters does not exist, will create one

[09/04 20:28:27.575465] INFO: create stable: <CREATE TABLE test.meters (ts TIMESTAMP,current float,voltage int,phase float) TAGS (groupid int,location binary(24))>

[09/04 20:28:27.582501] INFO: generate stable<meters> columns data with lenOfCols<80> * prepared_rand<10000>

[09/04 20:28:27.593078] INFO: generate stable<meters> tags data with lenOfTags<62> * childTblCount<100>

[09/04 20:28:27.598267] INFO: start creating 100 table(s) with 8 thread(s)

.

.

.

.

.

[09/04 20:28:29.828752] INFO: Total 100 tables on bb test's vgroup 0 (id: 2)

[09/04 20:28:29.833427] INFO: Estimate memory usage: 3.93MB

Press enter key to continue or Ctrl-C to stop

[09/04 20:28:37.095207] INFO: pthread_join 0 ...

[09/04 20:28:37.201477] SUCC: thread[0] progressive mode, completed total inserted rows: 10000, 97145.85 records/second

[09/04 20:28:37.201584] SUCC: Spent 0.106339 seconds to insert rows: 10000 with 1 thread(s) into test 94038.88 records/second

[09/04 20:28:37.201593] SUCC: insert delay, min: 0.4890ms, avg: 1.0294ms, p90: 1.3420ms, p95: 3.5180ms, p99: 11.9660ms, max: 11.9660ms

Note that you will have to press the “Enter” key when prompted and there will be several lines of output. We are only displaying the first few lines and the last few lines.
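The 10000 rows reported in the output follow from the command line: 100 tables times 100 rows per table. The sketch below works that out from the command string, assuming (as the output above suggests) that `-t` is the number of tables and `-n` is the number of rows per table. The helper names `flag_values` and `expected_rows` are hypothetical and are not part of taosBenchmark.

```python
import shlex


def flag_values(command):
    """Pair each '-x' style flag in the command with the token that follows it."""
    tokens = shlex.split(command)
    return {
        tokens[i]: tokens[i + 1]
        for i in range(1, len(tokens) - 1)
        if tokens[i].startswith("-")
    }


def expected_rows(command):
    """Tables (-t) times rows per table (-n); the flag meanings are an assumption."""
    flags = flag_values(command)
    return int(flags["-t"]) * int(flags["-n"])


# The exact command used in this post.
COMMAND = "taosBenchmark -I stmt -d test -n 100 -t 100 -a 3"
print(expected_rows(COMMAND))
```

The result can be compared with the row count returned by the query in the next step.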

Check the number of rows in the database

taos> select count(*) from test.meters;

count(*) |

========================

10000 |

Query OK, 1 row(s) in set (0.020480s)

See the distribution of vgroups

Vnodes on different data nodes can form a virtual node group to ensure the high availability of the system.

As you can see below, there is one vgroup.

taos> show test.vgroups\G;

*************************** 1.row ***************************

vgroup_id: 2

db_name: test

tables: 100

v1_dnode: 1

v1_status: leader

v2_dnode: 2

v2_status: follower

v3_dnode: 3

v3_status: follower

v4_dnode: NULL

v4_status: NULL

cacheload: 0

cacheelements: 0

tsma: 0

Query OK, 1 row(s) in set (0.005120s)

Create a new database with 3 replicas to test HA

taos> create database if not exists testha replica 3;

Create OK, 0 row(s) affected (6.371307s)

taos> show databases;

name |

=================================

information_schema |

performance_schema |

test |

testha |

Query OK, 4 row(s) in set (0.002791s)

Create a table named t1 and insert some data into it.

taos> use testha;

Database changed.

taos> create table if not exists t1(ts timestamp, n int);

Create OK, 0 row(s) affected (0.001430s)

taos> insert into t1 values(now, 1)(now+1s, 2)(now +2s,3)(now+3s,4);

Insert OK, 4 row(s) affected (0.005576s)

taos> select * from t1;

ts | n |

========================================

2023-09-04 20:42:00.449 | 1 |

2023-09-04 20:42:01.449 | 2 |

2023-09-04 20:42:02.449 | 3 |

2023-09-04 20:42:03.449 | 4 |

Query OK, 4 row(s) in set (0.001884s)

Check online dnodes

taos> show dnodes;

id | endpoint | vnodes | support_vnodes | status | create_time | reboot_time | note |

===================================================================================================================================================================

1 | tdc01:6030 | 3 | 4 | ready | 2023-09-04 18:58:10.391 | 2023-09-04 18:58:10.367 | |

2 | tdc02:6030 | 3 | 4 | ready | 2023-09-04 19:01:21.746 | 2023-09-04 19:00:18.628 | |

3 | tdc03:6030 | 3 | 4 | ready | 2023-09-04 19:02:48.980 | 2023-09-04 19:02:40.144 | |

Query OK, 3 row(s) in set (0.004388s)

RAFT Protocol

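TDengine uses the RAFT protocol for data replication. With N nodes, the RAFT protocol needs a quorum of (N/2)+1 nodes to keep working and can tolerate the failure of (N-1)/2 nodes, using integer division in both cases. For the 3-node cluster in this blog, the quorum is 2 nodes and the cluster can tolerate the loss of 1 node.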
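The quorum arithmetic is easy to tabulate. The following is a small sketch of the two formulas above using integer division; the function names are hypothetical and this is not TDengine code.

```python
def quorum(n):
    """Smallest number of nodes that forms a majority of n."""
    return n // 2 + 1


def tolerated_failures(n):
    """Largest number of nodes that can fail while a majority remains."""
    return (n - 1) // 2


# Print cluster size, quorum and tolerated failures for a few sizes.
for n in (1, 2, 3, 5):
    print(n, quorum(n), tolerated_failures(n))
```

Note that a 2-node cluster tolerates no more failures than a single node, which is why 3 replicas are used here.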

Disconnect the tdc01 node

You can either power off the VM or just disconnect the network.

taos> show dnodes;

id | endpoint | vnodes | support_vnodes | status | create_time | reboot_time | note |

===================================================================================================================================================================

1 | tdc01:6030 | 3 | 0 | offline | 2023-09-04 18:58:10.391 | 1969-12-31 16:00:00.000 | status not received |

2 | tdc02:6030 | 3 | 4 | ready | 2023-09-04 19:01:21.746 | 2023-09-04 19:00:18.628 | |

3 | tdc03:6030 | 3 | 4 | ready | 2023-09-04 19:02:48.980 | 2023-09-04 19:02:40.144 | |

Query OK, 3 row(s) in set (0.004617s)

Insertion and querying still works

taos> insert into testha.t1 values(now, 1)(now+1s, 2);

Insert OK, 2 row(s) affected (0.002733s)

taos> select count(*) from testha.t1;

count(*) |

========================

6 |

Query OK, 1 row(s) in set (0.013952s)

taos> insert into testha.t1 values(now, 1)(now+1s, 2);

Insert OK, 2 row(s) affected (0.002751s)

taos> select count(*) from testha.t1;

count(*) |

========================

8 |

Query OK, 1 row(s) in set (0.002328s)

taos> select * from testha.t1;

ts | n |

========================================

2023-09-04 20:42:00.449 | 1 |

2023-09-04 20:42:01.449 | 2 |

2023-09-04 20:42:02.449 | 3 |

2023-09-04 20:42:03.449 | 4 |

2023-09-04 20:47:58.913 | 1 |

2023-09-04 20:47:59.913 | 2 |

2023-09-04 20:49:20.395 | 1 |

2023-09-04 20:49:21.395 | 2 |

Query OK, 8 row(s) in set (0.003532s)

Restore node tdc01

As soon as node tdc01 is restored, it rejoins the cluster.

Note that the mnode leader is now tdc03.

taos> show dnodes;

id | endpoint | vnodes | support_vnodes | status | create_time | reboot_time | note |

===========================================================================================================================================================================

1 | tdc01:6030 | 3 | 4 | ready | 2023-09-04 18:58:10.391 | 2023-09-04 20:50:34.226 | |

2 | tdc02:6030 | 3 | 4 | ready | 2023-09-04 19:01:21.746 | 2023-09-04 19:00:18.628 | |

3 | tdc03:6030 | 3 | 4 | ready | 2023-09-04 19:02:48.980 | 2023-09-04 19:02:40.144 | |

Query OK, 3 row(s) in set (0.002064s)

taos> show mnodes;

id | endpoint | role | status | create_time | role_time |

==============================================================================================================================

1 | tdc01:6030 | follower | ready | 2023-09-04 18:58:10.393 | 2023-09-04 20:50:36.959 |

2 | tdc02:6030 | follower | ready | 2023-09-04 20:23:33.318 | 2023-09-04 20:45:43.682 |

3 | tdc03:6030 | leader | ready | 2023-09-04 20:24:38.435 | 2023-09-04 20:45:43.693 |

Query OK, 3 row(s) in set (0.004637s)

Take a look at the vgroups as well.

taos> show testha.vgroups\G;

*************************** 1.row ***************************

vgroup_id: 3

db_name: testha

tables: 0

v1_dnode: 1

v1_status: follower

v2_dnode: 2

v2_status: follower

v3_dnode: 3

v3_status: leader

v4_dnode: NULL

v4_status: NULL

cacheload: 0

cacheelements: 0

tsma: 0

*************************** 2.row ***************************

vgroup_id: 4

db_name: testha

tables: 1

v1_dnode: 1

v1_status: follower

v2_dnode: 2

v2_status: leader

v3_dnode: 3

v3_status: follower

v4_dnode: NULL

v4_status: NULL

cacheload: 0

cacheelements: 0

tsma: 0

Query OK, 2 row(s) in set (0.016652s)

Only 1 node is up

Now we can address the case when only a single node is running.

We power down or disconnect nodes tdc01 and tdc02.
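With two of the three nodes down, only one node remains, which is below the RAFT quorum of 2. The sketch below shows one way to make that check from rows shaped like the `show dnodes` output in this post. The sample rows are copied from the earlier run in which only tdc01 was offline, the helper names are hypothetical, and the column positions are an assumption based on that output.

```python
def ready_dnodes(rows):
    """Return the endpoints whose status column is 'ready'."""
    ready = []
    for row in rows.strip().splitlines():
        cols = [col.strip() for col in row.split("|")]
        # Assumed column order: id, endpoint, vnodes, support_vnodes, status, ...
        if len(cols) >= 5 and cols[4] == "ready":
            ready.append(cols[1])
    return ready


def has_quorum(ready_count, replicas=3):
    """True when the ready nodes form a majority of the replicas."""
    return ready_count >= replicas // 2 + 1


# Data rows from the run in this post where only tdc01 was offline.
ONE_NODE_DOWN = """
1 | tdc01:6030 | 3 | 0 | offline | 2023-09-04 18:58:10.391 | 1969-12-31 16:00:00.000 | status not received |
2 | tdc02:6030 | 3 | 4 | ready | 2023-09-04 19:01:21.746 | 2023-09-04 19:00:18.628 | |
3 | tdc03:6030 | 3 | 4 | ready | 2023-09-04 19:02:48.980 | 2023-09-04 19:02:40.144 | |
"""

ready = ready_dnodes(ONE_NODE_DOWN)
print(ready, has_quorum(len(ready)))  # two ready nodes still form a majority
print(has_quorum(1))                  # a single remaining node does not
```

In the single-node case the table itself cannot be queried, because the CLI cannot connect, so the check has to be made from what you know about the nodes you took down.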

tdengine@tdc03:~$ ping tdc01

PING tdc01 (10.0.2.4) 56(84) bytes of data.

^C

--- tdc01 ping statistics ---

3 packets transmitted, 0 received, 100% packet loss, time 2055ms

tdengine@tdc03:~$ ping tdc02

PING tdc02 (10.0.2.6) 56(84) bytes of data.

From tdc03 (10.0.2.5) icmp_seq=1 Destination Host Unreachable

From tdc03 (10.0.2.5) icmp_seq=2 Destination Host Unreachable

From tdc03 (10.0.2.5) icmp_seq=3 Destination Host Unreachable

From tdc03 (10.0.2.5) icmp_seq=4 Destination Host Unreachable

^C

--- tdc02 ping statistics ---

6 packets transmitted, 0 received, +4 errors, 100% packet loss, time 5066ms

pipe 3

Now if we try to connect to the cluster, we cannot. Therefore, it is impossible to query or insert data.

tdengine@tdc03:~$ taos -h tdc03

failed to create dir:/var/log/taos/ since Operation not permitted WARING: Create taoslog failed:Operation not permitted. configDir=/etc/taos/

Welcome to the TDengine Command Line Interface, Client Version:3.1.0.0

Copyright (c) 2022 by TDengine, all rights reserved.

failed to connect to server, reason: Sync leader is unreachable

Now we spin up or connect the node tdc01.

tdengine@tdc03:~$ ping tdc01

PING tdc01 (10.0.2.4) 56(84) bytes of data.

64 bytes from tdc01 (10.0.2.4): icmp_seq=1 ttl=64 time=0.285 ms

64 bytes from tdc01 (10.0.2.4): icmp_seq=2 ttl=64 time=1.10 ms

^C

--- tdc01 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1003ms

rtt min/avg/max/mdev = 0.285/0.691/1.098/0.406 ms

Try to connect to the cluster again.

taos> select count(*) from test.meters;

count(*) |

========================

10000 |

taos> show databases;

name |

=================================

information_schema |

performance_schema |

test |

testha |

Query OK, 4 row(s) in set (0.004463s)

taos> select count(*) from testha.t1;

count(*) |

========================

8 |

Query OK, 1 row(s) in set (0.012661s)

TDengine Cloud – Enterprise-ready

With TDengine Cloud you get a highly secure, fully managed service with backups, scalability, high availability and enterprise-ready features including SSO and replication across public clouds. You can sign up for a Starter TDengine Cloud account in less than a minute, and it does not require a credit card.

TDengine Cloud is the easiest way for anyone to try the technology behind TDengine and experience a high-performance, next-generation data historian.