Introduction

The SF Technology big data ecosystem has to collect and store large amounts of monitoring data every day to keep the whole IT system running smoothly and stably. Although we had been using OpenTSDB+HBase to store all metrics from the big data monitoring platform, that solution had many pain points, so an upgrade was needed. After comparing several prevailing time-series databases (TSDB), including IoTDB, Druid, ClickHouse, and TDengine, we chose TDengine. Since adopting TDengine, we have seen marked improvements in stability and in write and query performance. Moreover, storage costs are about one tenth of those of the former solution.

Scenarios and Pain Points

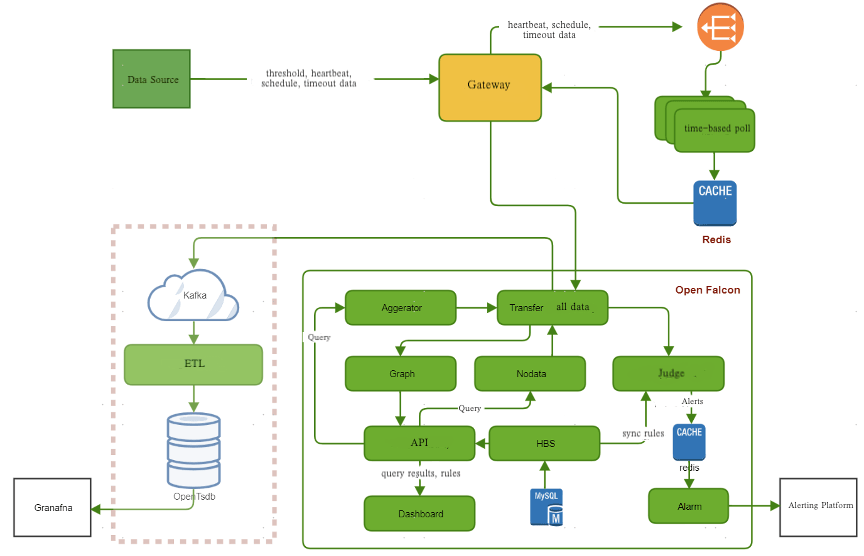

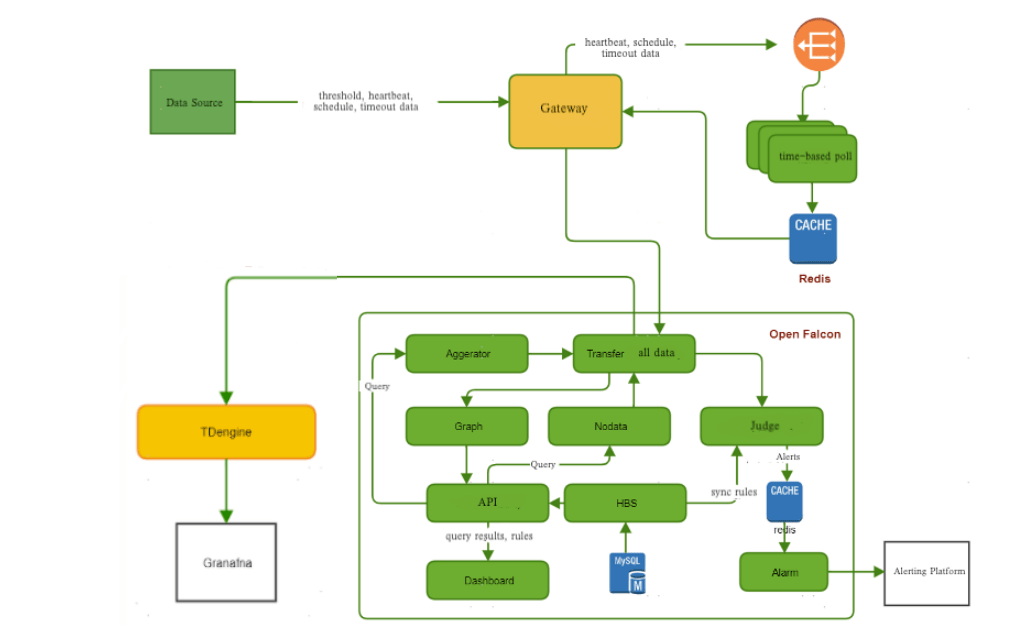

SF Technology is a leading logistics technology company dedicated to building an Intelligent Operation Center (IOC) and a Smart Logistics Network, and it is continuously engaged in big data and its products, AI and its applications, and integrated logistics solutions. To ensure the stable operation of all kinds of big data services, we built a big data monitoring platform based on OpenFalcon. Because OpenFalcon uses RRDtool to handle data, it is not suitable for storing all monitoring data. We therefore initially adopted OpenTSDB+HBase as the storage solution.

Currently, billions of records are written into the big data monitoring platform per day. As the volume of metric data grows, we face several pain points:

- First, the monitoring platform relies on external dependencies such as Kafka, Spark, and HBase to handle data. An overly long data processing pipeline undermines the reliability of the platform. Meanwhile, it is difficult to locate the problem when an external dependency is

- Because the amount of monitoring data is extremely large and all data has to be retained for more than half a year, we use a cluster of 4 OpenTSDB nodes and 21 HBase nodes to store it. Even after compression, each day of data still needs 1.5T of storage (3 copies), so storage costs are very high.

- OpenTSDB barely satisfies our data writing requirements and cannot handle queries over long time ranges or at high frequency. On the one hand, query latency is high (responses take over 10 seconds); on the other hand, because OpenTSDB’s QPS is relatively low, highly concurrent queries can easily bring down the whole system, which might suspend all services.

Why Use TDengine for Big Data Monitoring Platform?

To resolve these problems, we needed a more efficient and cost-effective solution, so we researched the technical solutions offered by prevailing time-series databases. The following is a brief summary:

- IoTDB: A top-level Apache project contributed by Tsinghua University. It shows high performance in single-machine mode, but it does not support cluster mode and has shortcomings in data recovery and scalability.

- Druid: A high-performance, scalable distributed database that features automatic recovery, automatic load balancing, and ease of operation. However, Druid relies on ZooKeeper and uses Hadoop for deep storage, which makes the system too complex.

- ClickHouse: It demonstrates the highest performance, but its management and maintenance costs are too high. Moreover, scaling a ClickHouse cluster is very complex and requires more resources.

- TDengine: High performance, low management costs, and simple management and maintenance mean that TDengine meets all the requirements of our usage scenarios. In addition, TDengine is highly available and supports scaling out.

After a comprehensive comparison, we selected TDengine for the upgrade. TDengine supports various connectors for writing data, including JDBC and HTTP. Because monitoring data ingestion requires high write performance, we adopted the Go connector. The write procedure is as follows:

- Performing data cleaning to remove incorrect data

- Formatting data to implement data transformation

- Concatenating SQL statements to generate different SQL statements based on the characteristics of monitoring data

- Using batch writing to write data after SQL concatenation
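The four steps above can be sketched roughly as follows. This is only an illustration in Python, not the Go code used in production: the record fields (`metric`, `host`, `ts`, `value`), the helper names, and the batch size are all assumptions. The generated statements rely on TDengine's `INSERT INTO ... USING ... TAGS ... VALUES` form, which lets a single statement write to several tables and create missing subtables automatically.

```python
from typing import Dict, Iterable, Iterator

# Hypothetical record shape: {"metric": str, "host": str, "ts": int (epoch ms), "value": number}
Record = Dict[str, object]


def clean(records: Iterable[Record]) -> Iterator[Record]:
    """Step 1: drop records with missing fields or non-numeric values."""
    for r in records:
        if not r.get("metric") or not r.get("host"):
            continue
        if not isinstance(r.get("ts"), int):
            continue
        value = r.get("value")
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            continue
        yield r


def sanitize(name: str) -> str:
    """Step 2: map arbitrary metric/host names to safe SQL identifiers."""
    return "".join(c if c.isascii() and c.isalnum() else "_" for c in name.lower())


def to_clause(r: Record) -> str:
    """Step 3: build one 'table USING stable TAGS (...) VALUES (...)' clause."""
    stable = sanitize(str(r["metric"]))
    table = f"{stable}_{sanitize(str(r['host']))}"
    host_tag = str(r["host"]).replace("'", "")  # keep the quoted tag value well-formed
    return f"{table} USING {stable} TAGS ('{host_tag}') VALUES ({r['ts']}, {float(r['value'])})"


def batches(records: Iterable[Record], batch_size: int = 500) -> Iterator[str]:
    """Step 4: concatenate clauses into multi-table INSERT statements."""
    clauses = [to_clause(r) for r in clean(records)]
    for i in range(0, len(clauses), batch_size):
        yield "INSERT INTO " + " ".join(clauses[i:i + batch_size])
```

Each string yielded by `batches` would then be handed to the connector as one write request, so the number of round trips drops by roughly the batch size.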

Data Modeling

Before data ingestion, a schema should be designed for TDengine based on the characteristics of the data to achieve the best performance. The following are the typical characteristics of our monitoring data:

- The data types are fixed, and every record carries a timestamp.

- The data is unpredictable: new tags are required whenever a new node or server is added, so the data model cannot be fully designed in advance and has to be adjusted in real time.

- There are not many tags, but the tags change constantly. Meanwhile, the data values are relatively uniform, consisting of timestamps, metrics, and intervals.

- Each record is relatively small (approx. 100 bytes per record).

- The total amount of data is enormous: over 5 billion records per day.

- The data needs to be retained for at least six months.
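To get a feel for the scale these characteristics imply, the figures above can be combined in a quick back-of-the-envelope calculation. The 180-day retention period is an assumption standing in for "six months", and the results describe a single uncompressed copy.

```python
RECORDS_PER_DAY = 5_000_000_000   # "over 5 billion records per day"
BYTES_PER_RECORD = 100            # "approx. 100 bytes per record"
RETENTION_DAYS = 180              # assumption: six months taken as 180 days

raw_bytes_per_day = RECORDS_PER_DAY * BYTES_PER_RECORD
avg_records_per_second = RECORDS_PER_DAY / 86_400
raw_bytes_retained = raw_bytes_per_day * RETENTION_DAYS

print(f"raw volume per day:        {raw_bytes_per_day / 1e9:.0f} GB")
print(f"average write rate:        {avg_records_per_second:,.0f} records/s")
print(f"raw volume over retention: {raw_bytes_retained / 1e12:.0f} TB")
```

Under these assumptions the platform has to absorb about 500 GB of raw data per day at an average of roughly 58,000 records per second, and hold about 90 TB of raw data over the retention window before compression and replication.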

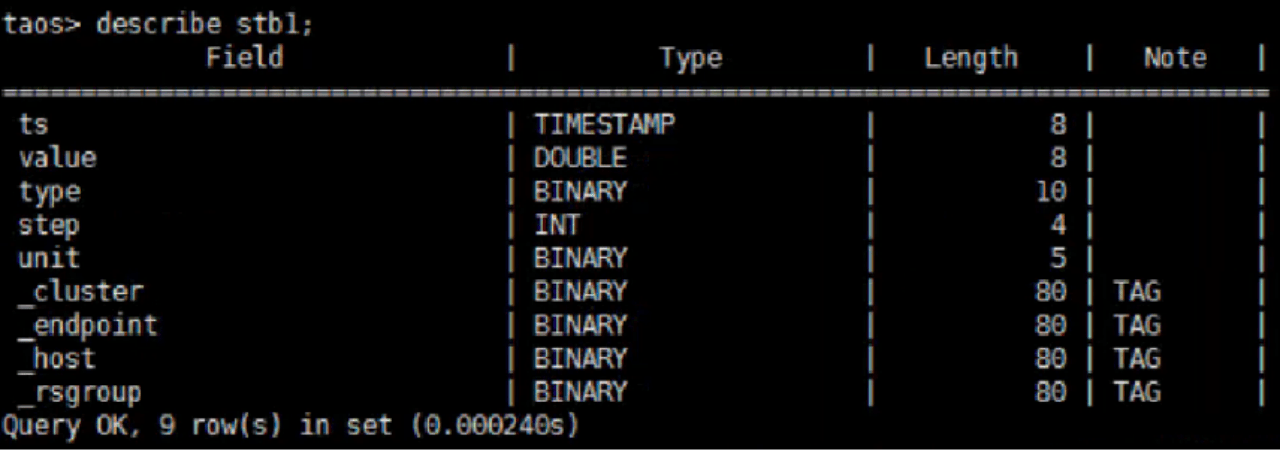

Following the data model recommended by TDengine, a STable should be created for each type of data point. Take “cpu_usage” as an example: the “cpu_usage” metrics can be collected from every host machine (a data point), so we create one STable to store those metrics. Based on the characteristics of monitoring data and our usage scenarios, we designed the data model as follows:

- Create a STable for each kind of data to facilitate further aggregation and analysis.

- Set the descriptive names that accompany each metric as the tags of the STable.
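As an illustration of this model, the sketch below generates a `CREATE STABLE` statement for one metric type. The column names (`metric_value`, `interval_sec`) and the tag names are hypothetical placeholders chosen to match the "timestamp, metric, interval" shape described above; they are not the schema actually used in this project.

```python
from typing import Dict


def create_stable_sql(metric: str, tags: Dict[str, str]) -> str:
    """Build a CREATE STABLE statement for one metric type.

    Column and tag names are illustrative assumptions, not the project's real schema.
    """
    columns = "ts TIMESTAMP, metric_value DOUBLE, interval_sec INT"
    tag_defs = ", ".join(f"{name} {sql_type}" for name, sql_type in tags.items())
    return f"CREATE STABLE IF NOT EXISTS {metric} ({columns}) TAGS ({tag_defs})"


# One STable per metric type; every host reporting "cpu_usage" becomes a subtable of it.
print(create_stable_sql("cpu_usage", {"endpoint": "BINARY(64)", "component": "BINARY(32)"}))
```

Because all subtables of one STable share the same columns, aggregating a metric across hosts becomes a single query against the STable filtered by tags.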

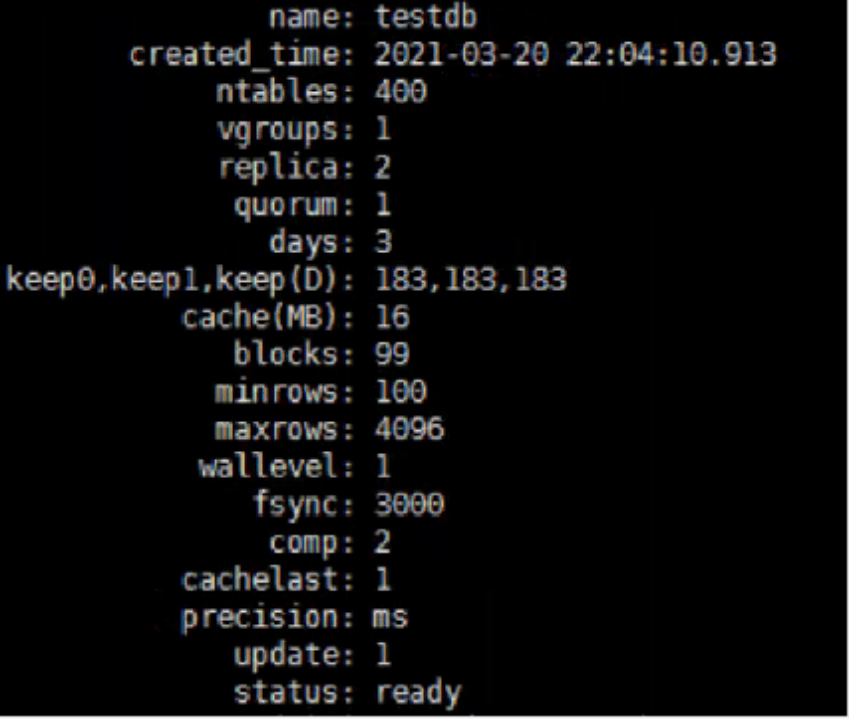

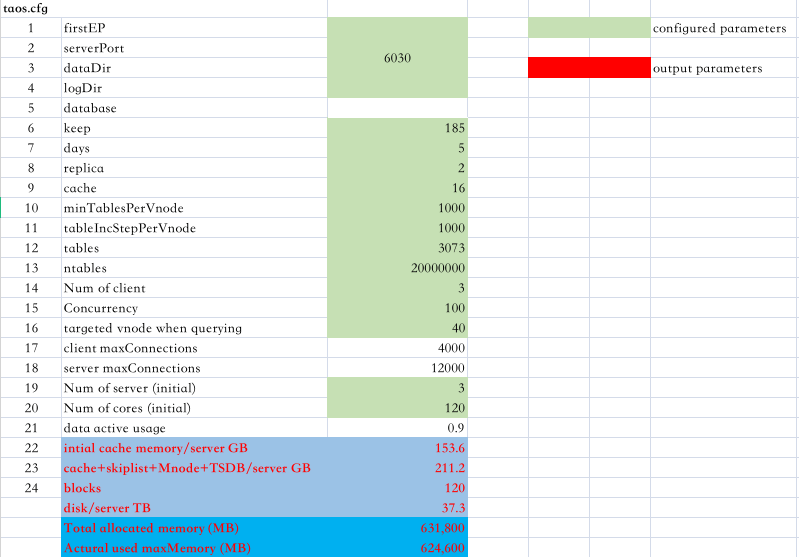

The configuration of the database:

The schema of STable is like this:

Field Application

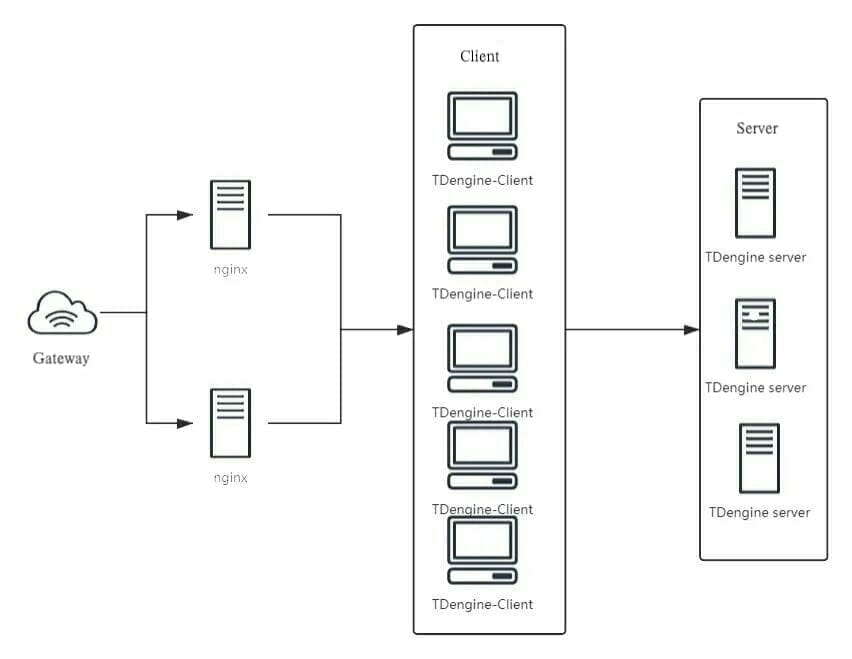

The high availability of the big data monitoring platform is key to the smooth running of the big data processing platform. Because metric throughput will keep increasing as the business grows, the storage must be able to scale out. Therefore, the field application of TDengine is as follows:

To ensure high availability and scalability, we use an Nginx cluster for load balancing and separated out a client layer so that it can be scaled out or in according to the data traffic.

The difficulties we encountered during deployment were as follows:

- First, we could not design the schemas of STables and tables in advance because the incoming metric data was unpredictable (the connector only identifies the data type), so for every record written we had to determine whether a new STable needed to be created. Querying TDengine for every such check would have greatly reduced write performance. To resolve this problem, we built a local cache, which greatly increased the insert speed.

- Second, we ran into two problems with queries. The first was a bug triggered by Grafana, which caused a system breakdown when a Grafana dashboard was refreshed; TDengine developers fixed it efficiently and professionally. The other was that all query requests were sent to a single server via HTTP, which could overload one physical machine and undermine the high availability of the query service, so we used Nginx to balance query requests evenly across separate nodes.

- Third, data types and data volume had a significant impact on TDengine’s performance, so configuring parameters for the specific usage scenario was necessary. TDengine supports the storage of 20 million tables in a three-node cluster (256G). If the number of tables exceeds 20 million, a query cache (approx. 10G) should be reserved and new nodes should be added to guarantee write performance.
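The local cache mentioned in the first difficulty can be pictured as a set of STable names that are already known to exist, so that the database is consulted at most once per metric. The sketch below is a minimal single-threaded illustration; the class name, the `execute` callable, and the DDL string are hypothetical and do not reflect the production Go code.

```python
from typing import Callable, Set


class StableCache:
    """Remembers which STables are known to exist, so DDL is issued once per metric."""

    def __init__(self, execute: Callable[[str], None]):
        self._execute = execute            # hypothetical callable that runs one SQL statement
        self._known: Set[str] = set()

    def ensure(self, stable: str, ddl: str) -> bool:
        """Run the DDL only on the first sighting of a STable; return True if it was run."""
        if stable in self._known:
            return False                   # cache hit: no round trip to the database
        self._execute(ddl)                 # IF NOT EXISTS in the DDL keeps this safe after restarts
        self._known.add(stable)
        return True


# Usage with a stand-in for the connector that simply records the statements it receives.
sent = []
cache = StableCache(sent.append)
ddl = "CREATE STABLE IF NOT EXISTS cpu_usage (ts TIMESTAMP, metric_value DOUBLE) TAGS (endpoint BINARY(64))"
cache.ensure("cpu_usage", ddl)
cache.ensure("cpu_usage", ddl)
print(len(sent))
```

With billions of records but a comparatively small number of distinct metrics, almost every lookup is a cache hit, which is why moving the check out of the database has such a large effect on insert speed.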

An Effective Upgrade

After the upgrade, the TDengine cluster handles all monitoring data writes with ease. The following graphic is the architecture of the upgraded system:

- The big data monitoring platform no longer relies on external dependencies, and the data processing pipeline is much shorter.

- The write speed of TDengine can reach 900,000 records per second in an optimal configuration. In general, the insert speed does not fall below 200,000 records per second.

- Queries based on precomputation are very fast (p99 queries return results within 0.7s), which is more than enough for our daily usage. Non-precomputed queries (with a time range of over 6 months) take only about 7-8s to return results.

- The number of physical machines in the server cluster was reduced from 21 to 3, and the required storage per day is 93G (2 copies). In the same scenario, OpenTSDB+HBase requires about 10 times the resources of TDengine, so TDengine has a clear advantage in lowering management and maintenance costs.
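The figures quoted in this article can be cross-checked with a short calculation. It assumes that "T" and "G" are binary units (1T = 1024G) and reuses the 5 billion records per day mentioned earlier; both are assumptions made for illustration.

```python
GB_PER_TB = 1024  # assumption: "T" and "G" are binary units

opentsdb_gb_per_day, opentsdb_copies = 1.5 * GB_PER_TB, 3   # 1.5T per day, 3 copies
tdengine_gb_per_day, tdengine_copies = 93, 2                # 93G per day, 2 copies

# Ratio of total daily storage, and the same ratio normalized to a single copy.
total_ratio = opentsdb_gb_per_day / tdengine_gb_per_day
per_copy_ratio = (opentsdb_gb_per_day / opentsdb_copies) / (tdengine_gb_per_day / tdengine_copies)

# Time needed to ingest one day of data at the quoted write speeds.
RECORDS_PER_DAY = 5_000_000_000
hours_at_floor = RECORDS_PER_DAY / 200_000 / 3600
hours_at_peak = RECORDS_PER_DAY / 900_000 / 3600

print(f"total storage ratio:    {total_ratio:.1f}x")
print(f"per-copy storage ratio: {per_copy_ratio:.1f}x")
print(f"one day of data at 200k records/s: {hours_at_floor:.1f} h")
print(f"one day of data at 900k records/s: {hours_at_peak:.1f} h")
```

Normalized to one copy, the storage ratio comes out at about 11, in line with the roughly tenfold saving stated above; with the different replica counts included it is about 16.5. Even at the lower write speed, a full day of data could be ingested in under 7 hours, which leaves headroom for traffic peaks.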

Conclusion

In the big data monitoring scenario, TDengine has proven its advantages: lower management and maintenance costs, faster write and query performance, and easier operation. TDengine developers provided professional and efficient support throughout the upgrade, and we would like to express our sincere gratitude. Based on the problems we encountered in the project, we would also like to offer some suggestions for improving TDengine in similar application scenarios:

- Support more friendly “table_name” settings

- Support other big data platforms and aggregated queries with other platforms

- Support more diversified SQL statements

- Support smoother grayscale upgrades

- Support automatic deletion of tables

- Recover faster from breakdowns

Given the success of this upgrade, we plan to use TDengine in more application scenarios in the future, such as IoT and aggregated queries with other data sources via Hive on TDengine.