Working with high-volume metrics is a challenging endeavor, but one that is becoming more and more essential in a world of microservices, serverless workflows, and IoT devices.

Both TDengine and Tremor address parts of this space. They share many design principles, and together they form a highly effective solution.

Without diving too deep into what either of them does, TDengine is a high-performance time-series database (TSDB) using SQL as its query language. Tremor is a high-performance event processing engine using, you might have guessed it, a SQL dialect for its configuration.

There are many uses where these two technologies complement each other, including filtering, normalization, pre-aggregation, and dual writing, but the most interesting one, and the one we’re going to look at in this post, is alerting.

Before we dive into the how, let’s talk about why this is such a challenging task. Two things are needed for alerting to work: real-time processing for triggering the alert and fast persistence to get the context for the alert.

Without real-time processing of incoming events, alerting requires periodically fetching the state from the store, checking it against the alerting condition, and then triggering the alert. This introduces latency and, at scale, puts significant load on the database.

On the other hand, without a fast storage system, alerting is limited to a “point in time” observation. It either lacks the context that makes an alert actionable or requires an unreasonable amount of memory to keep that context around for the rare case that an alert fires.

You can see how TDengine and Tremor complement each other here, so let’s dive in and build something. We’ll start with the basic setup from Tremor’s metrics guide. This gives us a quickly runnable combination of TDengine, Tremor, Grafana, and Telegraf as a data collector.

Let’s look at one of our aggregated data points:

    {
      "timestamp": 1652101700000000000,
      "tags": {
        "host": "998fb3b53ea2",
        "cpu": "cpu-total",
        "window": "1min"
      },
      "field": "usage_idle",
      "measurement": "cpu",
      "stats": {
        "var": 0.14,
        "min": 94,
        "mean": 94,
        "percentiles": {
          "0.5": 95,
          "0.99": 95,
          "0.9": 95,
          "0.999": 95
        },
        "stdev": 0.35,
        "count": 6,
        "max": 95
      }
    }

To turn this into an alert, we first need to figure out what we want to alert on. So let’s come up with a condition: “the system’s CPU idle is less than 95% for at least a minute”.

This can be turned into a SELECT statement like this:

    select event from normalize
    where match event of
      case %{measurement == "cpu", field == "usage_idle", tags ~= %{cpu == "cpu-total", `window` == "1min"}, stats ~= %{mean < 95}} => true
      case _ => false
    end into alert;
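
To make the matching logic concrete, here is the same condition as a plain Python predicate. This is only an illustrative sketch, not part of the Tremor setup: the function name `is_cpu_idle_alert` and the `threshold` parameter are hypothetical, and the sample event is a trimmed copy of the data point shown earlier.

```python
def is_cpu_idle_alert(event: dict, threshold: float = 95.0) -> bool:
    """Return True when a 1-minute aggregate reports mean CPU idle below threshold."""
    tags = event.get("tags", {})
    mean = event.get("stats", {}).get("mean")
    return (
        event.get("measurement") == "cpu"
        and event.get("field") == "usage_idle"
        and tags.get("cpu") == "cpu-total"
        and tags.get("window") == "1min"
        # A missing or non-numeric mean never matches.
        and isinstance(mean, (int, float))
        and mean < threshold
    )


# Trimmed copy of the aggregated data point from above.
sample = {
    "measurement": "cpu",
    "field": "usage_idle",
    "tags": {"host": "998fb3b53ea2", "cpu": "cpu-total", "window": "1min"},
    "stats": {"mean": 94, "min": 94, "max": 95, "count": 6},
}

matches = is_cpu_idle_alert(sample)
```

With a mean of 94, the sample data point satisfies the condition, so it would be passed on as an alert candidate.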

One problem this leaves is that the alert will be triggered over and over again for as long as the condition is met, which is not helpful for the operations team looking after the systems. To solve this, we can throw in a simple deduplication script:

    # Initialize state
    match state of
      # If state wasn't set, set it
      case null =>
        let state = {"last": {}, "this": {}, "ingest_ns": ingest_ns}
      # If we're more than twice `swap_after` past the stored timestamp, we can just re-initialize
      case _ when ingest_ns - state.ingest_ns > swap_after * 2 =>
        let state = {"last": {}, "this": {}, "ingest_ns": ingest_ns}
      # If we're more than `swap_after` past the stored timestamp, we:
      # * move this -> last
      # * re-initialize `this`
      # * set `ingest_ns` for the next round
      case _ when ingest_ns - state.ingest_ns > swap_after =>
        let state.ingest_ns = ingest_ns;
        let state.last = state.this;
        let state.this = {}
      case _ => null
    end;

    # If we have already seen this alert in the current period, drop it
    match present state.this[event.tags.host] of
      case true => drop
      case _ => null
    end;

    # We haven't seen this event in the current period, so remember it
    let state.this[event.tags.host] = true;

    # If we saw it in the previous period, we also drop it
    match present state.last[event.tags.host] of
      case true => drop
      case _ => event
    end
    # Closes the enclosing script definition, whose header is not shown in this excerpt
    end;
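
The two-generation bookkeeping is easier to follow when traced with concrete timestamps. The Python sketch below mirrors the script's logic under some assumptions: the class name `Dedup` and the method `accept` are hypothetical, sets stand in for the `this` and `last` records, and timestamps are plain integers in whatever unit `swap_after` uses.

```python
class Dedup:
    """Suppress repeated alerts per host using two rotating generations."""

    def __init__(self, swap_after: int):
        self.swap_after = swap_after
        self.state = None  # created lazily on the first event

    def accept(self, host: str, ingest_ns: int) -> bool:
        """Return True if the alert should be forwarded, False if dropped."""
        if self.state is None or ingest_ns - self.state["ingest_ns"] > self.swap_after * 2:
            # First event, or a long quiet period: start from scratch.
            self.state = {"last": set(), "this": set(), "ingest_ns": ingest_ns}
        elif ingest_ns - self.state["ingest_ns"] > self.swap_after:
            # One period has passed: rotate this -> last.
            self.state["ingest_ns"] = ingest_ns
            self.state["last"] = self.state["this"]
            self.state["this"] = set()

        if host in self.state["this"]:
            return False  # already alerted in the current period
        self.state["this"].add(host)
        # Forward only if the host was not alerted in the previous period either.
        return host not in self.state["last"]


dedup = Dedup(swap_after=60)
decisions = [
    dedup.accept("a", 0),    # first alert for host a: forwarded
    dedup.accept("a", 10),   # same period: dropped
    dedup.accept("a", 70),   # after rotation, still in `last`: dropped
    dedup.accept("a", 300),  # long gap, state re-initialized: forwarded
    dedup.accept("b", 310),  # different host: forwarded
]
```

Note the consequence of this design: a host that keeps alerting stays suppressed, and a new alert is only forwarded once the host has been quiet long enough to drop out of both generations.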

Last but not least, we can format the alert into something that Alerta can understand:

    select
    {
      "environment": "Production",
      "event": "CPU",
      "group": "Host",
      "origin": "telegraf",
      "resource": event.tags.host,
      "service": ["host"],
      "severity": "major",
      "text": "#{ event.measurement } #{ event.field } is below the minimum of 95%",
      "type": "exceptionAlert",
      "value": "idle < 95%"
    } from dedup into alert;
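
For readers who want to see the resulting payload as data, the following Python sketch builds the same record from an event. It is an illustration only: the function name `to_alerta_payload` and the `threshold` parameter are hypothetical, and the wording of the `text` field is my own.

```python
def to_alerta_payload(event: dict, threshold: int) -> dict:
    """Build an alert record with the same fields as the select statement."""
    return {
        "environment": "Production",
        "event": "CPU",
        "group": "Host",
        "origin": "telegraf",
        # The affected host becomes the alert's resource.
        "resource": event["tags"]["host"],
        "service": ["host"],
        "severity": "major",
        "text": f"{event['measurement']} {event['field']} is below {threshold}%",
        "type": "exceptionAlert",
        "value": f"idle < {threshold}%",
    }


event = {
    "measurement": "cpu",
    "field": "usage_idle",
    "tags": {"host": "998fb3b53ea2", "cpu": "cpu-total", "window": "1min"},
}
payload = to_alerta_payload(event, threshold=95)
```

Passing the threshold in as a parameter keeps the alert text in step with the condition that triggered it.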

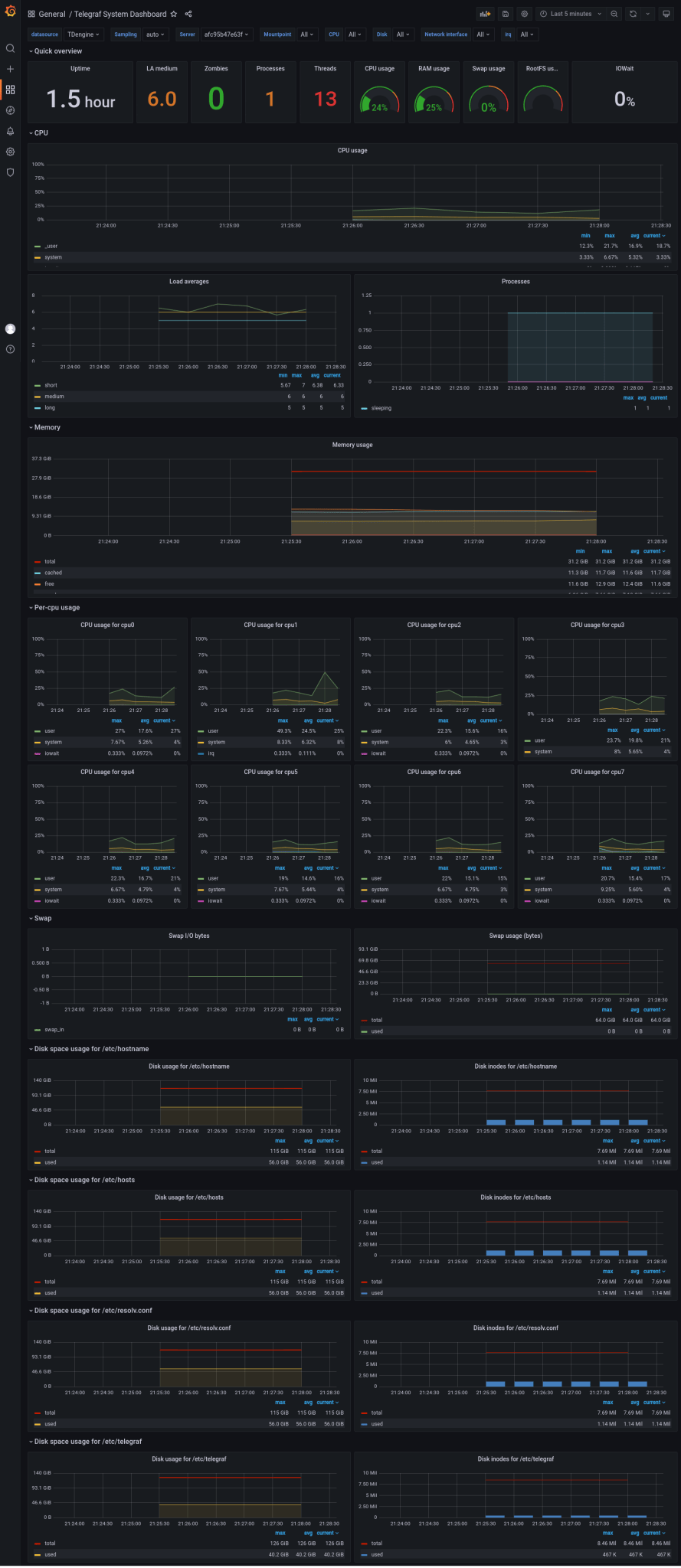

Here is a screenshot of the system monitoring dashboard:

In the demo, the alert is sent to Alerta, but we could equally send it to any other HTTP endpoint, for example to show alerts in Slack or Discord or to forward them to PagerDuty.
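
As a rough sketch of what "any other HTTP endpoint" means in practice, the Python snippet below prepares a JSON POST request for an alert payload using only the standard library. The function name `build_alert_request` and the URL are assumptions made for illustration. In the actual setup, Tremor delivers the alert itself, and each receiving service expects its own payload shape and authentication.

```python
import json
import urllib.request


def build_alert_request(payload: dict, url: str) -> urllib.request.Request:
    """Prepare (but do not send) a JSON POST request carrying the alert."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical endpoint: replace with the URL of your alerting service.
ALERT_URL = "http://localhost:8080/api/alert"

request = build_alert_request({"resource": "998fb3b53ea2", "severity": "major"}, ALERT_URL)

# Sending is left out so the example runs without a server:
# urllib.request.urlopen(request, timeout=5)
```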