Introduction

I came across a blog post written by Jeff Tao, the CEO and founder of TDengine. It was a heartfelt post about his father, and I was struck by its sincerity and openness. I reached out to him, and it turned out that the technology he had developed into a product was something I had been looking for. Jeff was forthcoming and helpful and gave me a thorough introduction to time-series databases. He even showed me the source code of TDengine to illustrate answers to some of my questions.

We needed a time-series database (TSDB), and after a careful analysis of our requirements, and with all of Jeff’s support and enthusiasm, we chose TDengine. Once the dust had settled on our project, I decided to write this blog.

Business needs

Lion Bridge Group Network provides a logistics platform that tracks commercial vehicle locations and also provides computing services for analytics. Lion Bridge’s Big Data team had designed a system that collected data over the standard JT808 protocol and streamed it into Apache Flink. Flink performed some calculations, and the calculated data was made available to business users in various groups, including risk management, finance and even fraud detection. More than 40 million records were being written daily, and historical data had to be available for at least the past 6 months.

Iterations of system architecture

We iterated through several architectures before landing on TDengine. The four main iterations are described below.

Stage 1

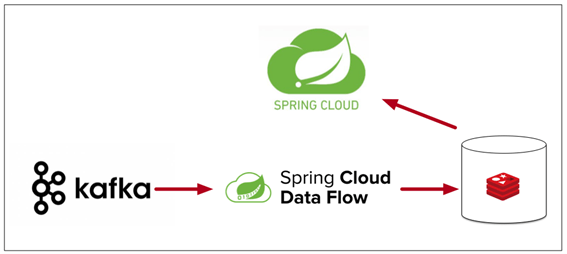

In this phase, the architecture was roughly as shown above and gave us only very basic functionality. It was constrained by our team size, server resources and available time.

We used a message queue (MQ) to ingest manufacturers’ data, together with non-distributed real-time computing. This gave us basic functionality: real-time location tracking and queries.

Stage 2

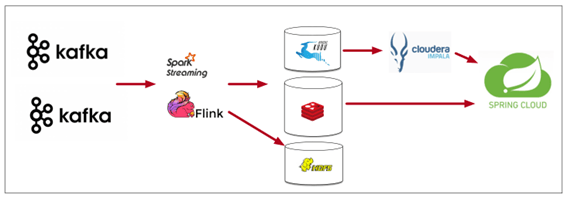

In stage two we revised the architecture and built a cluster that used Apache Hadoop YARN for resource scheduling. This gave us a distributed system for real-time processing, in which Apache Spark and Flink streamed data to Redis, Apache HDFS and Apache Kudu. The result was a fairly elastic and resilient system.

Since we were tracking vehicle location in real-time, our system had to write large amounts of data in real-time while also enabling low-latency queries on large amounts of data. We used Apache Kudu for analytics and used Cloudera Impala for SQL queries. GPS data was compressed and written to Apache HDFS.

Stage 3

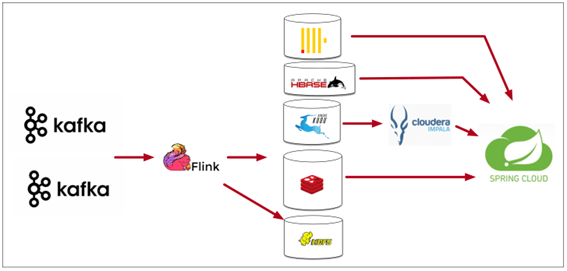

As our requirements were gradually becoming more stringent, we were trying to simplify and optimize our system.

We decided to eliminate Spark and unify all calculations in Flink. We also wanted to simplify the storage of GPS records, so we decided to test HBase and ClickHouse as replacements for the Kudu + Impala model.

We were not satisfied with HBase: it has a reasonable partitioning strategy, but it does not support SQL. ClickHouse satisfied both requirements. However, while its performance was pretty good, it was not enough to meet our growing demands.

Stage 4

In 2021 we developed a new system in which we incorporated the JT808 protocol for commercial vehicle tracking. This enhanced our system because we were able to screen providers based on market agreements and improve the bidding and bargaining processes with a view to increasing the overall efficiency of the platform from the end user perspective.

As mentioned above, we had conducted a series of tests on ClickHouse and found some issues. Taking location tracking as an example, the query latency for a single vehicle was high. We were searching for a platform that offered extremely high write performance and very low latency, both for queries across large timespans and for real-time queries, and that also supported SQL and met all our business requirements.

Since GPS data is time-series data, we had been looking for databases that deal optimally with time-series big data. It was at this time that we serendipitously came across TDengine and the blog of its founder, Jeff Tao.

Why We Chose TDengine

TDengine is an excellent time-series database designed and optimized for the typical business scenarios and data characteristics of the Internet of Things and Big Data. Below I share some elegant design strategies that impressed me.

Device = Table

The idea of one table per device is quite counterintuitive, especially in the standard database world. In ClickHouse and most other databases, tables are usually modeled around business dimensions. In TDengine, tables are built per collection point, so one device equals one table.

For Lion Bridge, if we have 500,000 vehicles on the road, then we need to create 500,000 tables. At first glance this strains credulity, but the approach has advantages. The first is that we want every device to be queryable with very low latency over a recent timeframe. In TDengine, the data of a single device is stored contiguously and can be read in a single block, which largely eliminates random disk access. Like Kafka’s sequential read and write mechanism, this is extremely efficient, and it resolved our pain point for this case.

At the same time, this design also greatly improves the write speed of time-series data, which is easy to understand for anyone familiar with sequential writes in Kafka.
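The effect of keeping each device's data together can be illustrated with a toy model. This is only a sketch of the idea, not TDengine's storage engine: the `tables` dictionary, the vehicle ids and the coordinates are all made up for illustration.

```python
from collections import defaultdict

# Toy model only: each "table" is a Python list that is appended to in
# arrival order, standing in for one device's contiguous storage.
tables = defaultdict(list)


def write(vehicle_id, ts, lng, lat):
    """Append one GPS point to the table that belongs to this vehicle."""
    tables[vehicle_id].append((ts, lng, lat))


def recent(vehicle_id, n):
    """Return the last n points of one vehicle as a single contiguous slice."""
    return tables[vehicle_id][-n:]


# Points from different vehicles arrive interleaved...
write("car_a", 1, 116.40, 39.90)
write("car_b", 1, 121.47, 31.23)
write("car_a", 2, 116.41, 39.91)
write("car_b", 2, 121.48, 31.24)
write("car_a", 3, 116.42, 39.92)

# ...but each vehicle's history stays together and in time order.
print(recent("car_a", 2))
```

Both the write (an append) and the recent-window read (a slice at the end) touch only one vehicle's data, which is the property the one-table-per-device model relies on.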

“Inheritance” at the table level

TDengine has the concept of a supertable, which is an abstract collection of ordinary tables of one type. For example, for a particular type of sensor one can declare a supertable with certain tags (labels), and each sensor of that type gets its own subtable, which “inherits” the supertable’s tags. Pre-aggregation and pre-calculation (similar to index queries) on the supertable can greatly improve efficiency and reduce disk scans.
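As a rough sketch of what this looks like in practice, the helpers below build TDengine-style `CREATE STABLE` and `CREATE TABLE ... USING` statements as plain strings. The supertable name `vehicle_location`, its columns and the `plate` and `vendor` tags are hypothetical; our real schema is not shown here.

```python
import re

SUPERTABLE = "vehicle_location"  # hypothetical supertable name


def create_supertable_sql():
    """One schema shared by every vehicle, plus tags that describe a vehicle."""
    return (
        f"CREATE STABLE IF NOT EXISTS {SUPERTABLE} "
        "(ts TIMESTAMP, lng DOUBLE, lat DOUBLE, speed FLOAT) "
        "TAGS (plate BINARY(16), vendor BINARY(32))"
    )


def table_name(vehicle_id):
    """Derive a table name from a vehicle id (letters, digits, underscore)."""
    return "v_" + re.sub(r"[^0-9A-Za-z_]", "_", vehicle_id)


def create_subtable_sql(vehicle_id, plate, vendor):
    """One subtable per vehicle; its schema comes from the supertable.

    Illustration only: tag values are interpolated directly, so real code
    should escape them or use the driver's parameter binding.
    """
    return (
        f"CREATE TABLE IF NOT EXISTS {table_name(vehicle_id)} "
        f"USING {SUPERTABLE} TAGS ('{plate}', '{vendor}')"
    )


def count_by_vendor_sql(vendor):
    """Aggregate across all subtables by filtering on a tag."""
    return f"SELECT COUNT(*) FROM {SUPERTABLE} WHERE vendor = '{vendor}'"


print(create_supertable_sql())
print(create_subtable_sql("A-123", "P1", "acme"))
print(count_by_vendor_sql("acme"))
```

Queries against a single vehicle go to its subtable, while queries across vehicles go to the supertable and filter on tags.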

Scientific and logical distributed architecture

In TDengine, all logical units are called nodes, and a prefix letter distinguishes the different kinds of node:

- Physical node (Pnode): a physical machine, generally speaking a bare metal node.

- Data node (Dnode): a running instance, which is also the unit that groups data storage.

- Virtual node (Vnode): the smallest unit of work, which is actually responsible for data storage and computing. Vnodes are combined to form a Dnode.

The reason we call it scientific is that when data is sharded, the Vnodes in a Dnode can be distributed, and each Vnode can hold the tables of multiple devices. This is similar to MongoDB’s sharding mechanism. In keeping with TDengine’s original design intention of storing the data from a device contiguously and in order, a device is mapped to a single Vnode instead of being split across multiple Vnodes, which avoids scattering its data across different locations.

In TDengine, the Vgroup mechanism is used to ensure highly reliable data replication and synchronization. You can read more about this on the TDengine website. All in all, these functional divisions and logical designs enable TDengine not only to be highly reliable, but also to provide extremely low-latency reads and extremely high-performance writes for millions of devices. Of course, to see how powerful it is, you can refer to the stress test comparison, or you can try it out yourself.
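The rule "one device maps to exactly one Vnode, and one Vnode holds many devices" can be sketched as follows. The hashing scheme is an assumption chosen for illustration (a CRC32 of the table name); how TDengine actually assigns tables to Vnodes is not described in this post.

```python
import zlib


def vnode_for(table_name, vnode_count):
    """Map a table to exactly one vnode using a stable hash of its name."""
    return zlib.crc32(table_name.encode("utf-8")) % vnode_count


def place(table_names, vnode_count):
    """Group tables by the vnode that would hold them."""
    placement = {i: [] for i in range(vnode_count)}
    for name in table_names:
        placement[vnode_for(name, vnode_count)].append(name)
    return placement


names = [f"v_{i}" for i in range(10)]  # hypothetical per-vehicle tables
for vnode, held in place(names, 4).items():
    print(vnode, held)
```

Because the mapping depends only on the table name, every write and read for a given vehicle lands on the same Vnode, so that vehicle's data is never split.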

Two subtleties of Mnode

In the above explanation, I deliberately avoided a description of Mnode, and I will explain it separately below.

In a logical sense, Mnode is very simple to understand: it is the manager of metadata. One can see this design in other distributed systems, such as Hadoop’s NameNode and the metadata that Kafka keeps in ZooKeeper. But TDengine’s Mnode has two additional and very clever functions.

First, the Mnode works almost invisibly, and the system can automatically create an Mnode on a Dnode of its choosing. When users need to access the TDengine cluster, they must first know where the Mnode is, and then they can obtain the cluster information and data through the Mnode. This process is handled by taosc (the client module). The subtlety and cleverness of the design is that you can directly access any Dnode in the cluster to obtain the information about the Mnode, which eliminates the need to obtain metadata from a centralized metadata node. This design strategy is also used in Elasticsearch and the new version of Kafka. In one sentence, it is a decentralized metadata storage and acquisition mechanism. In terms of architecture design, TDengine is at the leading edge.

Secondly, the Mnode can help monitor the load on Dnodes. If a Dnode is under high load or data storage is unevenly distributed, the Mnode can help transfer Dnode data and eliminate any skew in data storage. It is worth mentioning that during this process, TDengine continues to provide services without any effect on end users.

TDengine in practice

Location tracking data storage and queries

We streamed JT808 protocol data into Kafka and directly wrote the data to TDengine through Flink. We learned from TDengine staff that the TDengine driver uses off-heap memory, which greatly optimizes data caching and writing.
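One common way to write from a stream processor is to buffer rows and send them as a single multi-table `INSERT`. The sketch below only builds the statement text. It assumes batching and uses hypothetical table names and columns (`ts`, `lng`, `lat`, `speed`); it is not our actual Flink sink.

```python
def insert_sql(rows):
    """Build one multi-table INSERT from (table, ts_ms, lng, lat, speed) rows."""
    by_table = {}
    for table, ts_ms, lng, lat, speed in rows:
        # Group values by table so each table name appears once in the statement.
        by_table.setdefault(table, []).append(f"({ts_ms}, {lng}, {lat}, {speed})")
    parts = [f"{table} VALUES " + " ".join(values) for table, values in by_table.items()]
    return "INSERT INTO " + " ".join(parts)


batch = [
    ("v_a", 1700000000000, 116.4, 39.9, 60.0),
    ("v_b", 1700000000000, 121.5, 31.2, 45.5),
    ("v_a", 1700000001000, 116.5, 39.8, 61.0),
]
print(insert_sql(batch))
```

Grouping by table keeps each vehicle's rows together in the statement, which matches the one-table-per-device layout described earlier.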

In terms of queries, since TDengine natively supports SQL, the learning cost for application development is very low, which greatly improves development efficiency.

Last location query

Previously, we used Flink to write data into a Redis cluster so that we could store and query each vehicle’s last location. The “latest hot data cache” strategy in TDengine allows us to omit the Redis component entirely. This is an extremely helpful feature, and it shows that TDengine was really designed around the needs of IoT Big Data, in order to reduce architectural complexity and lower the costs of development, training and maintenance.

In terms of cache management strategy, TDengine chooses FIFO over LRU.

TDengine is designed from scratch for IoT time-series Big Data. Keeping in mind the characteristics of IoT time-series data, it uses FIFO in temporal order as its caching strategy. Fresh data is always written to the cache, which makes it convenient for enterprises to query the latest hot data. Relying on this design, we only need a simple SQL statement to get the desired data from memory at the fastest speed. TDengine acts like an in-memory database that supports SQL. After deploying TDengine, we got rid of a full set of Redis clusters that were used only for “last location” queries.
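A minimal sketch of a FIFO cache in write order is shown below, together with the kind of simple statement used for a last-location lookup. The `FifoCache` class is a toy stand-in rather than TDengine's cache, and the table name `v_a` is hypothetical. `LAST_ROW` is TDengine's function for fetching the most recent row of a table.

```python
from collections import deque


class FifoCache:
    """Keep the newest `capacity` rows; the oldest row is evicted first."""

    def __init__(self, capacity):
        self.rows = deque(maxlen=capacity)

    def write(self, ts, lng, lat):
        # Appending to a full deque drops the row at the other end,
        # i.e. the one that was written earliest.
        self.rows.append((ts, lng, lat))

    def last_row(self):
        """Newest row, or None if nothing has been written yet."""
        return self.rows[-1] if self.rows else None


def last_location_sql(table):
    """Statement for reading the most recent row of one vehicle's table."""
    return f"SELECT LAST_ROW(*) FROM {table}"


cache = FifoCache(capacity=2)
cache.write(1, 116.40, 39.90)
cache.write(2, 116.41, 39.91)
cache.write(3, 116.42, 39.92)  # evicts the row with ts=1
print(list(cache.rows))
print(cache.last_row())
print(last_location_sql("v_a"))
```

With time-series data the newest rows are the ones most likely to be read, so evicting by write order keeps exactly the data that "last location" queries need.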

Automated partitioning and data retention policies

We talked about the sharding mechanism of TDengine above, but how does partitioning by time work exactly? TDengine uses the days parameter to partition stored data by time range. In our case, we search location data from the past 180 days, but most queries cover monthly timeframes. So we set days to 30, which essentially divides the data into monthly blocks and makes searching more efficient.

For data that is older than 180 days, we do not perform any searches. We use the keep parameter of TDengine to set how long the data will be kept. We set keep=180, so that data older than 180 days is automatically cleared. This saves enormous amounts of storage and lowers our storage costs significantly.
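The arithmetic behind these two settings can be sketched as follows. The database name `lbs` is hypothetical, and the `KEEP` and `DAYS` option names follow the parameter names used in this post (newer TDengine releases may name the options differently). The assumption that partitions are aligned to the Unix epoch is made for illustration only.

```python
MS_PER_DAY = 86_400_000


def create_database_sql(name, keep_days, days_per_file):
    """CREATE DATABASE statement with retention and partition width in days."""
    return f"CREATE DATABASE IF NOT EXISTS {name} KEEP {keep_days} DAYS {days_per_file}"


def partition_index(ts_ms, days_per_file):
    """Index of the time partition a millisecond timestamp falls into.

    Assumes partitions are aligned to the Unix epoch (illustrative only).
    """
    return ts_ms // (days_per_file * MS_PER_DAY)


def partitions_retained(keep_days, days_per_file):
    """Number of partitions needed to cover the retention window (rounded up)."""
    return -(-keep_days // days_per_file)


print(create_database_sql("lbs", 180, 30))
print(partitions_retained(180, 30))
```

With keep=180 and days=30, the retention window spans 6 partitions, so a one-month query touches at most two of them instead of scanning everything.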

The ramifications of using TDengine

TDengine has a series of very efficient compression methods. After migrating to TDengine, our overall data storage was reduced by more than 60%, and our compute costs dropped as well. We no longer need Redis or HBase clusters, and ClickHouse is no longer needed for location storage. Resources can now be spent where they are needed most. So far TDengine has been a great help in “cost reduction”, and we expect to become even more efficient in the future.

Conclusion

From my personal experience, I think TDengine is a young and dynamic company with a very strong R&D and engineering culture. I have enjoyed and continue to enjoy discussing technology and TDengine with Jeff Tao. He is an example of a great business leader who has a vision but can also roll up his sleeves and write code. He is pragmatic and can bring substance to his vision.

I hope that TDengine will hold more Meetups in the future to encourage more exchanges, and that it will publish more information on best practices and many more examples for those who are new to time-series databases.