The Industrial Internet of Things (IIoT) is the continuous integration of collectors and controllers with sensing and monitoring capabilities, as well as mobile communications and intelligent analytics, into every aspect of industrial production. With the IIoT, traditional industrial production is entering the age of intelligence.

The data obtained from Internet-connected equipment is used to improve efficiency, enable real-time decision-making, solve key problems, and create new, innovative experiences. However, the growing number of interconnected devices and their increasing fragmentation have brought new challenges. To unlock the power of data, industry needs end-to-end, interoperable solutions that bridge the gap between equipment and the Internet and drive a new wave of innovation.

Background

SIMICAS® is a digital transformation solution that Siemens DE&DS DSM has developed for equipment manufacturers. With SIMICAS, you can remotely maintain equipment and perform intelligent analytics on service data, enabling higher efficiency and reduced costs in the post-sales phase.

This solution offers the flexibility you need to define your path to digitalization based on your own development needs. It consists of the SIMICAS intelligent gateway, SIMICAS configuration tool, SIMICAS Metrics Performer, and SIMICAS Performance Analyzer, the latter two of which are based on the open IoT system MindSphere.

System architecture

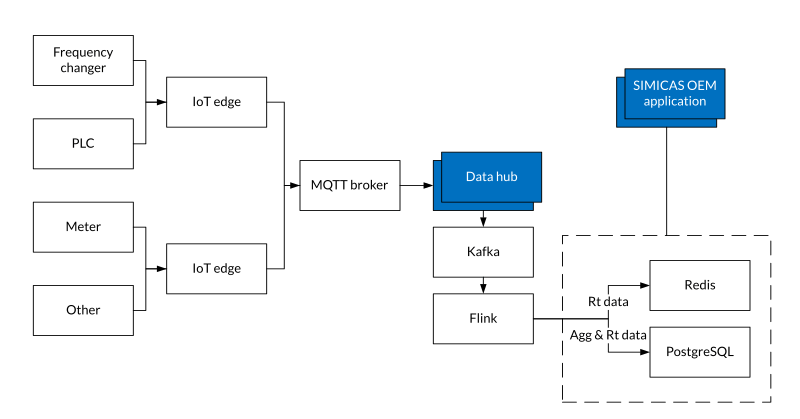

In version 1, the architecture involved Flink, Kafka, PostgreSQL, and Redis. The figure above shows the data flow.

The network gateways deployed on premises transmit equipment data to the IoT edge components, which process this data and write it to the Kafka queue. Flink then consumes the data in the queue and performs the required computations on it. The raw data and computed metrics are then written into a PostgreSQL database, with the newest data also being written to Redis for faster query response. Historical data is still queried from PostgreSQL.
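To make the version 1 read/write split concrete, here is a minimal in-memory sketch of the pattern: every row lands in the historical store, the newest value per device is also cached, and reads are routed by type. Plain Python dicts and lists stand in for Redis and PostgreSQL, and the class and method names are hypothetical; this is an illustration of the pattern, not SIMICAS code.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class V1Stores:
    """In-memory stand-ins for the v1 stores (hypothetical names).

    `latest` plays the role of Redis (newest value per device);
    `history` plays the role of PostgreSQL (every raw row).
    """
    latest: dict = field(default_factory=dict)   # device -> (ts, value)
    history: list = field(default_factory=list)  # (device, ts, value) rows

    def write(self, device: str, ts: int, value: float) -> None:
        # Dual write, as the stream job did: persist the row, then refresh the cache.
        self.history.append((device, ts, value))
        cached = self.latest.get(device)
        if cached is None or ts >= cached[0]:
            self.latest[device] = (ts, value)

    def read_latest(self, device: str) -> Optional[tuple]:
        # "Newest value" queries are answered from the cache.
        return self.latest.get(device)

    def read_range(self, device: str, start: int, end: int) -> list:
        # Historical queries still go to the relational store (half-open [start, end)).
        return [(ts, v) for d, ts, v in self.history
                if d == device and start <= ts < end]


stores = V1Stores()
stores.write("d1", 1, 10.0)
stores.write("d1", 2, 11.5)
print(stores.read_latest("d1"))       # (2, 11.5)
print(stores.read_range("d1", 0, 2))  # [(1, 10.0)]
```

Keeping two stores consistent through a dual write is part of the operational overhead that the version 2 architecture removes by relying on the caching built into the database.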

Business challenges

After SIMICAS 1.0 was launched, two pain points were identified:

First, introducing Flink and Kafka into the architecture made deploying the solution cumbersome and required more server resources. Second, application development became more complex because databases and tables had to be partitioned in PostgreSQL to store the large volume of incoming data.

The solution team was therefore tasked with finding ways to reduce complexity, hardware resource usage, and customer costs.

Component selection

It was decided to rearchitect the SIMICAS solution to resolve the identified pain points and align better with future development plans. A component was needed that met the following requirements:

- High performance: millions of concurrent writes, tens of thousands of concurrent reads, and the ability to handle a large number of aggregate queries without performance deterioration

- High availability: cluster deployment, horizontal scaling, and no single points of failure

- Reduced cost: low hardware resource requirements and a high compression ratio

- High level of integration: message queue, stream processing, and caching features included with the database as a single solution

The solution team compared various open-source data platforms and time-series database (TSDB) management systems and finally selected TDengine as the only platform that offered built-in message queuing, stream processing, and caching.

Implementation

Data flow

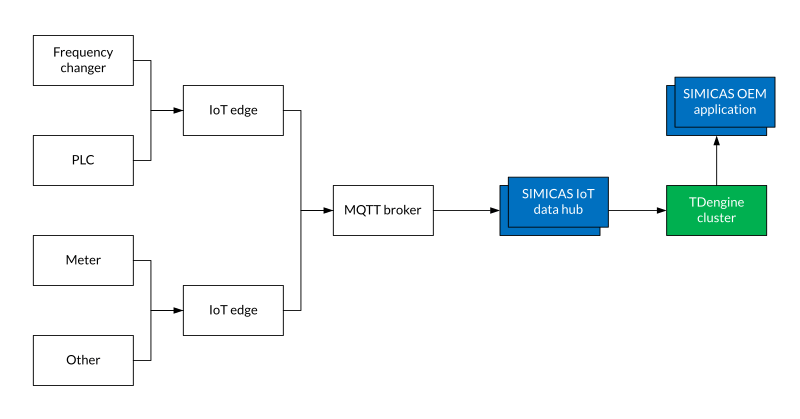

In SIMICAS OEM 2.0, three components – Flink, Kafka, and Redis – were replaced by TDengine, greatly simplifying the system architecture.

Data model

Creating the database

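The solution uses a three-node TDengine cluster. In the cluster, a database is created that retains data for 712 days (roughly two years, keep 712), replicates data to all three nodes (replica 3), and allows existing records to be updated, including updates to only some columns (update 2).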

```sql
create database if not exists simicas_data keep 712 replica 3 update 2;
```

Creating the table for real-time metrics

A separate supertable is created for each type of equipment (product) that the solution handles. Each device then gets its own subtable, created from its product's supertable.
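The DDL for this model is templated with `${...}` placeholders. As a minimal sketch, assuming product keys and device codes contain only letters, digits, and underscores, a Python service could fill these templates with `string.Template` (which happens to use the same `${name}` syntax) after validating its inputs, since `device_code` ends up both in a table name and in a quoted `binary` tag value. The function and constant names are hypothetical, and column types are passed through unvalidated on the assumption that they come from trusted product metadata.

```python
import re
from string import Template

# Same shape as this section's templates; string.Template uses the same ${name} syntax.
STABLE_TMPL = Template(
    "create stable if not exists product_${productKey} "
    "(ts timestamp,linestate bool,${device_properties}) "
    "tags (device_code binary(64));"
)
TABLE_TMPL = Template(
    "create table if not exists device_${device_code} "
    "using product_${productKey} tags ('${device_code}');"
)

# Assumption: keys/codes are restricted to [A-Za-z0-9_] so they are safe both
# inside table names and inside the quoted binary tag value.
SAFE_TOKEN = re.compile(r"[A-Za-z0-9_]+")
COLUMN_NAME = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def render_ddl(product_key: str, device_code: str,
               properties: list[tuple[str, str]]) -> tuple[str, str]:
    """Return (supertable DDL, device subtable DDL) for one device."""
    for token in (product_key, device_code):
        if not SAFE_TOKEN.fullmatch(token):
            raise ValueError(f"unsafe identifier: {token!r}")
    if not properties:
        raise ValueError("at least one metric column is required")
    for name, _ in properties:
        if not COLUMN_NAME.fullmatch(name):
            raise ValueError(f"invalid column name: {name!r}")
    # Column types (e.g. "float") are assumed to come from trusted metadata.
    columns = ",".join(f"{name} {col_type}" for name, col_type in properties)
    stable_sql = STABLE_TMPL.substitute(productKey=product_key,
                                        device_properties=columns)
    table_sql = TABLE_TMPL.substitute(productKey=product_key,
                                      device_code=device_code)
    return stable_sql, table_sql


stable_sql, table_sql = render_ddl("pk1", "dev001",
                                   [("voltage", "float"), ("current", "float")])
print(stable_sql)
print(table_sql)
```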

```sql
create stable if not exists product_${productKey} (ts timestamp,linestate bool,${device_properties}) tags (device_code binary(64));
create table if not exists device_${device_code} using product_${productKey} tags ('${device_code}');
```

Creating the table for status data

A single supertable is created to store the status data of all equipment in the solution.
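Once a device's status subtable exists, each status record becomes one row in it. A minimal sketch, assuming millisecond epoch timestamps (TDengine's default precision) and the hypothetical names `StatusSample` and `render_status_insert`, renders the INSERT with values in the status table's column order: ts, linestate, run_status, error_code, run_total_time, stop_total_time, error_total_time. The units of the accumulated times are not stated in the source, so the sketch treats them as plain integers.

```python
import re
from dataclasses import dataclass

SAFE_TOKEN = re.compile(r"[A-Za-z0-9_]+")


@dataclass
class StatusSample:
    ts_ms: int             # epoch milliseconds (assumed database precision)
    linestate: bool
    run_status: int
    error_code: str
    run_total_time: int
    stop_total_time: int
    error_total_time: int


def render_status_insert(device_code: str, s: StatusSample) -> str:
    """Build an INSERT for the device's status subtable (columns in DDL order)."""
    if not SAFE_TOKEN.fullmatch(device_code):
        raise ValueError(f"unsafe device code: {device_code!r}")
    # error_code is a binary(64) column: allow an empty code, forbid quotes, cap the length.
    if len(s.error_code) > 64 or (s.error_code and not SAFE_TOKEN.fullmatch(s.error_code)):
        raise ValueError(f"unsupported error code: {s.error_code!r}")
    values = ",".join([
        str(s.ts_ms),
        "true" if s.linestate else "false",
        str(s.run_status),
        f"'{s.error_code}'",
        str(s.run_total_time),
        str(s.stop_total_time),
        str(s.error_total_time),
    ])
    return f"insert into device_state_{device_code} values ({values});"


sample = StatusSample(1700000000000, True, 1, "E00", 3600, 120, 0)
print(render_status_insert("dev001", sample))
# insert into device_state_dev001 values (1700000000000,true,1,'E00',3600,120,0);
```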

```sql
create stable if not exists device_state (ts timestamp,linestate bool,run_status int,error_code binary(64),run_total_time int,stop_total_time int,error_total_time int) tags (device_code binary(64),product_key binary(64));
create table if not exists device_state_${device_code} using device_state tags ('${device_code}','${productKey}');
```

Metric calculation

Java Expression Language (JEXL) is used to define expressions that the system converts into SQL query tasks. The results of these queries are used to calculate the metric, which is then sent to the frontend for display.

As an example, the following JEXL expression finds the average voltage of all devices in project abc whose run_status is 1:

```
avg(voltage,run_status=1 && project=abc)
```

The system breaks this expression down into the following SQL queries:

1. Query all devices whose run_status is 1 and whose project is abc.

2. From the results of step 1, query the latest value of the voltage field for each device.

3. From the results of step 2, calculate the average value.
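The three steps can be sketched in memory to show how one expression fans out into a filter, a per-device "latest value" lookup, and an aggregate. This is a hypothetical illustration: it parses only the simple `func(field, key=value && ...)` form used in this article (it is not a JEXL parser and does not use the Apache Commons JEXL library), and it assumes `run_status` and `project` are available as current per-device attributes, which the article does not specify. Readings may contain `None` where a device reported nothing.

```python
import re
from statistics import mean

# Hypothetical parser for: func(field, key=value && key=value ...)
# Not a general JEXL parser (real JEXL uses == for equality).
EXPR = re.compile(r"\s*(\w+)\(\s*(\w+)\s*,(.+)\)\s*")
AGGREGATES = {"avg": mean, "min": min, "max": max, "sum": sum}


def parse(expr: str):
    match = EXPR.fullmatch(expr)
    if match is None:
        raise ValueError(f"unsupported expression: {expr!r}")
    func, field, cond_text = match.groups()
    conditions = {}
    for clause in cond_text.split("&&"):
        key, value = (part.strip() for part in clause.split("=", 1))
        conditions[key] = value
    return func, field, conditions


def latest_non_null(samples):
    """Value of the newest (ts, value) sample whose value is not None, or None."""
    present = [(ts, v) for ts, v in samples if v is not None]
    return max(present)[1] if present else None


def evaluate(expr, attributes, readings):
    func, field, conditions = parse(expr)
    # Step 1: select devices matching every condition.
    selected = [dev for dev, attrs in attributes.items()
                if all(str(attrs.get(k)) == v for k, v in conditions.items())]
    # Step 2: latest non-null value of the field for each selected device.
    latest = [latest_non_null(readings.get(dev, {}).get(field, []))
              for dev in selected]
    values = [v for v in latest if v is not None]
    # Step 3: aggregate across devices.
    return AGGREGATES[func](values) if values else None


attributes = {
    "d1": {"project": "abc", "run_status": 1},
    "d2": {"project": "abc", "run_status": 1},
    "d3": {"project": "abc", "run_status": 0},
    "d4": {"project": "xyz", "run_status": 1},
}
readings = {
    "d1": {"voltage": [(1, 220.0), (2, 230.0)]},
    "d2": {"voltage": [(1, 210.0), (2, None)]},
    "d3": {"voltage": [(1, 100.0)]},
    "d4": {"voltage": [(1, 50.0)]},
}
print(evaluate("avg(voltage,run_status=1 && project=abc)", attributes, readings))  # 220.0
```

Only d1 and d2 pass step 1; d2's newest sample is null, so its latest non-null voltage is 210.0, and the average of 230.0 and 210.0 is 220.0.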

With multithreading and the high-performance querying offered by TDengine, the P99 latency for a single KPI is under 100 ms.
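The article does not say where the multithreading is applied. Assuming the per-device queries of step 2 are fanned out across a thread pool, the sketch below shows that pattern together with a nearest-rank P99 over measured latencies. `fake_latest_value` is a hypothetical stand-in for a real database call, so the printed number says nothing about TDengine's actual performance.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fan_out(query, devices, max_workers=8):
    """Run one per-device query on a thread pool; results keep input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(query, devices))


def timed_ms(fn, *args):
    """Call fn(*args) and return (result, elapsed milliseconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, (time.perf_counter() - start) * 1000.0


def p99(latencies_ms):
    """Nearest-rank 99th percentile: the ceil(0.99 * n)-th smallest sample."""
    if not latencies_ms:
        raise ValueError("no samples")
    ordered = sorted(latencies_ms)
    rank = (99 * len(ordered) + 99) // 100  # integer ceiling of 0.99 * n
    return ordered[rank - 1]


def fake_latest_value(device):
    # Hypothetical stand-in for a "latest value" query against one device table.
    time.sleep(0.01)
    return len(device)


devices = [f"device_{i}" for i in range(20)]
samples = [timed_ms(fan_out, fake_latest_value, devices)[1] for _ in range(5)]
print(f"P99 over {len(samples)} runs: {p99(samples):.1f} ms")
```

Threads suit this workload because each per-device query spends most of its time waiting on I/O, so the KPI latency approaches that of the slowest batch rather than the sum of all device queries.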

Discovered issues

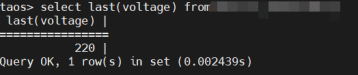

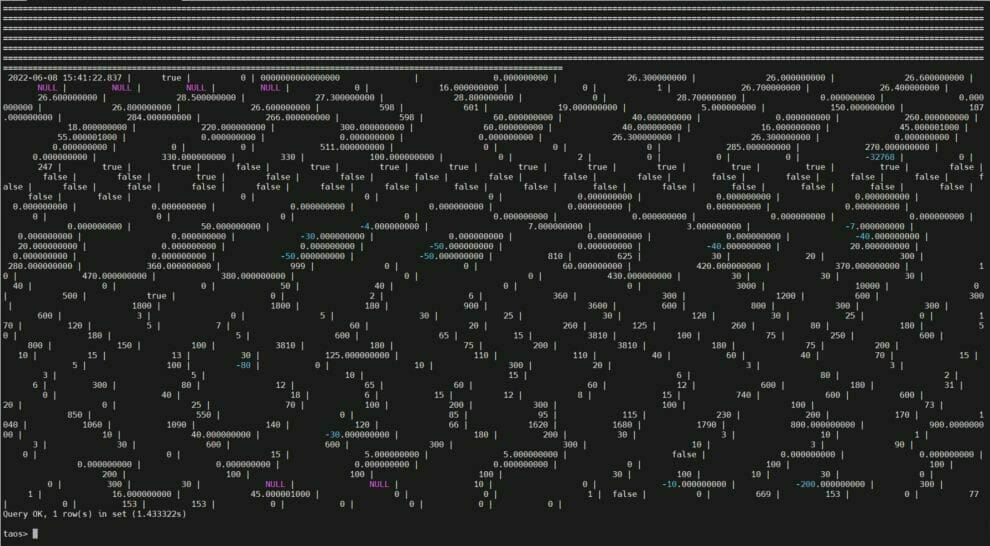

The best practices published by TDengine recommend a multi-column data model, in which each metric is stored as a column of a supertable. However, in environments with a large number of metrics, this makes SQL statements excessively long. In addition, devices that upload data only when a value changes leave many null values in their tables, which makes the SELECT LAST(*) FROM device_xxx statement inefficient.

Consultation with the TDengine team clarified that, for each column, the LAST function searches backward until it finds a non-null value. In the current version of TDengine, this means that LAST may read data that is not in the cache.
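A simplified model shows why change-only uploads hurt this query. The sketch below, which models the behavior described by the TDengine team rather than TDengine's storage engine, walks backward through one device's rows for every column and counts how many rows it visits. A column that was reported once, long ago, forces a scan of nearly the whole table, and in the real system such deep scans can reach data outside the cache.

```python
def last_star(rows, columns):
    """Simplified model of SELECT LAST(*) on one device table.

    rows are ordered oldest -> newest; None means "no value reported".
    For every column, scan backward until a non-null value is found.
    Returns (latest values, total row visits across all columns).
    """
    result, visits = {}, 0
    for col in columns:
        result[col] = None
        for row in reversed(rows):
            visits += 1
            if row.get(col) is not None:
                result[col] = row[col]
                break
    return result, visits


# Change-only upload: voltage was reported once, current on every sample.
sparse = [{"ts": 1, "voltage": 220.0, "current": 5.0}] + [
    {"ts": t, "voltage": None, "current": 5.0 + t / 10} for t in range(2, 1001)
]
print(last_star(sparse, ["voltage", "current"]))
# ({'voltage': 220.0, 'current': 105.0}, 1001)
```

With every column populated in every row, the same call would need only one visit per column.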

To resolve the issue with long SQL statements, an upper limit was set on the number of metrics that a single device can have. For the second issue, the method of uploading data to the system was changed.

These issues could have been avoided with better consideration of the production environment. Going forward, SIMICAS supports both single-column and multi-column data models so that customers can choose the model that best fits their use case.
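The article does not describe the single-column schema SIMICAS uses. As an assumption for illustration, treat it as one narrow record per metric value, `(ts, device, metric, value)`. The hypothetical helpers below convert multi-column rows into that shape and compute the latest value per metric; because nulls are simply never stored, finding the latest value needs no backward search past empty cells.

```python
def to_single_column(device, rows):
    """Multi-column rows -> narrow (ts, device, metric, value) records; nulls are dropped."""
    narrow = []
    for row in rows:
        for metric, value in row.items():
            if metric != "ts" and value is not None:
                narrow.append((row["ts"], device, metric, value))
    return narrow


def latest_per_metric(records):
    """Newest (ts, value) per metric; no null-skipping needed in the narrow model."""
    latest = {}
    for ts, _device, metric, value in records:
        if metric not in latest or ts >= latest[metric][0]:
            latest[metric] = (ts, value)
    return latest


wide = [
    {"ts": 1, "voltage": 220.0, "current": None},
    {"ts": 2, "voltage": None, "current": 5.1},
]
narrow = to_single_column("d1", wide)
print(narrow)                     # [(1, 'd1', 'voltage', 220.0), (2, 'd1', 'current', 5.1)]
print(latest_per_metric(narrow))  # {'voltage': (1, 220.0), 'current': (2, 5.1)}
```

The trade-off runs the other way for reads that need many metrics of one device at once: the narrow model stores more rows and requires a pivot, which is why offering both models lets each customer pick what suits their devices.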

Finally, the maintenance and monitoring capabilities of TDengine are weaker than those of its competitors. However, the recently released TDinsight has improved this situation, and the SIMICAS team plans to test it in the next phase.

Conclusion

Overall, the SIMICAS team was greatly impressed with the performance of TDengine and the level of support received. The simplified architecture enabled by TDengine will benefit all SIMICAS customers, and we are excited to continue working with TDengine in the future.