OPPO’s smart wearables generate vast amounts of time-series data. This data must be written at very high frequency and in very large volumes, and it is accompanied by supplementary data that is updated, though not in real time. OPPO’s Smart IoT Cloud Team had been running a MongoDB/MySQL cluster solution that could not keep up with the data volume without constant storage expansion. Beyond the storage demands, the MySQL and MongoDB clusters were relatively independent of one another, which made them costly to maintain and develop against. In response to these expensive pain points, the Smart IoT Cloud Team began evaluating and comparing several Time-Series Database (TSDB) products, with the aim of finding a cost-effective and lasting solution.

| | TSHouse 2.0 | TDengine | InfluxDB 2.0 |
|---|---|---|---|
| Storage Engine | LSM | Data stored in columns; high compression ratio | LSM |
| High Availability | Supported | Native | Enterprise edition |
| Out-of-Order Writes | Not supported | Supported | Supported but affects performance |
| Permanent Storage | Supported | Supported | Enterprise edition |

The table above summarizes our research on the three TSDBs we investigated. TSHouse is an in-house time-series database for cloud monitoring. Its bottom layer is the Prometheus TSDB storage engine, which did not support historical data or out-of-order writes. InfluxDB performed secondary compression on historical data, which affected performance. Neither TSHouse nor InfluxDB could meet our stringent business needs. TDengine, in addition to being open source, can write historical data without performance degradation and has very high compression ratios. This is exactly what we needed: to cut storage costs while maintaining or improving performance. We therefore chose to carry out more detailed product research and performance testing on TDengine.

TDengine Product and Capability Research

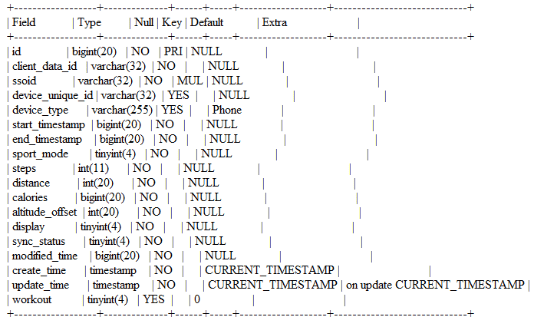

The structure of a data table is as follows:

For comparison, we wrote 600,000 rows of data to a MySQL container (since some business systems are deployed on MySQL clusters) and to a TDengine container, each with 4 cores and 12 GB of RAM, and observed CPU, memory, and disk usage. The test showed that while CPU and memory consumption were basically the same, TDengine used about 1/4 of the storage that MySQL did.

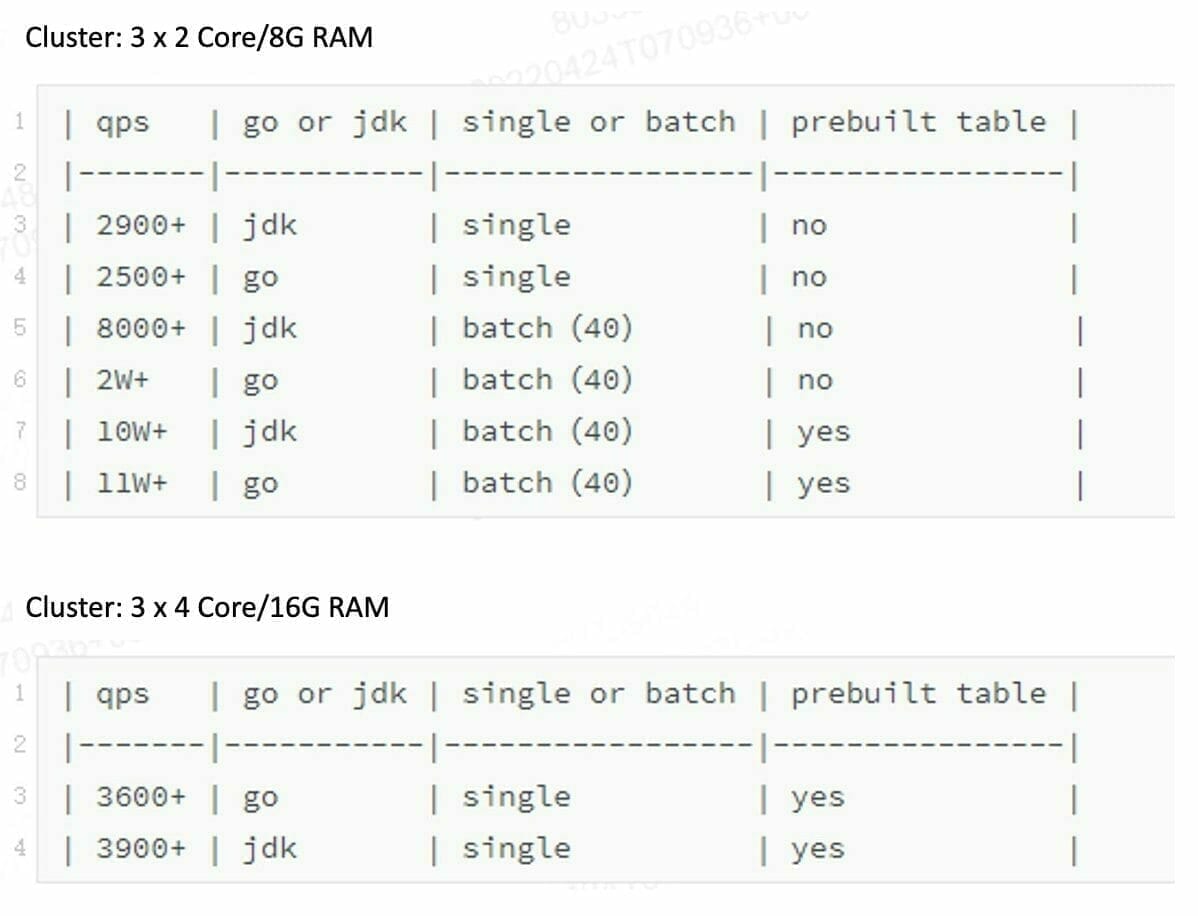

We also conducted TDengine write tests in different containers and on physical machines; some of the results are shown in the tables below:

Note that testing should be carried out for each business scenario to determine deployment parameters that meet its requirements. Throughout the testing process, TDengine engineers answered our questions and helped us promptly.

Thoughts on TDengine Architecture and Features

We verified some key features based on TDengine’s rich product manuals. These include features relevant to data management, data writing, aggregate computing, cluster expansion, and high-availability and reliability.

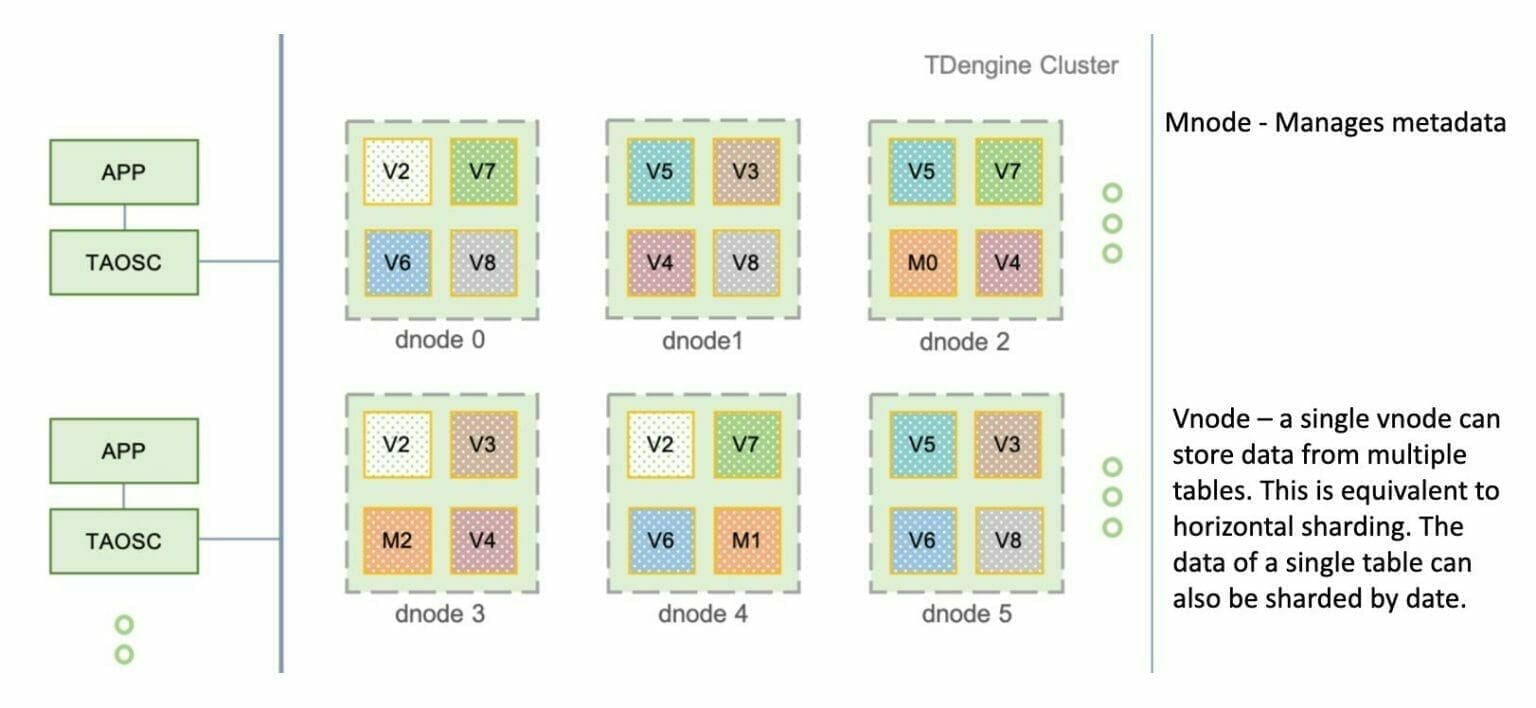

In terms of data management, TDengine’s metadata and business data are separated. As shown in the figure below, the Mnode is responsible for managing metadata information; a single Vnode can store data from multiple tables, which is equivalent to horizontal sharding. At the same time, the data within a single table can continue to be sharded by date.
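The idea that each Vnode holds several whole tables can be illustrated with a small sketch. The hash-based placement rule, the vnode count, and the table names below are assumptions made for illustration; they are not taken from TDengine's actual placement logic.

```python
import zlib

NUM_VNODES = 3  # hypothetical vnode count, chosen only for this example


def vnode_for_table(table_name: str, num_vnodes: int = NUM_VNODES) -> int:
    """Map a table to a vnode with a stable hash (an assumed placement rule)."""
    return zlib.crc32(table_name.encode("utf-8")) % num_vnodes


def group_tables_by_vnode(table_names, num_vnodes: int = NUM_VNODES):
    """Return {vnode_id: [table, ...]} so each vnode holds several whole tables."""
    placement = {v: [] for v in range(num_vnodes)}
    for name in table_names:
        placement[vnode_for_table(name, num_vnodes)].append(name)
    return placement


if __name__ == "__main__":
    # Hypothetical per-device table names.
    tables = [f"watch_{i:04d}" for i in range(12)]
    for vnode_id, names in group_tables_by_vnode(tables).items():
        print(f"V{vnode_id}: {names}")
```

Because a table is never split across vnodes in this sketch, all rows of one device stay together, which is the horizontal-sharding property the paragraph describes.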

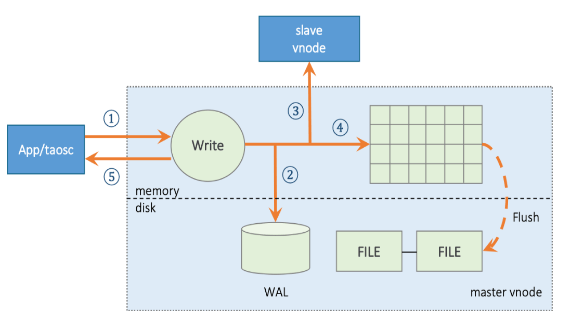

The write path is similar to that of an LSM (Log-Structured Merge) tree: data goes to the WAL (Write-Ahead Log) first, then to a memory block, and finally to a file on disk.
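The WAL, memory block, and disk file sequence can be sketched as a toy model. This is a generic LSM-style illustration, not TDengine's implementation; the class name, the `flush_threshold` knob, and the in-memory lists standing in for on-disk structures are all assumptions.

```python
class WritePathSketch:
    """Toy model of the WAL -> memory block -> disk file sequence."""

    def __init__(self, flush_threshold: int = 3):
        self.flush_threshold = flush_threshold  # hypothetical tuning knob
        self.wal = []         # append-only log; a real WAL lives on disk
        self.memory = []      # in-memory block of rows not yet persisted
        self.disk_files = []  # each flush produces one time-sorted batch

    def write(self, timestamp: int, value: float) -> None:
        # 1. Record the write in the log first so it can be replayed after a crash.
        self.wal.append((timestamp, value))
        # 2. Buffer the row in memory, where it is cheap to accept.
        self.memory.append((timestamp, value))
        # 3. Once the buffer is full, persist it as one sorted batch.
        if len(self.memory) >= self.flush_threshold:
            self.flush()

    def flush(self) -> None:
        if not self.memory:
            return
        self.disk_files.append(sorted(self.memory))
        self.memory.clear()
        # The rows now live in a data file, so the log entries are no longer needed.
        self.wal.clear()


if __name__ == "__main__":
    db = WritePathSketch(flush_threshold=3)
    for ts, value in [(30, 1.0), (10, 2.0), (20, 3.0)]:
        db.write(ts, value)
    print(db.disk_files)
```

Sorting at flush time is what lets rows arrive in any order while the persisted batch stays in ascending time order.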

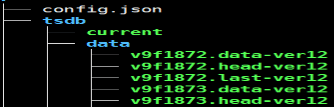

In TDengine, data is stored contiguously in blocks in a file. Each data block contains only the data of a single table, and the data is arranged in ascending order of time, which is the primary key. Data is stored in columns in data blocks, so that data of the same type can be stored together. This improves the compression ratio significantly, and saves storage space.
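To see how layout alone can change compressibility, the following sketch packs the same synthetic readings in a row layout and in a column layout and compresses both. The data is made up, and general-purpose `zlib` stands in for TDengine's own per-type codecs, so the numbers it prints are only indicative.

```python
import struct
import zlib

# Synthetic readings (made-up data): timestamp in ms, heart rate, device status.
rows = [(1_600_000_000_000 + i * 1000, 60 + (i % 5), 1) for i in range(10_000)]

# Row layout: the three fields of each reading sit next to each other.
row_major = b"".join(struct.pack("<qii", ts, hr, st) for ts, hr, st in rows)

# Column layout: all timestamps, then all heart rates, then all statuses.
n = len(rows)
col_major = (
    struct.pack(f"<{n}q", *(r[0] for r in rows))
    + struct.pack(f"<{n}i", *(r[1] for r in rows))
    + struct.pack(f"<{n}i", *(r[2] for r in rows))
)

row_size = len(zlib.compress(row_major))
col_size = len(zlib.compress(col_major))
print(f"raw bytes (both layouts):  {len(row_major)}")
print(f"compressed, row layout:    {row_size}")
print(f"compressed, column layout: {col_size}")
```

Both layouts contain exactly the same bytes in a different order; any difference in the compressed sizes comes purely from placing values of the same type next to each other.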

We kept the default value of 10 for the DAYS parameter, which tells TDengine the time range of the data stored in a single file. With this default, TDengine keeps 10 days’ worth of data in a single data file. Data is compressed and only ever appended to a data file, which is extremely advantageous for the performance of historical and out-of-order writes. It is also well suited to IoT use cases: resource consumption after writing is reduced, ensuring better read and write performance.
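A minimal sketch of how a time window of DAYS = 10 could route rows to data files is shown below. It assumes windows are aligned to the Unix epoch and that timestamps are in milliseconds; the function names are hypothetical and the real file layout may differ.

```python
from collections import defaultdict

MS_PER_DAY = 24 * 60 * 60 * 1000
DAYS = 10  # the default value mentioned in the text


def file_index(timestamp_ms: int, days: int = DAYS) -> int:
    """Index of the time window (and so of the data file) a row falls into."""
    return timestamp_ms // (days * MS_PER_DAY)


def route_rows(rows, days: int = DAYS):
    """Group (timestamp_ms, value) rows by file window, keeping arrival order."""
    files = defaultdict(list)
    for ts, value in rows:
        files[file_index(ts, days)].append((ts, value))
    return dict(files)


if __name__ == "__main__":
    day = MS_PER_DAY
    # The second row arrives late: its timestamp is much older than its neighbours.
    incoming = [(25 * day, "a"), (3 * day, "late"), (26 * day, "b")]
    for index, batch in sorted(route_rows(incoming).items()):
        print(index, batch)
```

In this model a late row is simply appended to the file for its own window, so files covering other time ranges are left untouched.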

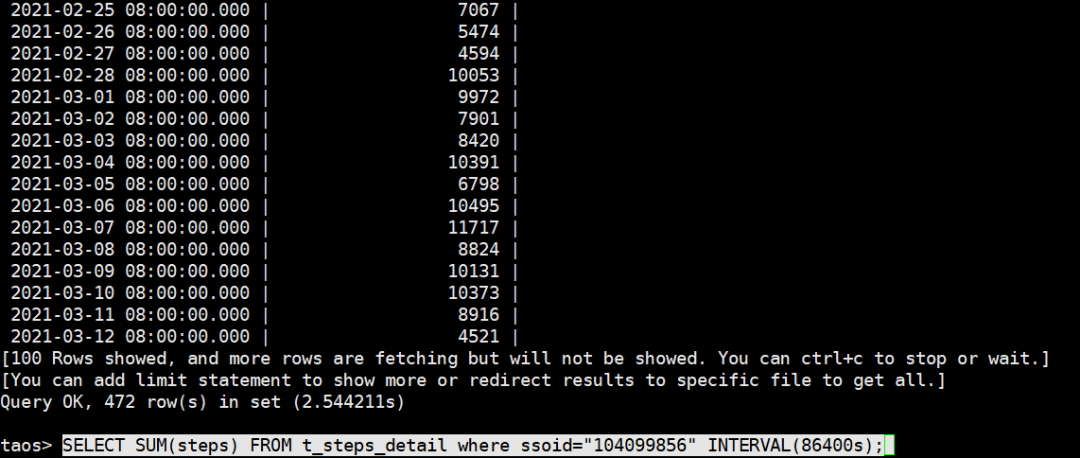

TDengine In Practice

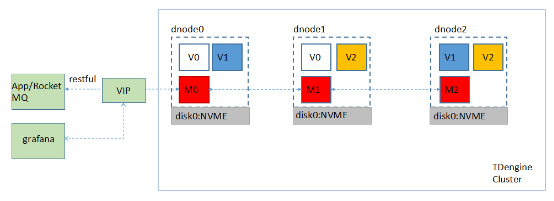

We deployed a TDengine cluster in the production environment based on our requirements:

The cluster is composed of 3 AWS EC2 containers (8 cores, 32 GB RAM, 3.5 TB NVMe disks), configured as 3 nodes with 2 replicas. The write side sends RESTful requests to VIP nodes, which forward them to the database service. In the figure, V0-V2 are 3 groups of data shards with 2 copies each, and M0-M2 are 1 group of management nodes with 3 copies.
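As an illustration of the write side, the sketch below builds a request for TDengine's REST interface, which accepts SQL in the body of a POST to `/rest/sql` (port 6041 by default) with HTTP Basic authentication. The host name, database, table, column values, and credentials are placeholders, not details of OPPO's deployment, and the request is only constructed, not sent.

```python
import base64
import urllib.request


def build_sql_request(host: str, sql: str, user: str, password: str,
                      port: int = 6041) -> urllib.request.Request:
    """Build (but do not send) a POST to the /rest/sql endpoint."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"http://{host}:{port}/rest/sql",
        data=sql.encode("utf-8"),
        headers={"Authorization": f"Basic {token}"},
        method="POST",
    )


# Hypothetical VIP host, database, table, and columns, for illustration only.
request = build_sql_request(
    host="tdengine-vip.example.internal",
    sql="INSERT INTO wearables.watch_0001 VALUES (NOW, 72, 1)",
    user="root",
    password="taosdata",
)
print(request.get_method(), request.full_url)
# Sending it would be: urllib.request.urlopen(request, timeout=5)
```

Using plain HTTP on the write side means the writers need no native client library, and a VIP in front of the nodes can spread requests across the cluster.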

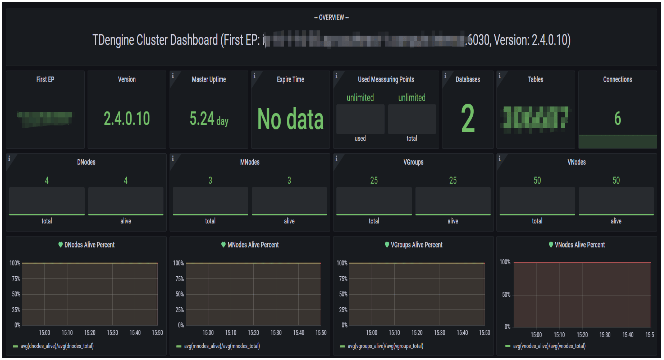

The configured Grafana panel looks like this:

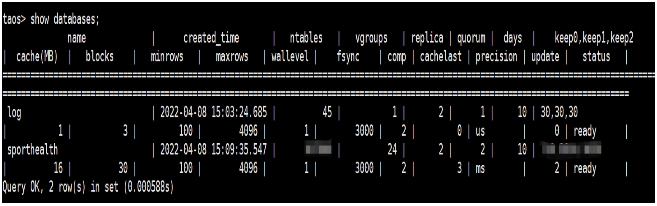

The background table structure is as follows:

At present, real-time data is being written to TDengine and historical data is also being imported synchronously.

Performance

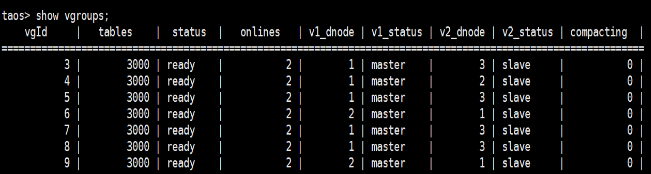

- Use the last_row() function to query the latest status of 380,000 devices at one time.
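A query of this kind might look like the sketch below. The supertable name is hypothetical, and the `GROUP BY TBNAME` form is assumed to match the TDengine version in use (newer releases may prefer a different grouping clause), so treat the statement as an illustration rather than the exact query we ran.

```python
def latest_status_query(super_table: str) -> str:
    """Build SQL returning the newest row of every device table under a supertable."""
    # Reject anything that is not a plain (optionally db-qualified) identifier.
    if not super_table.replace("_", "").replace(".", "").isalnum():
        raise ValueError(f"unexpected characters in table name: {super_table!r}")
    return f"SELECT LAST_ROW(*) FROM {super_table} GROUP BY TBNAME"


if __name__ == "__main__":
    # "wearables.device_status" is a made-up supertable name.
    print(latest_status_query("wearables.device_status"))
```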

In terms of current storage, we roughly estimated the compression ratio for the 6.6 TB cluster in the production environment by comparing the data size before and after, which gives about 6.6/0.4. In other words, the data takes approximately 15 times less space in TDengine than in MySQL.

Our original cluster had no replicas; we simply deployed 5 sub-databases on MySQL, using 5 machines, each with 4 cores, 8 GB of RAM, and a 2 TB disk. After deploying TDengine, we now run 3 machines, each with 8 cores, 32 GB of RAM, and a 2 TB disk. With TDengine, we have automated replicas and unified capabilities, as well as the ability to use hybrid cloud. This optimizes and improves the entire platform and will also help us scale in the future.

Summary

Throughout our journey, from exploration and due diligence through to deployment, the TDengine staff provided us with ample and timely assistance. They were a great help in building the backend time-series data capability for our business. TDengine currently holds data from one overseas cluster, and we will add more clusters that feed data into TDengine as our business grows and scales.