Project Background

NIO Power has invested heavily in EV charging infrastructure to give customers a faster and more convenient charging experience. As of May 6, 2022, NIO Power had deployed 916+ battery-swap stations and 787+ power charger stations across the country, with more than 3,404 DC Superchargers and 3,461 regular chargers. In addition, 96,000+ home chargers have been installed.

All of this charging infrastructure requires equipment monitoring and analysis. The equipment collects data and transmits it to the cloud for storage, so that the business operation team has both a real-time and a historical view of the infrastructure.

Status Quo

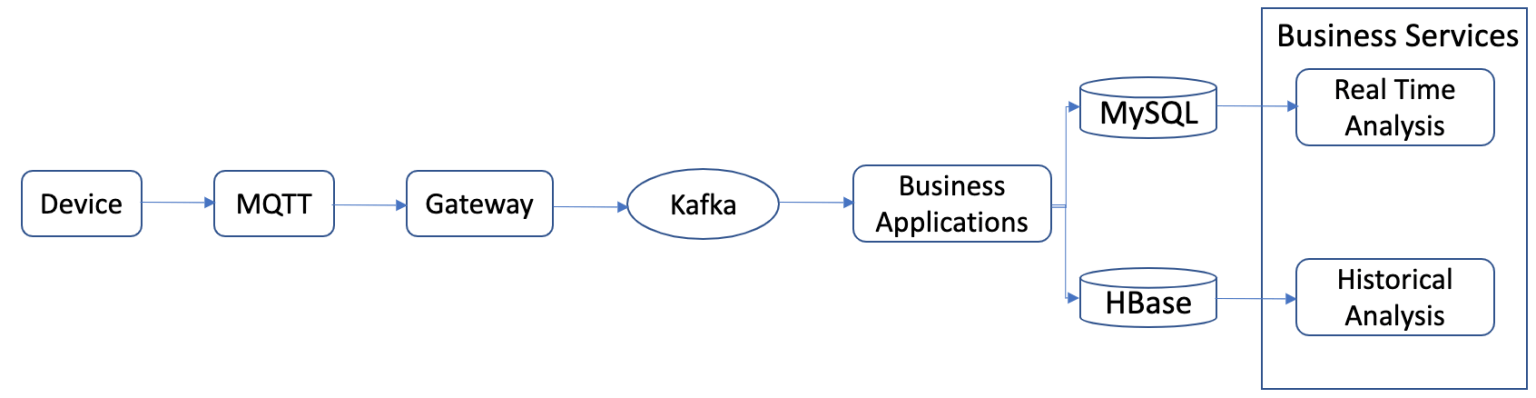

Originally, the system consisted of MySQL + HBase for data storage. MySQL stores the latest real-time data from the devices, and HBase stores the historical data. The high-level architecture is as follows:

We originally chose HBase to store historical data for several reasons:

- HBase is widely used in the big data field and is suitable for storing massive amounts of data. It has good write performance.

- It supports adding columns dynamically, which makes data model changes convenient and flexible.

- Its underlying storage model is key-value, so it handles sparse data well: empty values do not occupy storage space.

- The team has relatively long experience with HBase.

Pain Points

In the early days, when the number of devices was relatively small and the amount of data was not large, HBase performed well and could meet business needs.

With the rapid rollout of equipment in swapping stations and supercharging stations across the country, the number of devices has grown substantially and the volume of accumulated data is increasing exponentially. This created a bottleneck in querying historical data, and our business operation needs could no longer be met. The problems were mainly the following:

- HBase only supports indexing by row key, which is very limiting. Queries that fit the row-key design run efficiently, but complex queries can require full table scans.

- A secondary index can work around the row-key limitation, but it requires additional development or extra middleware, which increases system complexity.

- As the data volume of an HBase table grows, automatic partitioning (region splitting) is triggered, which degrades write performance. Pre-partitioning when creating a table avoids this, but the partitions are very painful to adjust, and the table must be rebuilt for changes to take effect.

- HBase is not suited to large-scale scan queries, which perform relatively poorly.

- HBase does not support aggregate queries. Because queries over long time spans return very large amounts of raw data, charts for those ranges cannot be rendered.

- HBase deployment depends on ZooKeeper, which makes operation and maintenance very costly.

Development Plan

Technology Selection

To address these pain points, we turned our attention to time-series databases (TSDBs), which are popular in and better suited to the IoT field. After thoroughly investigating and comparing multiple products, we decided to replace HBase with TDengine.

We also considered OpenTSDB, an excellent and mature time-series database that we already use internally for other applications. It addresses some of the pain points we encountered with HBase:

- OpenTSDB adds optimizations on top of HBase, including metadata mapping and a compression mechanism, which greatly reduce data storage space.

- OpenTSDB supports aggregate queries, which meets the business requirement of querying over longer time spans.

However, OpenTSDB still depends on HBase, so it inherits the complexity of the HBase architecture.

TDengine was a much better fit for our business requirements. In addition to its extremely high write and read performance, its features address our pain points directly:

- TDengine introduces the concept of a supertable. A supertable corresponds to a device type, and a subtable that inherits the supertable's schema is created for each device. This works well because devices of the same type naturally share a data model, and each device's data is kept separate rather than mixed with data from other devices.

- Multi-tier storage is built in, so data can be placed on different storage media depending on its age. Recent data is accessed frequently and can be kept on SSDs for performance, while older data can be moved to HDDs to save cost.

- TDengine does not depend on any third-party software and is easy to install and deploy as a cluster. Scalability is native, so the cluster can be expanded flexibly.

- TDengine provides a variety of aggregate functions for aggregate queries.
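Aggregation also solves the chart-rendering problem for long time spans. The sketch below shows the idea with time-window averaging, the kind of downsampling TDengine performs server-side when an aggregate function such as `AVG` is combined with an `INTERVAL` clause. The function name `downsample_avg` and the sample data are hypothetical and only illustrate why a chart receives one value per window instead of every raw point. This is not TDengine's implementation.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple


def downsample_avg(points: Iterable[Tuple[int, float]], window_ms: int) -> List[Tuple[int, float]]:
    """Average (timestamp_ms, value) points into fixed, non-overlapping windows.

    Hypothetical helper: each window is keyed by its start timestamp, similar to
    the rows a time-window aggregate query returns. Empty windows are omitted.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for ts, value in points:
        start = ts - ts % window_ms  # align the point to the start of its window
        sums[start] += value
        counts[start] += 1
    return [(start, sums[start] / counts[start]) for start in sorted(sums)]
```

For example, one day of per-second readings (86,400 points) reduced with 10-minute windows (`window_ms=600_000`) yields 144 values, which is small enough to render as a chart.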

Pre-Testing

We ran some simple performance tests to confirm that TDengine could meet our business needs.

Test Preparation

- Single-node deployment

- 8 CPU cores, 32 GB RAM, 500 GB storage

- Default configuration

- Data written through the RESTful API
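The RESTful write path submits SQL text over HTTP. The following minimal sketch only builds such a request. It assumes TDengine's REST endpoint at `http://<host>:6041/rest/sql` with HTTP Basic authentication and the default `root`/`taosdata` credentials. The host name `tdengine-host` and the helper name `build_rest_request` are hypothetical. Passing the result to `urllib.request.urlopen` would actually send it.

```python
import base64
import urllib.request


def build_rest_request(sql: str, host: str = "tdengine-host", port: int = 6041,
                       user: str = "root", password: str = "taosdata") -> urllib.request.Request:
    """Build (but do not send) a POST request that submits one SQL statement.

    Assumptions: REST endpoint path /rest/sql, default port 6041, Basic auth
    with default credentials; 'tdengine-host' is a hypothetical host name.
    """
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"http://{host}:{port}/rest/sql",
        data=sql.encode("utf-8"),  # the request body is the raw SQL text
        headers={"Authorization": f"Basic {token}"},
        method="POST",
    )
```

Because every write is a full HTTP round trip, sending one request per message is expensive. This is consistent with the test results below, where batching many rows into each request was needed.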

Testing Scenarios

We simulated 10,000 devices sending approximately 4,000 messages per second.

- Define the supertable as follows:

```sql
CREATE STABLE device_data_point_0 (ts timestamp, d1 nchar(64), d2 nchar(64), ...) TAGS (t1 binary(64));
```

- Initially, each message was written individually, but this did not meet our performance requirements. We switched to batch writing: after accumulating a batch of 100 rows, we wrote them in a single request, and this met our performance requirements.
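Batch writing combines many rows into one SQL statement. The following is a minimal sketch that builds such a statement for the supertable above, using TDengine's `INSERT INTO <subtable> USING <supertable> TAGS (...) VALUES (...) (...)` form. That form creates a device's subtable automatically on first write. Several assumptions apply. Only the columns `ts`, `d1` and `d2` are used, because the real definition elides the rest. The subtable naming scheme `d_<device_id>` is hypothetical, and so is storing the device ID in tag `t1`. Timestamps are epoch milliseconds.

```python
from typing import Dict, List, Sequence


def sql_literal(value) -> str:
    """Render a value as an SQL literal; strings are single-quoted.

    Simplification: strings containing quotes or backslashes are rejected
    instead of escaped (real code should use parameter binding).
    """
    if isinstance(value, str):
        if "'" in value or "\\" in value:
            raise ValueError(f"unsupported characters in {value!r}")
        return f"'{value}'"
    return str(value)


def build_batch_insert(rows: Sequence[Dict], stable: str = "device_data_point_0") -> str:
    """Build one multi-row INSERT for a batch of rows.

    Each row is a dict with 'device_id', 'ts' (epoch ms), 'd1' and 'd2'.
    Rows are grouped per device; 'd_<device_id>' is a hypothetical subtable
    name created from the supertable via USING ... TAGS if it does not exist.
    """
    by_device: Dict[str, List[Dict]] = {}
    for row in rows:
        by_device.setdefault(row["device_id"], []).append(row)  # keeps first-seen order

    parts = []
    for device_id, device_rows in by_device.items():
        values = " ".join(
            f"({r['ts']}, {sql_literal(r['d1'])}, {sql_literal(r['d2'])})" for r in device_rows
        )
        parts.append(f"d_{device_id} USING {stable} TAGS ({sql_literal(device_id)}) VALUES {values}")
    return "INSERT INTO " + " ".join(parts) + ";"
```

A batch of 100 rows therefore becomes a single statement, and a single request, instead of 100.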

Test Conclusion

We settled on batch writing with a configurable batch size, with TDengine running on a single node (8 cores, 32 GB RAM, 500 GB storage) in its default configuration and data written through the RESTful API. Writing at a sustained 4k messages/s caused no issues, and the peak was 7k messages/s. Because a single message contains too much information for one row, each message is split into 30 rows before being written to TDengine. The actual peak write rate is therefore 210k rows/s, which is higher than what we achieved with our HBase cluster. With fewer resources, better performance, and a simpler architecture that needs less maintenance, we were able to reduce costs significantly.
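The peak row rate follows directly from the peak message rate and the per-message split:

$$
7{,}000\ \tfrac{\text{messages}}{\text{s}} \times 30\ \tfrac{\text{rows}}{\text{message}} = 210{,}000\ \tfrac{\text{rows}}{\text{s}}
$$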

Migration Plan

We transitioned devices from HBase to TDengine gradually to ensure business continuity.

Data Double Write

There is no off-the-shelf tool for migrating data from HBase to TDengine, and developing one would be too expensive for a one-time activity.

We did not want to spend development resources on a migration tool. We also needed a transition period during which, if TDengine ran into problems, we could quickly switch back to HBase without affecting business functions. So we decided to keep HBase running and write data to both TDengine and HBase.
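The following is a minimal sketch of the double write, assuming both stores sit behind a hypothetical `write(rows)` interface. The error policy is also an assumption, not something the migration above specifies. HBase stays the system of record during the transition, so a TDengine failure is counted but does not fail the write, and business functions can keep running on HBase.

```python
from typing import Dict, List, Protocol


class Store(Protocol):
    """Hypothetical minimal interface shared by the HBase and TDengine writers."""

    def write(self, rows: List[Dict]) -> None: ...


class DualWriter:
    """Write every batch to both stores during the transition period."""

    def __init__(self, hbase: Store, tdengine: Store) -> None:
        self.hbase = hbase
        self.tdengine = tdengine
        self.tdengine_failures = 0

    def write(self, rows: List[Dict]) -> None:
        self.hbase.write(rows)  # existing path: an HBase error still propagates
        try:
            self.tdengine.write(rows)
        except Exception:
            # Assumed policy: isolate TDengine errors so HBase remains usable;
            # a real system would log and alert here.
            self.tdengine_failures += 1
```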

Write Method

Based on our test results, we decided to write data in batches:

- Data for different device types is processed in parallel

- Data reported by devices is put into a queue by the consumer

- When the queue length reaches n or the waiting time exceeds t, the queued data is taken out and written as one batch

Stress testing showed that with n = 1,000 and t = 500 ms, a single batch write almost always completed within 10 ms. This means a single device type can sustain tens of thousands of writes per second, leaving room for further optimization.
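The flush rule above can be sketched as a small buffer class. In this minimal, single-threaded sketch, the class name `BatchQueue`, the injected `flush` callback and the `poll` method are hypothetical. One instance per device type is assumed, each owned by its own worker, which is how device types can be processed in parallel. The clock is injectable so the timing rule can be tested.

```python
import time
from typing import Callable, Dict, List, Optional


class BatchQueue:
    """Buffer rows for one device type; flush at n rows or once the oldest row is t seconds old.

    Not thread-safe: assumes one worker owns each queue.
    """

    def __init__(self, flush: Callable[[List[Dict]], None], n: int = 1000, t: float = 0.5,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.flush_fn = flush
        self.n = n
        self.t = t
        self.clock = clock
        self.buffer: List[Dict] = []
        self.first_enqueued: Optional[float] = None

    def put(self, row: Dict) -> None:
        if not self.buffer:
            self.first_enqueued = self.clock()  # start the wait timer on the first row
        self.buffer.append(row)
        self._maybe_flush()

    def poll(self) -> None:
        """Call periodically (e.g. from a timer) so a partial batch is flushed after t."""
        self._maybe_flush()

    def _maybe_flush(self) -> None:
        if not self.buffer:
            return
        if len(self.buffer) >= self.n or self.clock() - self.first_enqueued >= self.t:
            self.flush()

    def flush(self) -> None:
        batch, self.buffer = self.buffer, []
        self.first_enqueued = None
        if batch:
            self.flush_fn(batch)
```

With n = 1,000 and a write time of about 10 ms, a queue that flushes back-to-back could in principle move up to 1,000 / 0.01 s = 100,000 rows/s. This is consistent with "tens of thousands" per device type once other overheads are included.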

Query Switch

To ensure a smooth migration with no incomplete data before or after the cutover, we implemented a query switch:

- Configure T, the time at which the TDengine feature went online.

- Compare the time range of each query request with T to decide whether to query HBase or TDengine.

- After the transition period ends, stop the HBase service.
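The routing decision can be sketched as a pure function of the query range and T. In this minimal sketch, the function name, the half-open `[start_ms, end_ms)` ranges in epoch milliseconds, and the splitting of a range that spans T into two sub-queries are all assumptions, since the steps above only say which store to check. Data written since T exists in both stores because of the double write, so TDengine serves it.

```python
from typing import List, Tuple

Route = Tuple[str, int, int]  # (backend, start_ms, end_ms), end exclusive


def route_query(start_ms: int, end_ms: int, online_ms: int) -> List[Route]:
    """Decide which store(s) serve [start_ms, end_ms) given TDengine online time T.

    Before T the data exists only in HBase; from T onward TDengine has it.
    A range spanning T is split (an assumption for this sketch).
    """
    if end_ms <= online_ms:
        return [("hbase", start_ms, end_ms)]
    if start_ms >= online_ms:
        return [("tdengine", start_ms, end_ms)]
    return [("hbase", start_ms, online_ms), ("tdengine", online_ms, end_ms)]
```

Once the transition period ends and all queried ranges start after T, every request routes to TDengine, and HBase can be stopped.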

After migration, the schema becomes as follows:

Current Deployment Status

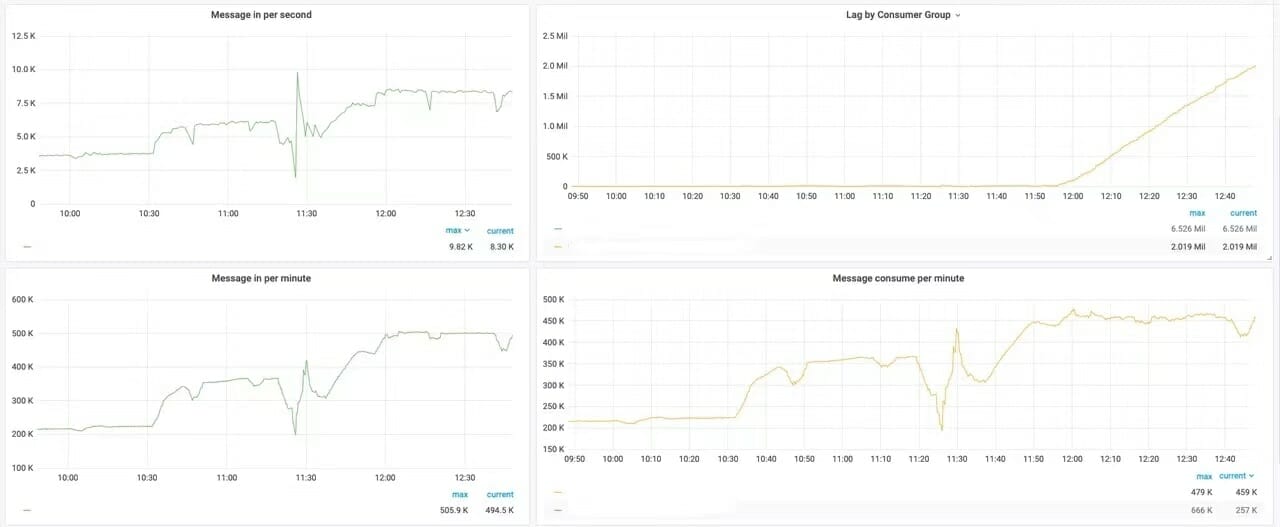

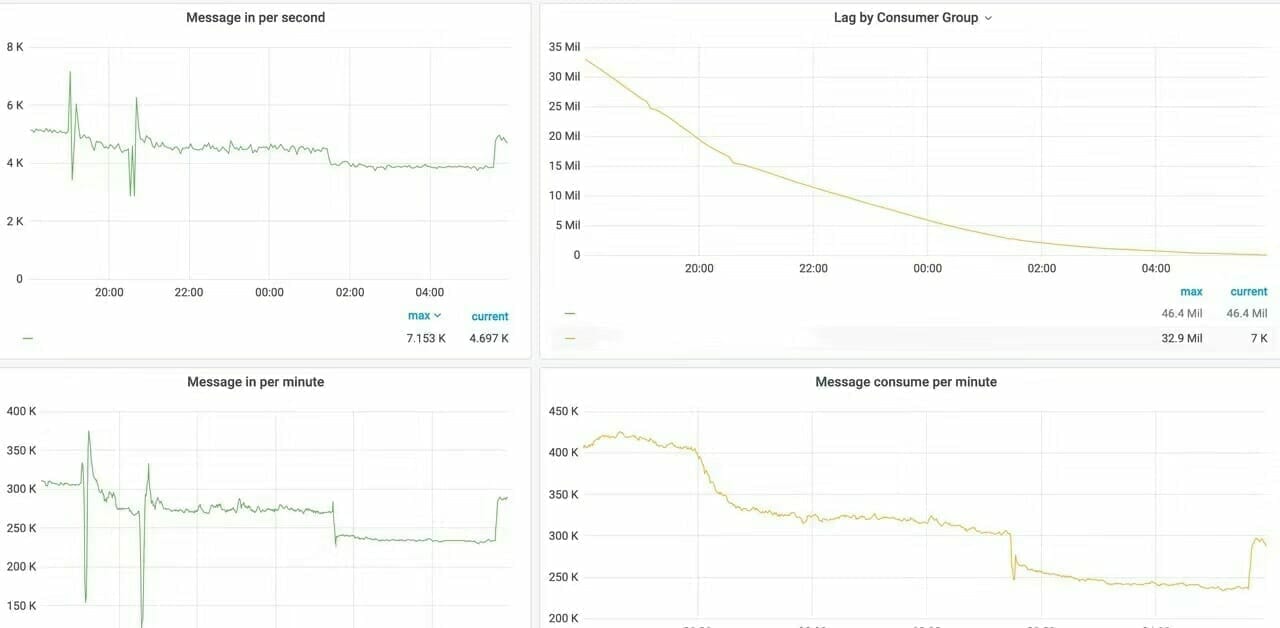

Since we switched some online devices to the TDengine cluster, cluster performance has been stable.

Compared with HBase:

- Query speed has improved significantly. A query for 24 hours of data from a single device takes seconds on HBase but milliseconds on TDengine.

- TDengine uses 50% less storage space than HBase for daily incremental data.

- The cost of cluster computing resources is more than 60% lower with TDengine compared to HBase.

Conclusion

- Overall, TDengine's read and write performance is very good. Beyond meeting our business needs, it significantly reduces computing resources and operation and maintenance costs. So far we use only TDengine's basic features, and we are looking into some of its advanced features.

- We have encountered issues such as schema changes affecting data writes during application releases, as well as unexpected write exceptions. In addition, unclear exception definitions make it difficult to locate problems, especially schema-related write problems.

- For monitoring, TDengine currently supports fewer metrics than we would like, though more will be available in future versions. (Note: TDinsight has since been released for TDengine monitoring to address this.)

- For data migration, there are currently few officially supported tools, so migrating data from other storage engines to TDengine is not easy and requires additional development. (Note: The TDengine team has since released a plugin for DataX, an open-source data migration tool.)