iLink, is the industrial vehicle networking application of LiuGong which used MySQL as the database engine. But processing speed was extremely slow and the database was prone to downtime, due to unreasonably complex queries at the application layer and very high-frequency writing of historical data. As a result user experience suffered and LiuGong’s search for a time-series data solution led to TDengine.

Project Introduction

iLink is an industrial IoV (Internet of Vehicles) application for LiuGong’s industrial vehicle business in the international market. It allows users to see real-time dynamics of all vehicle equipment, such as location and operational status among many other parameters. By allowing remote monitoring of equipment it also facilitates efficient service and maintenance planning.

iLink was based on MySQL which was the backend storage engine for most of the business and was used by the application layer as well as the analysis layer. Due to complex queries and high-frequency writing of historical data, MySQL was slow and unresponsive and prone to downtime. This had led to a very poor end-user experience. The reason is that relational databases such as MySQL are not optimal for storing massive time series data, and are inefficient in massive data aggregation, downsampling and other operations.

To solve the above problems, we decided to use a dedicated time series database (TSDB) to store and process time series data which we hoped would increase the throughput and stability of the entire system. After comprehensive investigation, we chose TDengine since our use case is very consistent with TDengine’s concept of “one device, one table”. TDengine also supports aggregation and downsampling queries on big data and would resolve our pain points.

TDengine in Production

Our architecture works as follows: data is collected mainly from Tbox and other devices. Data is parsed into JSON by a parsing layer and sent to Kafka. A warehousing program then consumes this data and writes it to TDengine.

We deployed TDengine 2.4.0.16 version, in single-copy mode, on a 4-core 8GB+1TB server. Current disk usage is about 110G.

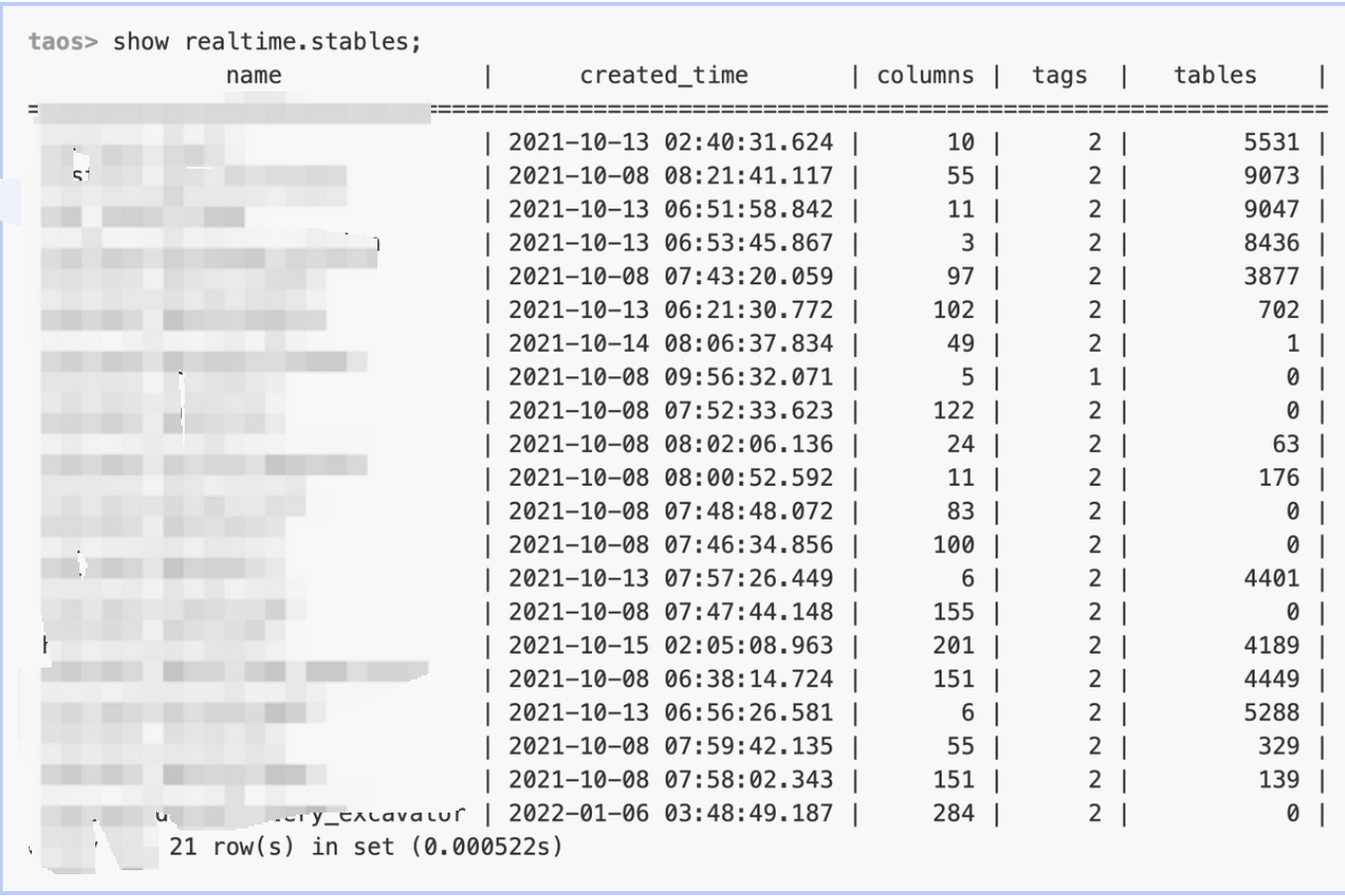

At present, the total number of tables in the library has reached 55,701. Adhering to the principle of “one table for one data collection point”, we chose different dimensions and established a total of 21 supertables, such as: vehicle type, equipment type, data type. The current total data volume is about 400-500 million rows, and the writing frequency is about every 5 minutes for each vehicle. TDengine can easily deal with this level of writing.

In terms of queries, we provide query services by using APIs and this has provided relief from our business pain points. When deciding to replace MySQL, we did extensive query comparison testing.

Database Query Test

| Query | Query Type | Latency (ms) |

|---|---|---|

| Query MCU historical data | Normal filter query | MySQL: 926.8 TDengine: 23.1 |

| Query Tbox historical data | Normal filter query | MySQL: 30.6 TDengine: 12.7 |

| Query device tracking | Downsampling, slicing, and aggregation | MySQL: query not possible TDengine: 2.8 |

| Query equipment failure history | Normal filter query | MySQL: 21153 TDengine: 24.3 |

As shown in the figure above, there are mainly four functions in which the query capability of TDengine is far greater than that of MySQL.

Here is an example of how deploying TDengine has transformed our user experience. We have some business reports which count the data of all devices every hour. This process often takes more than an hour in MySQL and this had a very negative impact on the business. After switching to TDengine, this process only takes about 10 seconds.

TDengine in Practice – Issues and Experience

As can be seen from the above figure, the number of collection parameters i.e. the number of columns for each type of equipment is relatively large and often in the hundreds. This indirectly affects the compression rate which we discovered later while investigating other issues with TDengine staff.

We were able to ascertain the reason for this. Each Vnode in TDengine has its own buffer. The buffer size is determined by the value of cache * blocks (in MB). Data is written to file when 1/3 of the cache is full and data is compressed during writing. When the number of columns is very high, the length of a single row is very large and not many rows of data are needed to fill 1/3 of the cache and trigger the write to file. This means there are too few samples which leads to poor compression.

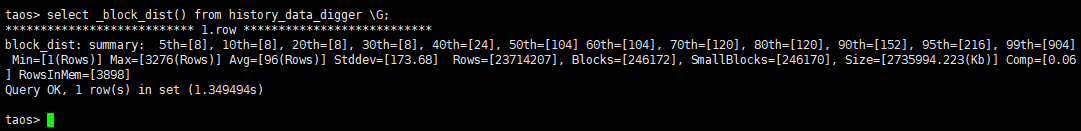

When you view the distribution of data blocks using “select_block_dist()” from the supertable, you can see that more than 99% of all blocks are SmallBlocks.

We were able to resolve this by increasing the blocks parameter (default is 6) for new data. As for historical data, we plan to use the defragmentation compression provided by TDengine to do this.

This operation is relatively simple. After backing up the data files, use compact vgroups in (3,4,5,6) (the number is the Vgroup ID). However this operation needs to be conducted during planned downtime so as not to impact the business.

Summary

We are planning projects related to vehicle maintenance which will improve vehicle utilization and safety, for which TDengine will be the backbone. We will plan to have more in-depth interactions with TDengine as our global business grows.