A Europe-based connected vehicles company’s data storage system was under great pressure to process massive amounts of vehicle location tracking data—there were about 20 million location records per minute. The Elasticsearch platform they used not only caused a serious waste of physical resources, but had a query bottleneck, so there was an urgent need to find a more efficient database.

Project Introduction

A vehicle location tracking project faced the prospect of consuming 80% of its storage in less than half a month. The volume of vehicle location data had reached 20 million records/minute and it was overwhelming the Elasticsearch cluster. The cluster consisted of 15 nodes with each node being 32 cores, 48GB RAM and 2 TB of disk space. IO operations were leading to instability of the PaaS layer and also affecting other dependent resources, such as cloud hosts and middleware. It was clear that some changes needed to be made immediately.

Given the volume of data, characteristics of data and the massive processing requirements, the company concluded that what was needed was a time series database (TSDB). They made the choice of TDengine based on the following dimensions:

- Performance Improvement – Data ingestion, query and storage performance needed to be improved.

- Cost Reduction – we needed to reduce hardware and/or cloud service costs

- Simple Architecture – We needed to simplify the architecture of the system.

- Scalability/High Availability – We needed open source software that included clustering.

Performance Testing

The company wanted to take advantage of the write performance of TDengine and wanted to run stress tests. They selected location data generated by some business scenarios for the write performance testing. The testing environment is shown in the following figure:

- TDengine cluster server: (4 CPU, 8G RAM, 400G Disk) * 1(16 CPU, 24G RAM, 400G Disk) * 1

- TDengine version: 2.0.19.1

- Elasticsearch server: (48 CPU 500G RAM 3.5T Disk) *1

They use 400,000 records/day/vehicle, for a total of 150 days and for 120 vehicles. The total number of records written was 7.2 billion, and the total number of threads was 40. The final performance comparison between TDengine and Elasticsearch for writing and storage is as follows:

| Total Records | Total Time | Records/Second | Storage | |

|---|---|---|---|---|

| TDengine | 7.2 billion | 2 h 53 m | 690,000 | 1142 GB (two copies) |

| Elasticsearch | 1.67 billion | 1 h 43 m | 270,000 | 1004 GB |

Summary: Compared with Elasticsearch, the storage space efficiency of TDengine is increased by 8 times, and the write performance is increased by 2.5 times.

During initial testing they used default values for the TDengine configuration parameter “blocks” which is the number of cache blocks per vnode. In the subsequent test, they modified it to 100 to match our business needs and optimize TDengine.

Following the write performance test, they tested the query performance of TDengine along the following dimensions:

- per day

- per vehicle

- per day per vehicle

The test results are shown in the following figure:

| TDengine | Elasticsearch | ||||

|---|---|---|---|---|---|

| Records | Latency | Records | Latency | ||

| Per Day | COUNT(*) | 48 million | 71 ms | 22.42 million | 3100 ms |

| SELECT(*) | 48 million | 296 ms | 22.42 million | 1789 ms | |

| Per Vehicle | COUNT(*) | 60 million | 683 ms | 21.16 million | 3740 ms |

| SELECT(*) | 60 million | 403 ms | 21.16 million | 1373 ms | |

| Per Vehicle Per Day | COUNT(*) | 400,000 | 89 ms | 335,000 | 373 ms |

| SELECT(*) | 400,000 | 2.8 s | 335,000 | 35 s | |

Summary: Compared to Elasticsearch, “count(*)” query performance of TDengine is improved by 4 times, and “select*” query performance is improved by 3-10 times.

There were several other advantages of going with TDengine. Firstly it supports one of our business requirements i.e. querying the data of a single device for a certain period of time. Secondly, its query language supports SQL which reduced our learning cost to nearly zero. Thirdly it has almost zero maintenance and management costs. It is also an open source platform and provides transparency and has a large developer community. Lastly the stability and security of TDengine have been verified by many companies, and it is a relatively mature product.

After comprehensive testing and taking into account several perspectives, we finally chose to integrate TDengine into our system.

Actual Deployment

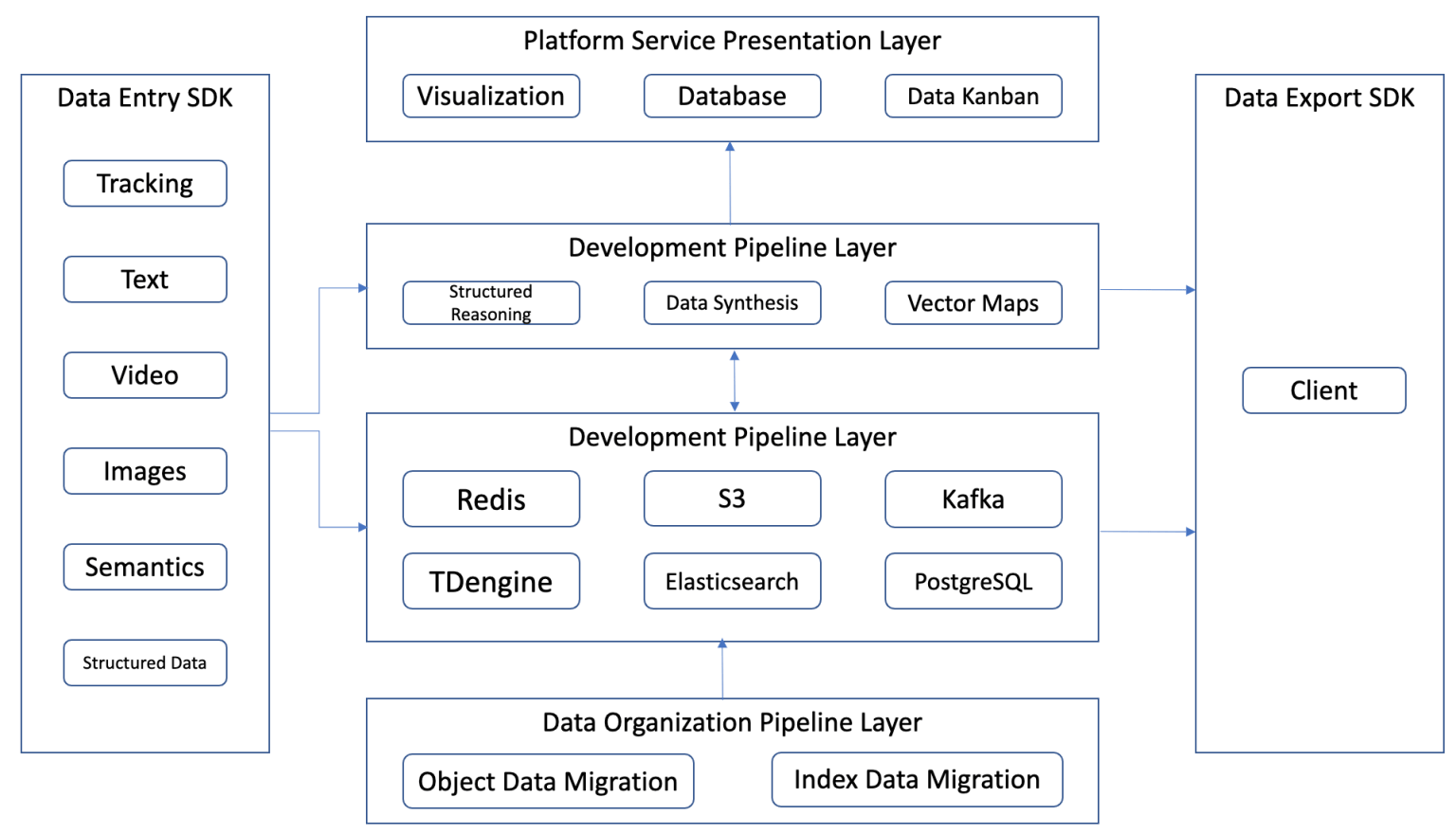

After connecting to TDengine, the business needs to write a large amount of location tracking data in near real time can be easily achieved, and the data department can generate billions of location tracking data every day. While meeting the storage requirements, the query performance can also meet the use of downstream business teams. The overall architecture diagram is as follows:

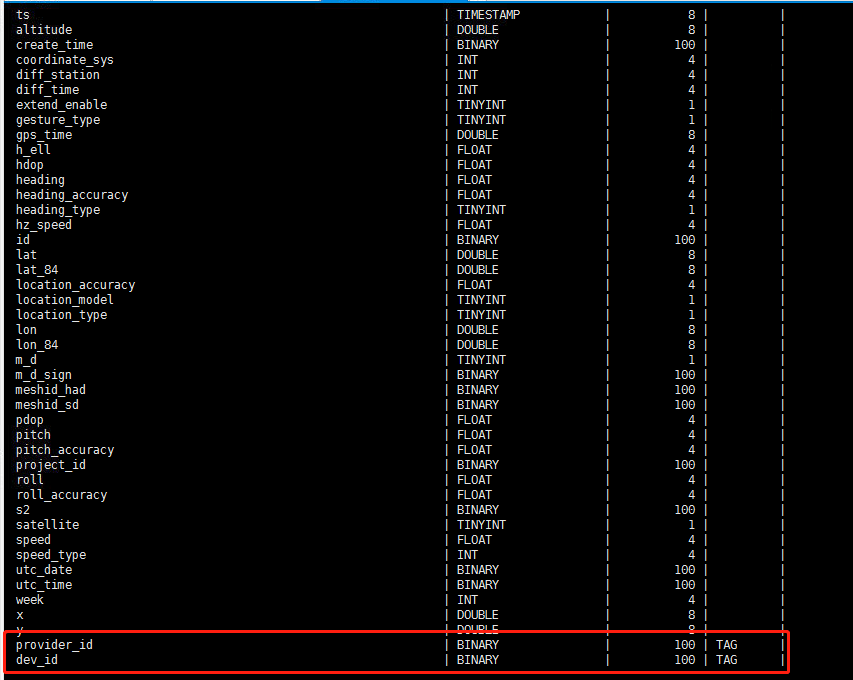

The schema for the table reflects the index relationship based on the tags of the device id and the provider id of the vehicle.

TDengine still has very high performance after several months of being deployed. Taking storage performance as an example, when we used Elasticsearch cluster, 15 nodes could only support data storage for 3 months. After deploying TDengine, a 7-node cluster with the same configuration has supported data storage for 5 months.

Working with TDengine

The process of migration was not at all difficult. TDengine supports several programming languages, and supports standard SQL, similar to MySQL. This greatly reduces the resource cost and labor cost required for migration. The company developed its own migration tool based on TDengine APIs and the migration of 300T+ data was completed in a short period of time.

In terms of specific implementation, the migration tool uses multi-threading to read the data from CSV, and then write it to the new cluster in batches. The sample code is shown in the following figure:

The company did encounter some issues with the implementation of TDengine but were able to resolve them with support from TDengine’s engineers.

One of the use cases was to “draw a rectangular box on the page”. The team tried querying the latitude and longitude directly to obtain tracking data. However the latency was unusably high. The SQL query was as follows:

In order to resolve this, they use Spark to dynamically obtain the number of trajectory points of a certain vehicle provider through latitude and longitude, and achieve the final effect by drawing time points:

They did encounter linear decline in query performance for multi-dimensional and large-scale queries but were able to resolve these issues by going through the extremely comprehensive TDengine documentation especially on cluster configuration and data modeling. The team then implemented several optimizations that resolved their query performance issues.

Conclusion

Based on the excellent performance of TDengine in their current business application, the company will consider migrating larger-scale trajectory data and time series data from other businesses into TDengine in the future. They also hope to leverage TDengine to mine the company’s internal data and extract value, accelerate the development of the EV charging business, and help Location Based Services to win more customers and provide value to our existing and future customers.